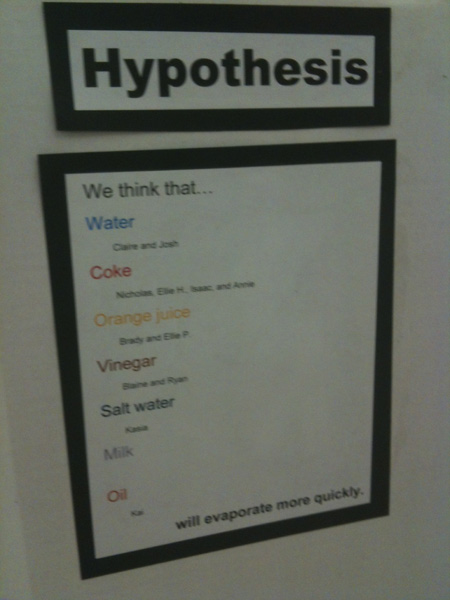

User Research – The Importance of Hypotheses

It is tempting to look at the objective of your user research and pump out a solution that fits your best idea of how to achieve it. Experienced professionals can be quite good at this, but they can also be very bad at it.

It is better to take your objectives and generate some hypothetical situations and then test those hypotheses with your users before turning them into concrete action. This gives you (and hopefully your clients) more confidence in your ideas or it highlights the need for changing those hypotheses because they don’t work in reality.

Let’s say that your objective is to create a network where people can access a short part of a full text (say, a chapter) before they decide whether to buy the text, rather like Amazon does.

You can create some simple hypotheses around this objective in a few minutes of brainstorming.

User-Attitude

We think that people would like to share their favourite clips with others on Facebook and Twitter.

User-Behaviour

We think that people will only share their favourite authors and books. They won’t share things that aren’t important to them.

User-Social Context

We think that people will be more likely to share their favourite authors and books if they are already popular with other users.

Why does this matter?

One of the things about design projects is that when you have a group of intelligent, able and enthusiastic developers, stakeholders, etc., they all bring their own biases and understanding to the table when determining the objectives for a project. Those objectives may be completely sound, but the only way to know this is to test those ideas with your users.

You cannot force a user to meet your objectives. You have to shape your objectives to what a user wants/needs to do with your product.

What happens to our product if our users don’t want to share their reading material with others? What if they feel that Facebook, Twitter, etc. are platforms where they want to share images and videos but not large amounts of text?

If you generate hypotheses for your user research, you can test them at the relevant stage of research. The benefits include:

- Articulating a hypothesis makes it easy for your team to be sure that you’re testing the right thing.

- Articulating a hypothesis often guides us to a quick solution as to how to test that hypothesis.

- It is easy to communicate the results of your research against these hypotheses. For example:

  - We thought people would want to share their favourite authors on social networks and they did.

  - We believed that the popularity of an author would relate to their “sharability” but we found that most readers wanted to emphasize their own unique taste and are more likely to share obscure but moving works than those already in the public eye.

Header Image: Author/Copyright holder: Dave. Copyright terms and licence: CC BY-NC-ND 2.0

User Experience: The Beginner’s Guide

Get Weekly Design Tips

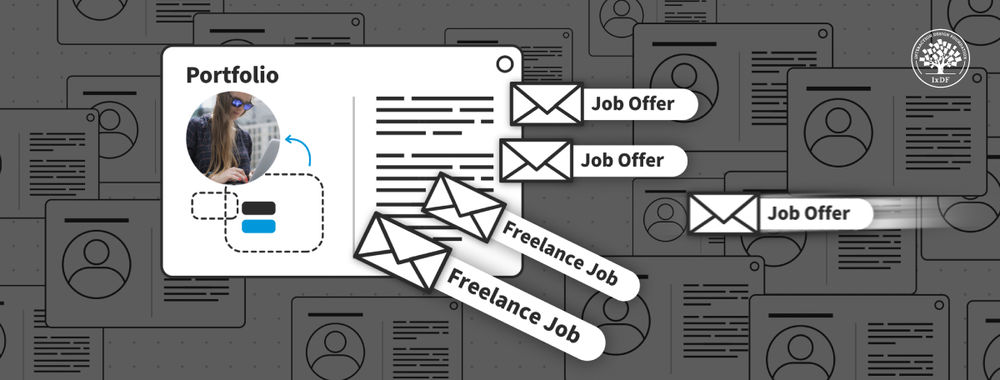

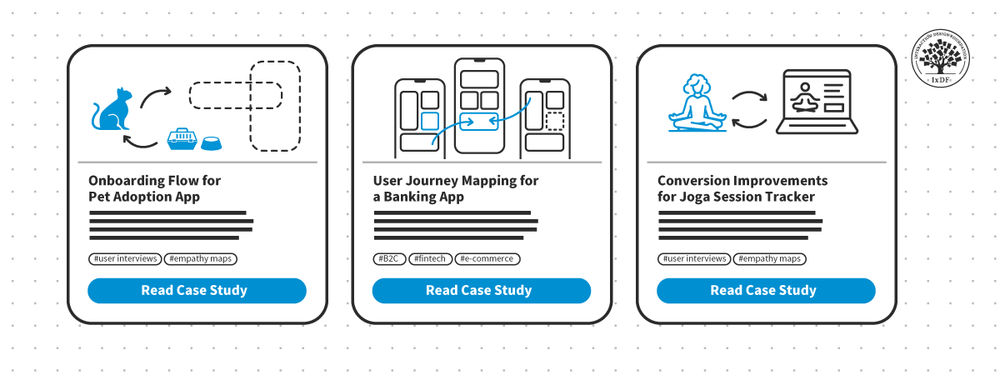

What you should read next, picture perfect: how to use visuals to elevate your ux/ui design portfolio case studies.

4 Tips to Amplify the Potential of Your UX/UI Design Portfolio

No Experience? No Problem! 3 Ways to Find Projects for Your UX/UI Design Portfolio Case Studies

The Myths of Mobile Design and Why They Matter

5 Steps for Human-Centered Mobile Design

Customer Journey Maps — Walking a Mile in Your Customer’s Shoes

- 1.1k shares

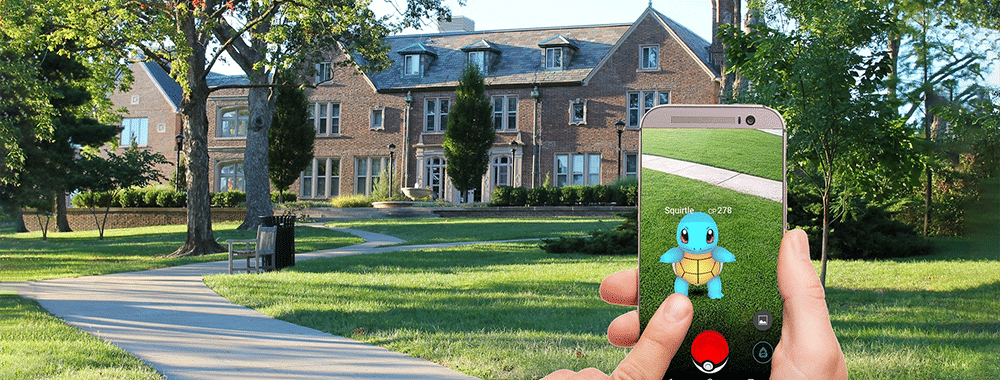

How to Design for AR Experiences on the Go

Your Gateway to UX Design: Norman Doors

- 2 weeks ago

How to Select the Best Idea by the End of an Ideation Session

- 3 weeks ago

Revolutionize UX Design with VR Experiences

Open Access—Link to us!

We believe in Open Access and the democratization of knowledge . Unfortunately, world-class educational materials such as this page are normally hidden behind paywalls or in expensive textbooks.

If you want this to change , cite this article , link to us, or join us to help us democratize design knowledge !

Privacy Settings

Our digital services use necessary tracking technologies, including third-party cookies, for security, functionality, and to uphold user rights. Optional cookies offer enhanced features, and analytics.

Experience the full potential of our site that remembers your preferences and supports secure sign-in.

Governs the storage of data necessary for maintaining website security, user authentication, and fraud prevention mechanisms.

Enhanced Functionality

Saves your settings and preferences, like your location, for a more personalized experience.

Referral Program

We use cookies to enable our referral program, giving you and your friends discounts.

Error Reporting

We share user ID with Bugsnag and NewRelic to help us track errors and fix issues.

Optimize your experience by allowing us to monitor site usage. You’ll enjoy a smoother, more personalized journey without compromising your privacy.

Analytics Storage

Collects anonymous data on how you navigate and interact, helping us make informed improvements.

Differentiates real visitors from automated bots, ensuring accurate usage data and improving your website experience.

Lets us tailor your digital ads to match your interests, making them more relevant and useful to you.

Advertising Storage

Stores information for better-targeted advertising, enhancing your online ad experience.

Personalization Storage

Permits storing data to personalize content and ads across Google services based on user behavior, enhancing overall user experience.

Advertising Personalization

Allows for content and ad personalization across Google services based on user behavior. This consent enhances user experiences.

Enables personalizing ads based on user data and interactions, allowing for more relevant advertising experiences across Google services.

Receive more relevant advertisements by sharing your interests and behavior with our trusted advertising partners.

Enables better ad targeting and measurement on Meta platforms, making ads you see more relevant.

Allows for improved ad effectiveness and measurement through Meta’s Conversions API, ensuring privacy-compliant data sharing.

LinkedIn Insights

Tracks conversions, retargeting, and web analytics for LinkedIn ad campaigns, enhancing ad relevance and performance.

LinkedIn CAPI

Enhances LinkedIn advertising through server-side event tracking, offering more accurate measurement and personalization.

Google Ads Tag

Tracks ad performance and user engagement, helping deliver ads that are most useful to you.

Share Knowledge, Get Respect!

or copy link

Cite according to academic standards

Simply copy and paste the text below into your bibliographic reference list, onto your blog, or anywhere else. You can also just hyperlink to this article.

New to UX Design? We’re giving you a free ebook!

Download our free ebook The Basics of User Experience Design to learn about core concepts of UX design.

In 9 chapters, we’ll cover: conducting user interviews, design thinking, interaction design, mobile UX design, usability, UX research, and many more!

New to UX Design? We’re Giving You a Free ebook!

Integrations

What's new?

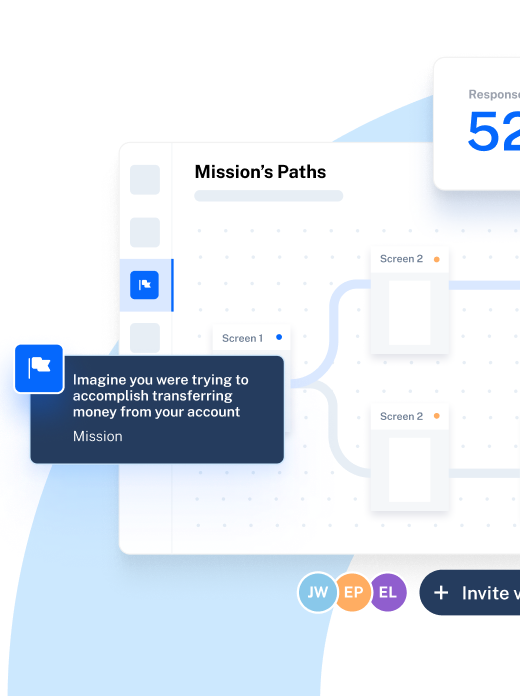

In-Product Prompts

Participant Management

Interview Studies

Prototype Testing

Card Sorting

Tree Testing

Live Website Testing

Automated Reports

Templates Gallery

Choose from our library of pre-built mazes to copy, customize, and share with your own users

Browse all templates

Financial Services

Tech & Software

Product Designers

Product Managers

User Researchers

By use case

Concept & Idea Validation

Wireframe & Usability Test

Content & Copy Testing

Feedback & Satisfaction

Content Hub

Educational resources for product, research and design teams

Explore all resources

Question Bank

Maze Research Success Hub

Guides & Reports

Help Center

Future of User Research Report

The Optimal Path Podcast

User Research

Mar 21, 2024

Creating a research hypothesis: How to formulate and test UX expectations

A research hypothesis helps guide your UX research with focused predictions you can test and learn from. Here’s how to formulate your own hypotheses.

Armin Tanovic

All great products were once just thoughts—the spark of an idea waiting to be turned into something tangible.

A research hypothesis in UX is very similar. It’s the starting point for your user research; the jumping off point for your product development initiatives.

Formulating a UX research hypothesis helps guide your UX research project in the right direction, collect insights, and evaluate not only whether an idea is worth pursuing, but how to go after it.

In this article, we’ll cover what a research hypothesis is, how it's relevant to UX research, and the best formula to create your own hypothesis and put it to the test.


What defines a research hypothesis?

A research hypothesis is a statement or prediction that needs testing to be proven or disproven.

Let’s say you’ve got an inkling that making a change to a feature icon will increase the number of users that engage with it. With some minor adjustments, this theory becomes a research hypothesis: “Adjusting Feature X’s icon will increase daily average users by 20%”.

A research hypothesis is the starting point that guides user research. It takes your thought and turns it into something you can quantify and evaluate. In this case, you could conduct usability tests and user surveys, and run A/B tests to see if you’re right or, just as importantly, wrong.

A good research hypothesis has three main features:

- Specificity: A hypothesis should clearly define what variables you’re studying and what you expect an outcome to be, without ambiguity in its wording

- Relevance: A research hypothesis should have significance for your research project by addressing a potential opportunity for improvement

- Testability: Your research hypothesis must be able to be tested in some way such as empirical observation or data collection

What is the difference between a research hypothesis and a research question?

Research questions and research hypotheses are often treated as one and the same, but they’re not quite identical.

A research hypothesis acts as a prediction or educated guess of outcomes, while a research question poses a query on the subject you’re investigating. Put simply, a research hypothesis is a statement, whereas a research question is (you guessed it) a question.

For example, here’s a research hypothesis: “Implementing a navigation bar on our dashboard will improve customer satisfaction scores by 10%.”

This statement is a testable prediction; it doesn’t pose a question. Here’s what the same hypothesis would look like as a research question: “Will integrating a navigation bar on our dashboard improve customer satisfaction scores?”

The distinction is minor, and both are focused on uncovering the truth behind the topic, but they’re not quite the same.

Why do you use a research hypothesis in UX?

Research hypotheses in UX are used to establish the direction of a particular study, research project, or test. Formulating a hypothesis and testing it ensures the UX research you conduct is methodical, focused, and actionable. It aids every phase of your research process, acting as a north star that guides your efforts toward successful product development.

Typically, UX researchers will formulate a testable hypothesis to help them fulfill a broader objective, such as improving customer experience or product usability. They’ll then conduct user research to gain insights into their prediction and confirm or reject the hypothesis.

A proven or disproven hypothesis will tell you whether your prediction is right, and whether you should move forward with your proposed design or go back to the drawing board.

Formulating a hypothesis can be helpful in anything from prototype testing to idea validation, and design iteration. Put simply, it’s one of the first steps in conducting user research.

Whether you’re in the initial stages of product discovery for a new product, a single feature, or conducting ongoing research, a strong hypothesis presents a clear purpose and angle for your research. It also helps you understand which user research methodology to use to get your answers.

What are the types of research hypotheses?

Not all hypotheses are built the same—there are different types with different objectives. Understanding the different types enables you to formulate a research hypothesis that outlines the angle you need to take to prove or disprove your predictions.

Here are some of the different types of hypotheses to keep in mind.

Null and alternative hypotheses

While a normal research hypothesis predicts that a specific outcome will occur based upon a certain change of variables, a null hypothesis predicts that no difference will occur when you introduce a new condition.

By that reasoning, a null hypothesis would be:

- Adding a new CTA button to the top of our homepage will make no difference in conversions

Null hypotheses are useful because they help outline what your test or research study is trying to disprove, rather than prove, through a research hypothesis.

An alternative hypothesis states the exact opposite of a null hypothesis. It proposes that a certain change will occur when you introduce a new condition or variable. For example:

- Adding a CTA button to the top of our homepage will cause a difference in conversion rates
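As a rough illustration of how the null hypothesis ("no difference in conversions") might be checked against A/B test data, here is a minimal sketch using a two-proportion z-test. The function name and the visitor and conversion counts are hypothetical, and the 0.05 threshold is a common convention rather than something the article prescribes.

```python
import math

def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Two-sided z-test for a difference between two conversion rates.

    conv_a / n_a: conversions and visitors without the CTA button (control)
    conv_b / n_b: conversions and visitors with the CTA button (variant)
    """
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Under the null hypothesis both groups share one conversion rate.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value

# Hypothetical counts, for illustration only.
z, p_value = two_proportion_z_test(200, 4000, 260, 4000)
reject_null = p_value < 0.05  # assumed significance threshold
print(f"z = {z:.2f}, p = {p_value:.4f}, reject null: {reject_null}")
```

A small p-value is evidence against the null hypothesis and therefore in favour of the alternative hypothesis that the button makes a difference.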

Simple hypotheses and complex hypotheses

A simple hypothesis is a prediction that includes only two variables in a cause-and-effect sequence, with one variable dependent on the other. It predicts that you'll achieve a particular outcome based on a certain condition. The outcome is known as the dependent variable and the change causing it is the independent variable.

For example, this is a simple hypothesis:

- Including the search function on our mobile app will increase user retention

The expected outcome of increasing user retention is based on the condition of including a new search function. But, what happens when there are more than two factors at play?

We get what’s called a complex hypothesis. Instead of a simple condition and outcome, complex hypotheses include multiple results. This makes them a perfect research hypothesis type for framing complex studies or tracking multiple KPIs based on a single action.

Building upon our previous example, a complex research hypothesis could be:

- Including the search function on our mobile app will increase user retention and boost conversions
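One way to keep the simple/complex distinction visible in a research plan is to record each hypothesis as structured data. The sketch below is only an illustration: the `Hypothesis` class and its field names are assumptions, not part of any research tool.

```python
from dataclasses import dataclass, field

@dataclass
class Hypothesis:
    """A hypothesis as one change (independent variable) and its expected outcomes."""
    independent_variable: str                                  # the change you introduce
    dependent_variables: list = field(default_factory=list)   # the outcomes you expect to move

    def __post_init__(self):
        if not self.dependent_variables:
            raise ValueError("A hypothesis needs at least one expected outcome")

    @property
    def kind(self) -> str:
        # One outcome makes a simple hypothesis; several make a complex one.
        return "simple" if len(self.dependent_variables) == 1 else "complex"

simple = Hypothesis("search function on mobile app", ["user retention"])
complex_ = Hypothesis("search function on mobile app", ["user retention", "conversions"])
print(simple.kind, complex_.kind)
```

Listing the dependent variables explicitly also makes it clear which KPIs a study needs to track.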

Directional and non-directional hypotheses

Research hypotheses can also differ in the specificity of outcomes. Put simply, any hypothesis that has a specific outcome or direction based on the relationship of its variables is a directional hypothesis. That means that our previous example of a simple hypothesis is also a directional hypothesis.

Non-directional hypotheses don’t specify the outcome or difference the variables will see. They just state that a difference exists. Following our example above, here’s what a non-directional hypothesis would look like:

- Including the search function on our mobile app will make a difference in user retention

In this non-directional hypothesis, the direction of difference (increase/decrease) hasn’t been specified; we’ve just noted that there will be a difference.
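In statistical testing, this distinction usually shows up as a one-tailed versus a two-tailed test. The sketch below assumes you already have a z statistic from comparing retention between two groups; the function names and the example value of 1.8 are hypothetical.

```python
import math

def normal_cdf(x):
    """Cumulative distribution function of the standard normal distribution."""
    return 0.5 * (1 + math.erf(x / math.sqrt(2)))

def p_value_non_directional(z):
    """Two-tailed: a difference in either direction counts as evidence."""
    return 2 * (1 - normal_cdf(abs(z)))

def p_value_directional_increase(z):
    """One-tailed: only an increase counts as evidence."""
    return 1 - normal_cdf(z)

z = 1.8  # hypothetical test statistic
print(f"directional: {p_value_directional_increase(z):.4f}")
print(f"non-directional: {p_value_non_directional(z):.4f}")
```

For a positive z, the non-directional p-value is twice the directional one, so the same data can clear a 0.05 threshold under a directional hypothesis and miss it under a non-directional one. That is a reason to commit to the direction before running the study rather than after.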

The type of hypothesis you write helps guide your research—let’s get into it.

How to write and test your UX research hypothesis

Now that we’ve covered the types of research hypotheses, it’s time to get practical.

Creating your research hypothesis is the first step in conducting successful user research.

Here are the four steps for writing and testing a UX research hypothesis to help you make informed, data-backed decisions for product design and development.

1. Formulate your hypothesis

Start by writing out your hypothesis in a way that’s specific and relevant to a distinct aspect of your user or product experience. Meaning: your prediction should include a design choice followed by the outcome you’d expect—this is what you’re looking to validate or reject.

Your proposed research hypothesis should also be testable through user research data analysis. There’s little point in a hypothesis you can’t test!

Let’s say your focus is your product’s user interface—and how you can improve it to better meet customer needs. A research hypothesis in this instance might be:

- Adding a settings tab to the navigation bar will improve usability

By writing out a research hypothesis in this way, you’re able to conduct relevant user research to prove or disprove your hypothesis. You can then use the results of your research—and the validation or rejection of your hypothesis—to decide whether or not you need to make changes to your product’s interface.

2. Identify variables and choose your research method

Once you’ve got your hypothesis, you need to map out how exactly you’ll test it. Consider what variables relate to your hypothesis. In our case, the independent variable is adding a settings tab to the navigation bar, and the dependent variable is usability.

Once you’ve defined the relevant variables, you’re in a better position to decide on the best UX research method for the job. If you’re after metrics that signal improvement, you’ll want to select a method yielding quantifiable results, like usability testing. If your outcome is geared toward what users feel, then research methods for qualitative user insights, like user interviews, are the way to go.

3. Carry out your study

It’s go time. Now that you’ve got your hypothesis, identified the relevant variables, and outlined your method for testing them, you’re ready to run your study. This step involves recruiting participants for your study and reaching out to them through relevant channels like email, live website testing, or social media.

Given our hypothesis, our best bet is to conduct A/B and usability tests with a prototype that includes the additional UI elements, then compare the usability metrics to see whether users find navigation easier with or without the settings button.

We can also follow up with UX surveys to get qualitative insights and ask users how they found the task, what they preferred about each design, and to see what additional customer insights we uncover.

💡 Want more insights from your usability tests? Maze Clips enables you to gather real-time recordings and reactions of users participating in usability tests.

4. Analyze your results and compare them to your hypothesis

By this point, you’ve neatly outlined a hypothesis, chosen a research method, and carried out your study. It’s now time to analyze your findings and evaluate whether they support or reject your hypothesis.

Look at the data you’ve collected and what it means. Given that we conducted usability testing, we’ll want to look to some key usability metrics for an indication of whether the additional settings button improves usability.

For example, with the usability task of ‘In account settings, find your profile and change your username’, we can conduct task analysis to compare the times spent on task and misclick rates of the new design with those same metrics from the old design.

If you also conduct follow-up surveys or interviews, you can ask users directly about their experience and analyze their answers to gather additional qualitative data. Maze AI can handle the analysis automatically, but you can also manually read through responses to get an idea of what users think about the change.
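The comparison described above can be sketched in a few lines. Everything here is illustrative: the session records are made-up numbers, the field names are assumptions, and the misclick rate is taken to mean misclicks divided by total clicks, which your testing tool may define differently.

```python
from statistics import mean

def summarize(sessions):
    """Summarize usability sessions for one design.

    Each session is a dict with 'seconds' (time on task),
    'clicks' (total clicks) and 'misclicks'.
    """
    total_clicks = sum(s["clicks"] for s in sessions)
    total_misclicks = sum(s["misclicks"] for s in sessions)
    return {
        "mean_time_s": mean(s["seconds"] for s in sessions),
        "misclick_rate": total_misclicks / total_clicks,
    }

# Hypothetical results for the 'change your username' task.
old_design = [
    {"seconds": 48, "clicks": 8, "misclicks": 3},
    {"seconds": 62, "clicks": 10, "misclicks": 4},
    {"seconds": 55, "clicks": 9, "misclicks": 3},
    {"seconds": 71, "clicks": 12, "misclicks": 5},
]
new_design = [
    {"seconds": 30, "clicks": 5, "misclicks": 1},
    {"seconds": 41, "clicks": 6, "misclicks": 1},
    {"seconds": 36, "clicks": 5, "misclicks": 0},
    {"seconds": 33, "clicks": 6, "misclicks": 2},
]

old = summarize(old_design)
new = summarize(new_design)
print("old:", old)
print("new:", new)
```

With only four sessions per design, a difference like this is a signal worth following up rather than proof; larger samples or a statistical test would be needed before drawing firm conclusions.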

By comparing the findings to your research hypothesis, you can identify whether your research supports or rejects your hypothesis. If the majority of users struggled to find the settings page in usability tests of the existing design, but had a higher success rate with your new prototype, the results support your hypothesis.

However, it's also crucial to acknowledge if the findings refute your hypothesis rather than prove it as true. Ruling something out is just as valuable as confirming a suspicion.

In either case, make sure to draw conclusions based on the relationship between the variables and store findings in your UX research repository. You can conduct deeper analysis with techniques like thematic analysis or affinity mapping.

UX research hypotheses: four best practices to guide your research

Knowing the big steps for formulating and testing a research hypothesis ensures that your next UX research project gives you focused, impactful results and insights. But, that’s only the tip of the research hypothesis iceberg. There are some best practices you’ll want to consider when using a hypothesis to test your UX design ideas.

Here are four research hypothesis best practices to help guide testing and make your UX research systematic and actionable.

Align your hypothesis to broader business and UX goals

Before you begin to formulate your hypothesis, be sure to pause and think about how it connects to broader goals in your UX strategy. This ensures that your efforts and predictions align with your overarching design and development goals.

For example, implementing a brand new navigation menu for current account holders might work for usability, but if the wider team is focused on boosting conversion rates for first-time site viewers, there might be a different research project to prioritize.

Create clear and actionable reports for stakeholders

Once you’ve conducted your testing and proved or disproved your hypothesis, UX reporting and analysis is the next step. You’ll need to present your findings to stakeholders in a way that's clear, concise, and actionable. If your hypothesis insights come in the form of metrics and statistics, then quantitative data visualization tools and reports will help stakeholders understand the significance of your study, while setting the stage for design changes and solutions.

If you went with a research method like user interviews, a narrative UX research report including key themes and findings, proposed solutions, and your original hypothesis will help inform your stakeholders on the best course of action.

Consider different user segments

While getting enough responses is crucial for proving or disproving your hypothesis, you’ll want to consider which users will give you the highest quality and most relevant responses. Remember to consider user personas. For example, if you’re only introducing a change for premium users, exclude users who are on a free trial of your product from testing.

You can recruit and target specific user demographics with the Maze Panel, which enables you to search for and filter participants that meet your requirements. Doing so allows you to better understand how different users will respond to your hypothesis testing. It also helps you uncover specific needs or issues different users may have.

Involve stakeholders from the start

Before testing or even formulating a research hypothesis by yourself, ensure all your stakeholders are on board. Informing everyone of your plan to formulate and test your hypothesis does three things:

Firstly, it keeps your team in the loop. They’ll be able to inform you of any relevant insights, special considerations, or existing data they already have about your particular design change idea, or KPIs to consider that would benefit the wider team.

Secondly, informing stakeholders ensures seamless collaboration across multiple departments. Together, you’ll be able to fit your testing results into your overall CX strategy, ensuring alignment with business goals and broader objectives.

Finally, getting everyone involved enables them to contribute potential hypotheses to test. You’re not the only one with ideas about what changes could positively impact the user experience, and keeping everyone in the loop brings fresh ideas and perspectives to the table.

Test your UX research hypotheses with Maze

Formulating and testing out a research hypothesis is a great way to define the scope of your UX research project clearly. It helps keep research on track by providing a single statement to come back to and anchor your research in.

Whether you run usability tests or user interviews to assess your hypothesis, Maze's suite of advanced research methods enables you to get the in-depth user and customer insights you need.

Frequently asked questions about research hypotheses

What is the difference between a hypothesis and a problem statement in UX?

A problem statement identifies a specific issue in your design that you intend to solve. It will typically include a user persona, an issue they have, and a desired outcome they need. A research hypothesis, on the other hand, describes the prediction or method of solving that problem.

How many hypotheses should a UX research problem have?

Technically, there are no limits to the number of hypotheses you can have for a certain problem or study. However, you should limit it to one hypothesis per specific issue in UX research. This ensures that you can conduct focused testing and reach clear, actionable results.


UX Research: Objectives, Assumptions, and Hypothesis

by Rick Dzekman

An often neglected step in UX research

Introduction

UX research should always be done for a clear purpose – otherwise you’re wasting both your time and the time of your participants. But many people who do UX research fail to properly articulate the purpose in their research objectives. A major issue is that the research objectives include assumptions that have not been properly defined.

When planning UX research you have some goal in mind:

- For generative research it’s usually to find out something about users or customers that you previously did not know

- For evaluative research it’s usually to identify any potential issues in a solution

As part of this goal you write down research objectives that help you achieve that goal. But many researchers (especially more junior ones) miss some key steps:

- How will those research objectives help to reach that goal?

- What assumptions have you made that are necessary for those objectives to reach that goal?

- How does your research (questions, tasks, observations, etc.) help meet those objectives?

- What kind of responses or observations do you need from your participants to meet those objectives?

One approach people use is to write their objectives in the form of a research hypothesis. There are a lot of problems when trying to validate a hypothesis with qualitative research and sometimes even with quantitative.

This article focuses largely on qualitative research: interviews, user tests, diary studies, ethnographic research, etc. With qualitative research in mind let’s start by taking a look at a few examples of UX research hypotheses and how they may be problematic.

Research hypothesis

Example hypothesis: users want to be able to filter products by colour.

At first it may seem that there are a number of ways to test this hypothesis with qualitative research. For example we might:

- Observe users shopping on sites with and without colour filters and see whether or not they use them

- Ask users who are interested in our products about how they narrow down their choices

- Run a diary study where participants document the ways they narrowed down their searches on various stores

- Make a prototype with colour filters and see if participants use them unprompted

These approaches are all effective but they do not and cannot prove or disprove our hypothesis. It’s not that the research methods are ineffective; it’s that the hypothesis itself is poorly expressed.

The first problem is that there are hidden assumptions made by this hypothesis. Presumably we would be doing this research to decide between a choice of possible filters we could implement. But there’s no obvious link between users wanting to filter by colour and a benefit from us implementing a colour filter. Users may say they want it but how will that actually benefit their experience?

The second problem with this hypothesis is that we’re asking a question about “users” in general. How many users would have to want colour filters before we could say that this hypothesis is true?

Example Hypothesis: Adding a colour filter would make it easier for users to find the right products

This is an obvious improvement to the first example but it still has problems. We could of course identify further assumptions but that will be true of pretty much any hypothesis. The problem again comes from speaking about users in general.

Perhaps if we add the ability to filter by colour it might make the possible filters crowded and make it more difficult for users who don’t need colour to find the filter that they do need. Perhaps there is a sample bias in our research participants that does not apply broadly to our user base.

It is difficult (though not impossible) to design research that could prove or disprove this hypothesis. Any such research would have to be quantitative in nature. And we would have to spend time mapping out what it means for something to be “easier” or what “the right products” are.

Example Hypothesis: Travelers book flights before they book their hotels

The problem with this hypothesis should now be obvious: what would it actually mean for this hypothesis to be proved or disproved? What portion of travelers would need to book their flights first for us to consider this true?

Example Hypothesis: Most users who come to our app know where and when they want to fly

This hypothesis is better because it talks about “most users” rather than users in general. “Most” would need to be better defined but at least this hypothesis is possible to prove or disprove.

We could address this hypothesis with quantitative research. If we found out that it was true we could focus our design around the primary use case or do further research about how to attract users at different stages of their journey.

However, there is no clear way to prove or disprove this hypothesis with qualitative research. If the app has a million users and 15 of 20 research participants tell you that this is true, would your findings generalise to the entire user base? The margin of error on that finding is 20-25%, meaning that the true result could be closer to 50% or even 100%, depending on how unlucky you are with your sample.
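To make the arithmetic behind that margin of error concrete, here is a minimal sketch. It assumes a 95% confidence level (z = 1.96) and treats the 15 of 20 participants as a simple random sample of users, which qualitative recruitment rarely guarantees. Both function names are illustrative. The normal approximation gives a margin of roughly 19 percentage points, close to the range quoted above; the Wilson interval, which tends to behave better for small samples, is included for comparison.

```python
import math

def margin_of_error(successes, n, z=1.96):
    """Half-width of the normal-approximation confidence interval for a proportion."""
    p = successes / n
    return z * math.sqrt(p * (1 - p) / n)

def wilson_interval(successes, n, z=1.96):
    """Wilson score interval for a proportion."""
    p = successes / n
    denom = 1 + z**2 / n
    centre = (p + z**2 / (2 * n)) / denom
    half = z * math.sqrt(p * (1 - p) / n + z**2 / (4 * n**2)) / denom
    return centre - half, centre + half

moe = margin_of_error(15, 20)
low, high = wilson_interval(15, 20)
print(f"observed: 75%, margin of error: +/-{moe:.0%}")
print(f"Wilson 95% interval: {low:.0%} to {high:.0%}")
```

Either way, the plausible range spans from barely half of users to almost all of them, which is too wide to settle a claim about "most users".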

Example Hypothesis: Customers want their bank to help them build better savings habits

There are many things wrong with this hypothesis but we will focus on the hidden assumptions and the links to design decisions. Two big assumptions are that (1) it’s possible to find out what research participants want and (2) people’s wants should dictate what features or services to provide.

Research objectives

One of the biggest problems with using hypotheses is that they set the wrong expectations about what your research results are telling you. In Thinking, Fast and Slow, Daniel Kahneman points out that:

- “extreme outcomes (both high and low) are more likely to be found in small than in large samples”

- “the prominence of causal intuitions is a recurrent theme in this book because people are prone to apply causal thinking inappropriately, to situations that require statistical reasoning”

- “when people believe a conclusion is true, they are also very likely to believe arguments that appear to support it, even when these arguments are unsound”
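The first of these points can be checked with a little arithmetic. The sketch below computes the exact binomial probability of seeing an "extreme" result (20% or fewer, or 80% or more, of participants agreeing) when the true rate is 50%. The thresholds, the true rate and the sample sizes are assumptions chosen purely for illustration.

```python
from math import comb

def prob_extreme(n, low=0.2, high=0.8, true_rate=0.5):
    """Exact probability that the sample proportion is <= low or >= high."""
    total = 0.0
    for k in range(n + 1):
        share = k / n
        if share <= low or share >= high:
            # Binomial probability of exactly k "yes" answers out of n
            total += comb(n, k) * true_rate**k * (1 - true_rate) ** (n - k)
    return total

p_small = prob_extreme(10)   # a typical qualitative sample size
p_large = prob_extreme(100)  # a modest quantitative sample size
print(f"n=10:  {p_small:.3f}")
print(f"n=100: {p_large:.2e}")
```

With 10 participants an extreme split turns up about 11% of the time by chance alone, while with 100 participants it is vanishingly rare.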

Using a research hypothesis primes us to think that we have found some fundamental truth about user behaviour from our qualitative research. This leads to overconfidence about what the research is saying and to poor quality research that could have been skipped in favour of simply stating our assumptions. To once again quote Kahneman: “you do not believe that these results apply to you because they correspond to nothing in your subjective experience”.

We can fix these problems by instead putting our focus on research objectives. We pay attention to the reason that we are doing the research and work to understand if the results we get could help us with our objectives.

This does not get us off the hook however because we can still create poor research objectives.

Let’s look back at one of our prior hypothesis examples and try to find effective research objectives instead.

Example objectives: deciding on filters

In thinking about the colour filter we might imagine that this fits into a larger project where we are trying to decide what filters we should implement. This is decidedly different research to trying to decide what order to implement filters in or understand how they should work. In this case perhaps we have limited resources and just want to decide what to implement first.

A good approach would be quantitative research designed to produce some sort of ranking. But we should not dismiss qualitative research for this particular project – provided our assumptions are well defined.

Let’s consider this research objective: Understand how users might map their needs against the products that we offer. There are three key aspects to this objective:

- “Understand” is a common form of research objective and is a way that qualitative research can discover things that we cannot find with quant. If we don’t yet understand some user attitude or behaviour we cannot quantify it. By focusing our objective on understanding we are looking at uncovering unknowns.

- By using the word “might” we are not definitively stating that our research will reveal all of the ways that users think about their needs.

- Our focus is on understanding the users’ mental models. Then we are not designing for what users say that they want and we aren’t even designing for existing behaviour. Instead we are designing for some underlying need.

The next step is to look at the assumptions that we are making. One assumption is that mental models are roughly the same between most people. So even though different users may have different problems, for the most part people tend to think about solving problems with the same mental machinery. As we do more research we might discover that this assumption is not true and that there are distinctly different kinds of behaviours. Perhaps we know what those are in advance and can recruit our research participants in a way that covers those distinct behaviours.

Another assumption is that if we understand our users’ mental models we will be able to design a solution that will work for most people. There are of course more assumptions we could map but this is a good start.

Now let’s look at another research objective: Understand why users choose particular filters. Again we are looking to understand something that we did not know before.

Perhaps we have some prior research that tells us what the biggest pain points are that our products solve. If we have an understanding of why certain filters are used we can think about how those motivations fit in with our existing knowledge.

Mapping objectives to our research plan

Our actual research will involve some form of asking questions and/or making observations. It’s important that we don’t simply forget about our research objectives and start writing questions. This leads to completing research and realising that you haven’t captured anything about some specific objective.

An important step is to explicitly write down all the assumptions that we are making in our research and to update those assumptions as we write our questions or instructions. These assumptions will help us frame our research plan and make sure that we are actually learning the things that we think we are learning. Consider even high level assumptions such as: a solution we design with these insights will lead to a better experience, or that a better experience is necessarily better for the user.

Once we have our main assumptions defined the next step is to break our research objective down further.

Breaking down our objectives

The best way to consider this breakdown is to think about what things we could learn that would contribute to meeting our research objective. Let’s consider one of the previous examples: Understand how users might map their needs against the products that we offer

We may have an assumption that users do in fact have some mental representation of their needs that aligns with the products they might purchase. An aspect of this research objective is to understand whether or not this is true. So two sub-objectives may be to (1) understand why users actually buy these sorts of products (if at all), and (2) understand how users go about choosing which product to buy.

Next we might want to understand what our users’ needs actually are or, if we already have research about this, understand which particular needs apply to our research participants and why.

And finally we would want to understand what factors go into addressing a particular need. We may leave this open ended or even show participants attributes of the products and ask which ones address those needs and why.

Once we have a list of sub-objectives we could continue to drill down until we feel we’ve exhausted all the nuances. If we’re happy with our objectives the next step is to think about what responses (or observations) we would need in order to answer those objectives.

It’s still important that we ask open ended questions and see what our participants say unprompted. But we also don’t want our research to be so open that we never actually make any progress on our research objectives.

Reviewing our objectives and pilot studies

At the end it’s important to review every task, question, scenario, etc. and see which research objectives are being addressed. This is vital to make sure that your planning is worthwhile and that you haven’t missed anything.
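This review can be done by hand, but a small script makes gaps harder to miss. The sketch below is one possible way to do it: the objectives, prompts and the `review_coverage` helper are all hypothetical examples, not part of any tool.

```python
def review_coverage(objectives, plan):
    """Return objectives that no plan item addresses, and plan items
    that are not tied to any known objective."""
    addressed = {obj for item in plan for obj in item["objectives"]}
    uncovered = [obj for obj in objectives if obj not in addressed]
    orphaned = [
        item["prompt"]
        for item in plan
        if not any(obj in objectives for obj in item["objectives"])
    ]
    return uncovered, orphaned

# Hypothetical sub-objectives and discussion-guide items
objectives = [
    "why users buy these products",
    "how users choose which product to buy",
    "which needs apply to participants",
]
plan = [
    {"prompt": "Tell me about the last time you bought one of these.",
     "objectives": ["why users buy these products"]},
    {"prompt": "Show me how you would pick between these two.",
     "objectives": ["how users choose which product to buy"]},
    {"prompt": "What is your favourite colour?",
     "objectives": []},
]

uncovered, orphaned = review_coverage(objectives, plan)
print("Objectives with no question:", uncovered)
print("Questions with no objective:", orphaned)
```

Here the third sub-objective has no question behind it, and the last question serves no objective, so both would be flagged before the sessions start.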

If there’s time it’s also useful to run a pilot study and analyse the responses to see if they help to address your objectives.

Plan accordingly

It should be easy to see why research hypotheses are not suitable for most qualitative research. While it is possible to create suitable hypotheses, they will more often than not lead to poor quality research. This is because hypotheses create the impression that qualitative research can find things that generalise to the entire user base. In general this is not true for the sample sizes typically used for qualitative research, and it is generally not the reason that we do qualitative research in the first place.

Instead we should focus on producing effective research objectives and making sure every part of our research plan maps to a suitable objective.

Hypothesis Testing in the User Experience

It’s something we have all completed and, if you have kids, something you might see each year at the school science fair.

- Does an expensive baseball travel farther than a cheaper one?

- Which melts an ice block quicker, salt water or tap water?

- Does changing the amount of vinegar affect the color when dyeing Easter eggs?

While the science project might be relegated to the halls of elementary schools or your fading childhood memory, it provides an important lesson for improving the user experience.

The science project provides us with a template for designing a better user experience. Form a clear hypothesis, identify metrics, and collect data to see if there is evidence to refute or confirm it. Hypothesis testing is at the heart of modern statistical thinking and a core part of the Lean methodology.

Instead of approaching design decisions with pure instinct and arguments in conference rooms, form a testable statement, invite users, define metrics, collect data and draw a conclusion.

- Does requiring the user to double enter an email result in more valid email addresses?

- Will labels on the top of form fields or the left of form fields reduce the time to complete the form?

- Does requiring the last four digits of your Social Security Number improve application rates over asking for a full SSN?

- Do users have more trust in the website if we include the McAfee security symbol or the Verisign symbol?

- Do more users make purchases if the checkout button is blue or red?

- Does a single long form generate higher form submissions than the division of the form on three smaller pages?

- Will users find items faster using mega menu navigation or standard drop-down navigation?

- Does the number of monthly invoices a small business sends affect which payment solution they prefer?

- Do mobile users prefer to download an app to shop for furniture or use the website?

Each of the above questions is testable and comes from a real example. It’s best to have as specific a hypothesis as possible and isolate the variable of interest. Many of these hypotheses can be tested with a simple A/B test, unmoderated usability test, survey or some combination of them all.

Even before you collect any data, there is an immediate benefit gained from forming hypotheses. It forces you and your team to think through the assumptions in your designs and business decisions. For example, many registration systems require users to enter their email address twice. If an email address is wrong, in many cases a company has no communication with a prospective customer.

Requiring two email fields would presumably reduce the number of mistyped email addresses. But just like legislation can have unintended consequences, so do rules in the user interface. Do users just copy and paste their email, thus negating the double fields? If you then disable the pasting of email addresses into the field, does this lead to more form abandonment and fewer customers overall?

With a clear hypothesis to test, the next step involves identifying metrics that help quantify the experience. Like most tests, you can use a simple binary metric (yes/no, pass/fail, convert/didn’t convert). For example, you could collect how many users registered using the double email vs. the single email form, how many submitted using the last four numbers of their SSN vs. the full SSN, and how many found an item with the mega menu vs. the standard menu.

Binary metrics are simple, but they usually can’t fully describe the experience. This is why we routinely collect multiple metrics, both performance and attitudinal. You can measure the time it takes users to submit alternate versions of the forms, or the time it takes to find items using different menus. Rating scales and forced ranking questions are good ways of measuring preferences for downloading apps or choosing a payment solution.

With a clear research hypothesis and some appropriate metrics, the next steps involve collecting data from the right users and analyzing the data statistically to test the hypothesis. Technically we rework our research hypothesis into what’s called the Null Hypothesis, then look for evidence against the Null Hypothesis, usually in the form of the p-value. This is of course a much larger topic we cover in Quantifying the User Experience.
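As a rough illustration of that last step, the sketch below runs a two-proportion z-test on the double email question. The Null Hypothesis is that both forms produce the same rate of valid addresses. The counts are invented for the example, and the z-test is only one of several tests that could be used for a binary metric.

```python
import math

def two_proportion_z_test(success_a, n_a, success_b, n_b):
    """Two-sided z-test of the Null Hypothesis that both rates are equal."""
    p_a = success_a / n_a
    p_b = success_b / n_b
    # Pooled rate: the best estimate of the shared rate if the Null is true
    pooled = (success_a + success_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    # Two-sided p-value from the standard normal distribution
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value

# Hypothetical data: valid email addresses out of 500 registrations per form
z, p_value = two_proportion_z_test(412, 500, 441, 500)  # single vs. double field
print(f"z = {z:.2f}, p = {p_value:.4f}")
```

With these made-up numbers the p-value falls below the conventional 0.05 threshold, so the data would count as evidence against the Null Hypothesis. Real studies should fix the sample size and threshold before collecting data.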

While the process of subjecting data to statistical analysis intimidates many designers and researchers (recalling those school memories again), remember that the hardest and most important part is working with a good testable hypothesis. It takes practice to convert fuzzy business questions into testable hypotheses. Once you’ve got that down, the rest is mechanics that we can help with.

5 rules for creating a good research hypothesis

UserTesting

A hypothesis is a proposed explanation made on the basis of limited evidence. It is the starting point for further investigation of something that piques your curiosity.

A good hypothesis is critical to creating a measurable study with successful outcomes. Without one, you’re stumbling through the fog and merely guessing which direction to travel in. It’s an especially critical step in A/B and Multivariate testing.

Every user research study needs clear goals and objectives, and a hypothesis is essential for this to happen. Writing a good hypothesis looks like this:

1: Problem : Think about the problem you’re trying to solve and what you know about it.

2: Question : Consider which questions you want to answer.

3: Hypothesis : Write your research hypothesis.

4: Goal : State one or two SMART goals for your project (specific, measurable, achievable, relevant, time-bound).

5: Objective : Draft a measurable objective that aligns directly with each goal.

In this article, we will focus on writing your hypothesis.

Five rules for a good hypothesis

1: A hypothesis is your best guess about what will happen. A good hypothesis says, "this change will result in this outcome." The "change" means a variation of an element, for example manipulating the label, color, text, etc. The "outcome" is the measure of success or the metric, such as click-through rate, conversion, etc.

2: Your hypothesis may be wrong—just learn from it. The initial hypothesis might be quite bold, such as “Variation B will result in 40% conversion over variation A”. If the conversion uptick is only 35% then your hypothesis is false. But you can still learn from it.

3: It must be specific. Explicitly stating values is important. Be bold, but not unrealistic. You must believe that what you suggest is indeed possible. When possible, be specific and assign numeric values to your predictions.

4: It must be measurable. The hypothesis must lead to concrete success metrics for the key measure. If you choose to evaluate click through, then measure clicks. If looking for conversion, then measure conversion, even if on a subsequent page. If measuring both, state in the study design which is more important, click through or conversion.

5: It should be repeatable. With a good hypothesis you should be able to run multiple different experiments that test different variants. And when retesting these variants, you should get the same results. If you find that your results are inconsistent, then re-evaluate prior versions and try a different direction.

How to structure your hypothesis

Any good hypothesis has two key parts, the variant and the result.

First, state which variant will be affected. Only state one (A, B, or C) or the recipe if multivariate (A & B). Be sure that you’ve recorded each version of variant testing in your documentation for clarity. Also, be sure to include detailed descriptions of flows or processes for the purpose of re-testing.

Next, state the expected outcome. “Variant B will result in a 40% higher rate of course completion.” After the hypothesis, be sure to specifically document the metric that will measure the result - in this case, completion. Leave no ambiguity in your metric.

Remember, always use a "control" when testing. The control is a factor that will not change during testing. It will be used as a benchmark to compare the results of the variants. The control is generally the current design in use.

A good hypothesis begins with data. Whether the data is from web analytics, user research, competitive analyses, or your gut, a hypothesis should start with data you want to better understand.

It should make sense, be easy to read without ambiguity, and be based on reality rather than pie-in-the-sky thinking or simply shooting for a company KPI or objectives and key results (OKR).

The data that results from a hypothesis is incremental and yields small insights to be built over time.

Hypothesis example

Imagine you run an ecommerce website and are trying to better understand your customer's journey. Based on data and insights gathered, you noticed that many website visitors are struggling to locate the checkout button at the end of their journey. You find that 30% of visitors abandon the site with items still in the cart.

You are trying to understand whether changing the checkout icon on your site will increase checkout completion.

The shopping bag icon is variant A, the shopping cart icon is variant B, and the checkmark is the control (the current icon you are using on your website).

Hypothesis: The shopping cart icon (variant B) will increase checkout completion by 15%.

After exposing users to 3 different versions of the site, with the 3 different checkout icons, the data shows...

- 55% of visitors shown the checkmark (control), completed their checkout.

- 70% of visitors shown the shopping bag icon (variant A), completed their checkout.

- 73% of visitors shown the shopping cart icon (variant B), completed their checkout.

The results show evidence that a change in the icon led to an increase in checkout completion. Now we can take these insights further with statistical testing to see if these differences are statistically significant. Variant B was greater than our control by 18 percentage points, but is that difference significant enough to completely abandon the checkmark? Variant A and B both showed an increase, but which is better between the two? This is the beginning of optimizing our site for a seamless customer journey.
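One detail worth pinning down before declaring a winner is what "increase by 15%" means. The sketch below uses the completion rates from the example to show the difference between an absolute lift (percentage points) and a relative lift (percent of the control rate). The helper name and the two readings of the 15% target are assumptions made for illustration.

```python
def lifts(control_rate, variant_rate):
    """Return (absolute lift in rate points, relative lift vs. control)."""
    absolute = variant_rate - control_rate
    relative = absolute / control_rate
    return absolute, relative

CONTROL = 0.55  # checkmark (control)
variants = {
    "A (shopping bag)": 0.70,
    "B (shopping cart)": 0.73,
}
TARGET = 0.15  # the hypothesised increase, read either way

for name, rate in variants.items():
    absolute, relative = lifts(CONTROL, rate)
    print(
        f"Variant {name}: +{absolute * 100:.0f} points absolute, "
        f"+{relative:.0%} relative, "
        f"meets target (absolute): {absolute >= TARGET - 1e-9}, "
        f"meets target (relative): {relative >= TARGET}"
    )

abs_b, rel_b = lifts(CONTROL, variants["B (shopping cart)"])
```

Variant B clears the 15% target under both readings here, but the two numbers differ a lot (18 points versus roughly 33%), so the hypothesis should state which one it means.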

Quick tips for creating a good hypothesis

- Keep it short—just one clear sentence

- State the variant you believe will “win” (include screenshots in your doc background)

- State the metric that will define your winner (a download, purchase, sign-up … )

- Avoid adding attitudinal metrics with words like “because” or “since”

- Always use a control to measure against your variant

About the author(s)

With UserTesting’s on-demand platform, you uncover ‘the why’ behind customer interactions. In just a few hours, you can capture the critical human insights you need to confidently deliver what your customers want and expect.

The Complete Guide To UX Research (User Research)

UX Research is a term that has been trending in the past few years. There's no surprise why it's so popular - User Experience Research is all about understanding your customer and their needs, which can help you greatly improve your conversion rate and user experience on your website. In this article, we're going to provide a complete guide to UX research as well as how to start implementing it in your organisation. Throughout this article we will give you a complete high-level overview of what UX Research means, supported by more in-depth articles for each topic.

Introduction to UX Research

Whether you're a grizzled UX Researcher who's been in the field for decades or a UX Novice who's just getting started, UX Research is an integral aspect of the UX Design process. Before diving into this article on UX research methods and tools, let's first take some time to break down what UX research actually entails.

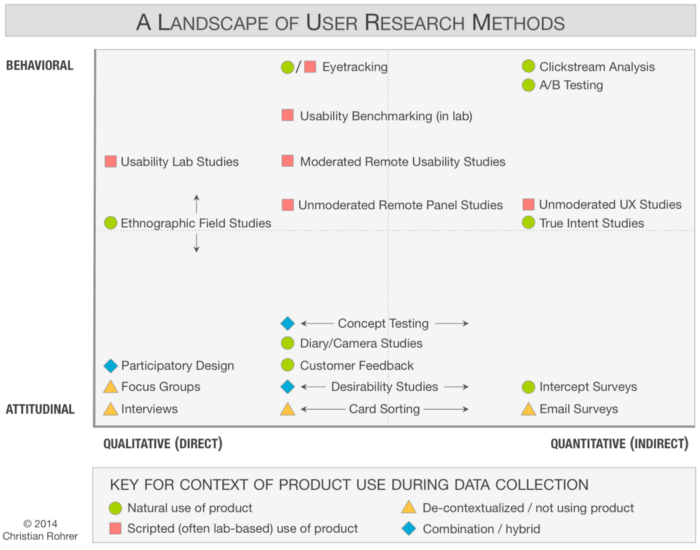

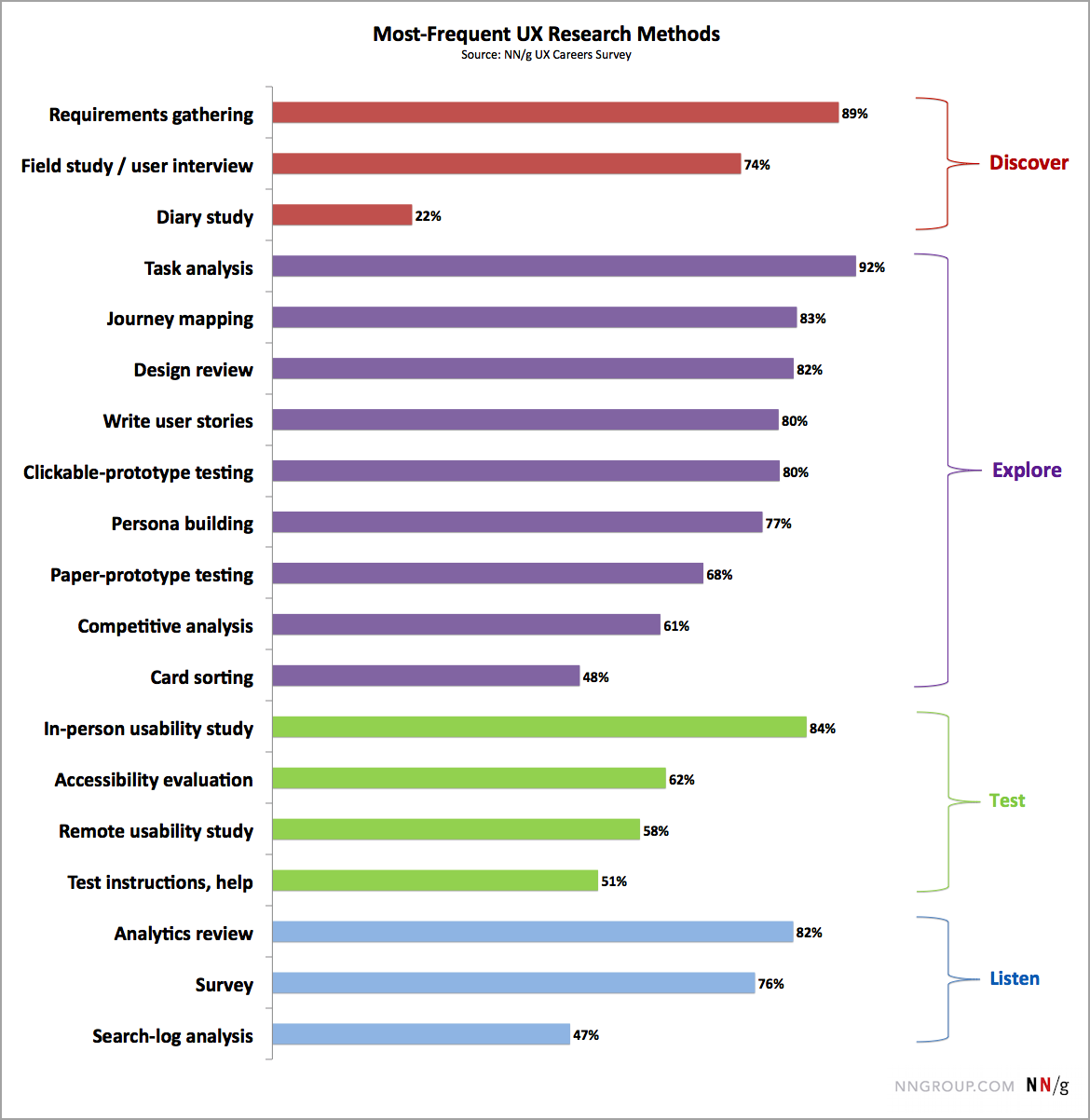

Each of these UX Research Methods has its own strengths and weaknesses, so it's important to understand your goals for the UX Research activities you want to complete.

What is UX Research?

UX research begins with UX designers and UX researchers studying the real world needs of users. User Experience Research is a process --it's not just one thing-- that involves collecting data, conducting interviews, usability testing prototypes or website designs with human participants in order to deeply understand what people are looking for when they interact with a product or service.

By using different sorts of user-research techniques you can better understand not only people's desires from their product or service, but also a deeper human need, which can serve as an incredibly powerful opportunity.

There's an incredible amount of different sorts of research methods. Most of them can be divided in two camps: Qualitative and Quantitative Research.

Qualitative Research focusses on understanding needs, which can be accomplished through observation, in-depth interviews and ethnographic studies. Quantitative Research focusses more on the numbers, analysing data and collecting measurable statistics.

Within these two groups there's an incredible amount of research activities such as Card Sorting, Competitive Analysis, User Interviews, Usability Tests, Personas & Customer Journeys and many more. We've created The Curated List of Research Techniques to always give you an up-to-date overview.

Why is UX Research so important?

When I started my career as a digital designer over 15 years ago, I felt like I was always hired to design the client's idea. Simply translate what they had in their head into a UI without even thinking about changing the user experience. Needless to say: this is a recipe for disaster. And no, this isn't a "Clients don't know anything" story. Nobody knows! At least in the beginning. The client had "the perfect idea" for a new digital feature. The launch date was already set and the development process had to start as soon as possible.

When the feature launched, we expected support might get a few questions or even receive a few thank-you emails. We surely must've affected the user experience somehow!

But that didn't happen. Nothing happened. The feature wasn't used.

Because nobody needed it.

This is exactly what happens when you skip user experience research because you think you're solving a problem that "everybody" has, but nobody really does.

Conducting User Experience research can help you to have a better understanding of your stakeholders and what they need. This is incredibly valuable information from which you can create personas and customer journeys. It doesn't matter if you're creating a new product or service or are improving an existing one.

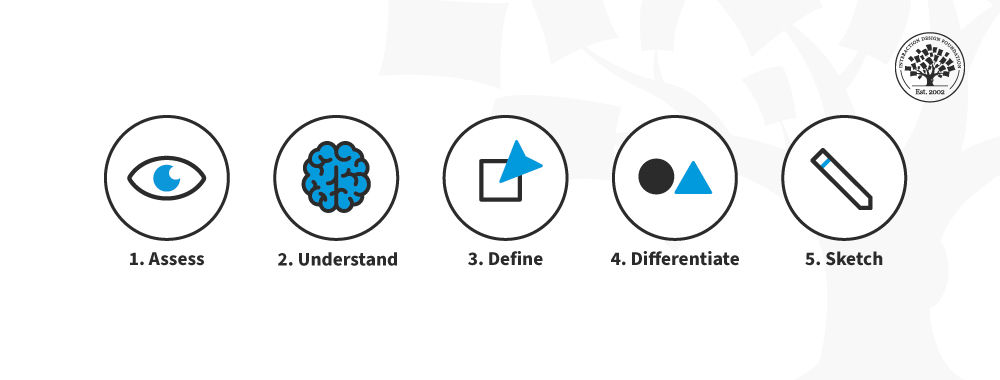

Five Steps for conducting User Research

Created by Erin Sanders, the Research Learning Spiral provides five main steps for your user research.

- Objectives: What are the knowledge gaps we need to fill?

- Hypotheses: What do we think we understand about our users?

- Methods: Based on time and manpower, what methods should we select?

- Conduct: Gather data through the selected methods.

- Synthesize: Fill in the knowledge gaps, prove or disprove our hypotheses, and discover opportunities for our design efforts.

1: Objectives: Define the Problem Statement

A problem statement is a concise description of an issue to be addressed or a condition to be improved upon. It identifies the gap between the current (problem) state and desired (goal) state of a process or product.

Problem statements are the first steps in your research because they help you to understand what's wrong or needs improving. For example, if your product is a mobile app and the problem statement says that customers are having difficulty paying for items within the application, then UX research will lead you (hopefully) down that path. Most likely it will involve some form of usability testing.

Check out this article if you'd like to learn more about Problem Statements.

2: Hypotheses: What we think we know about our user groups

After getting your Problem Statement right, there's one more thing to do before doing any research. Make sure you have created a clear research goal for yourself. How do you identify Research Objectives? By asking questions:

- Who are we doing this for? The starting point for your personas!

- What are we doing? What's happening right now? What do our users want? What does the company need?

- Think about When. If you're creating a project plan, you'll need a timeline. It also helps to keep in mind when people are using your products or service.

- Where is the logical next step. Where do people use your product? Why there? What limitations are there to that location? Where can you perform research? Where do your users live?

- Why are we doing this? Why should or shouldn't we be doing this? Why teaches you all about motivations from people and for the project.

- Last but not least: How? Besides thinking about the research activities themselves, think about how people will test a product or feature. How will the user insights (the outcome of the research) be used in the User Centered Design and development process?

3: Methods: Choose the right research method

UX research is about exploration, and you want to make sure that your method fits the needs of what you're trying to explore. There are many different methods. In a later chapter we'll go over the most common UX research methods.

For now, all you need to keep in mind is that there are a lot of different ways of doing research.

You definitely don't need to do every type of activity but it would be useful to have a decent understanding of the options you have available, so you pick the right tools for the job.

4: Conduct: Putting in the work

Apply your chosen user research methods to your Hypotheses and Objectives! The various techniques used by the senior product designer in the BTNG Design Process can definitely be overwhelming. The product development process is not a straight line from A to B. UX Researchers often discover new qualitative insights in the user experience due to uncovering new (or incorrect) user needs. So please do understand that UX Design is a lot more than simply creating a design.

5: Synthesise: Evaluating Research Outcome

So you started with your Problem Statement (Objectives), you drafted your hypotheses, chose the top research methods, conducted your research as stated in the research process and now "YOU ARE HERE".

The last step is to Synthesise what you've learned. Start by filling in the knowledge gaps. What unknowns are you now able to answer?

Which of your hypotheses are proven (or disproven)?

And lastly, which exciting new opportunities did you discover?

Evaluating the outcome of the User Experience Research is an essential part of the work.

Make sure to keep your findings brief and to-the-point. A good rule of thumb is to include the top three positive comments and the top three problems.

UX Research Methods

Choosing the right UX research method

Making sure you use the right types of user experience research in any project is essential. Since time and money are always limited, we need to make sure we always get the most bang-for-our-buck. This means we need to pick the UX research method that will give us the most insights possible for a project.

Three things to keep in mind when making a choice among research methodologies:

- Stages of the product life cycle - Is it a new or existing product?

- Quantitative vs. Qualitative - In depth talk directly with people or data?

- Attitudinal vs. Behavioural - What people say vs what people do

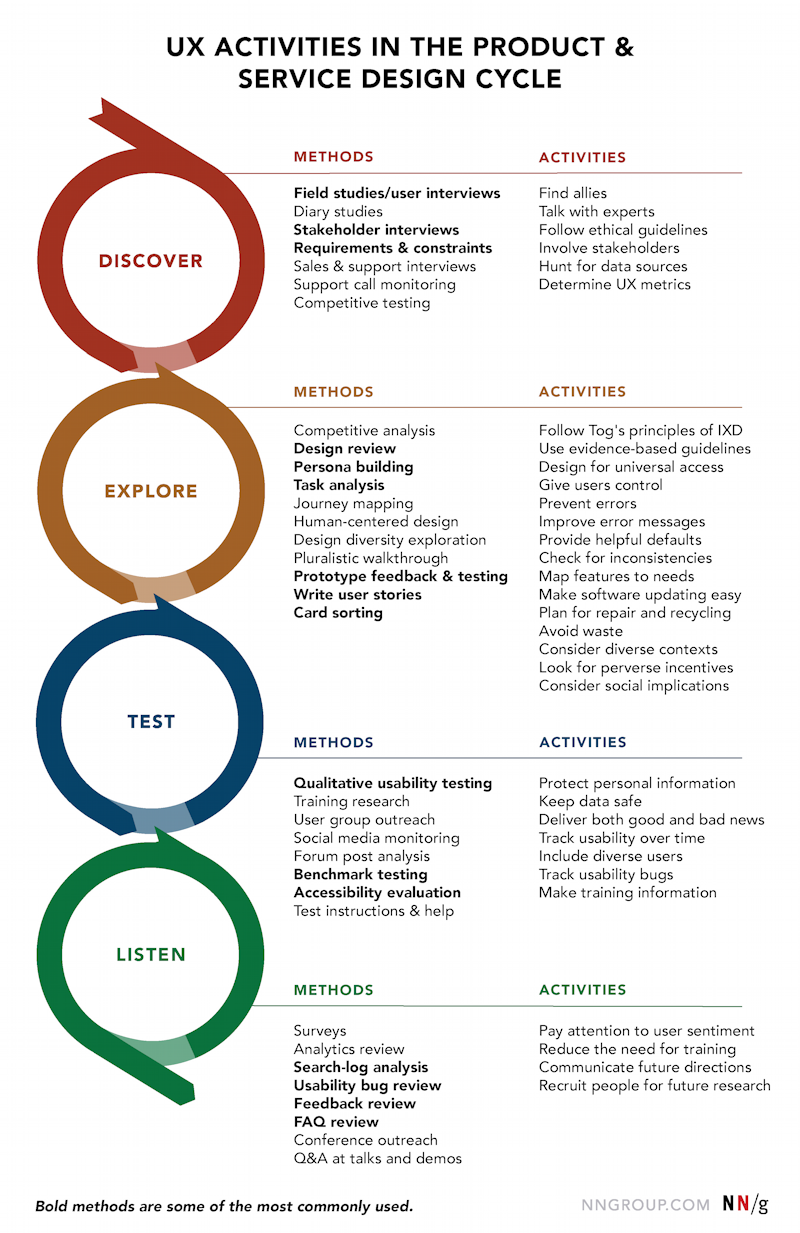

Image from Nielsen Norman Group

Most frequently used methods of UX Research

- Card Sorting: Way before UX Research even was a "thing", psychological research originally used Card Sorting. With Card Sorting, you try to find out how people group things and what sort of hierarchies they use. The BTNG Research Team is specialised in remote research. So our modern Card Sorting user experience research has a few modern surprises.

- Usability Testing: Before launching a new feature or product it is important to do user testing. Give participants tasks to complete, see how well the prototype works and learn more about user behaviours.

- Remote Usability Testing: During the COVID-19 lockdown, finding the appropriate UX research methods hasn't always been that easy. Luckily, we've adopted plenty of modern solutions that help us with collecting customer feedback even with a remote usability test.

- Research-Based User Personas: A profile of a fictional character representing a specific stakeholder relevant to your product or service. Combine goals and objections with attitude and personality. The BTNG Research Team creates these personas for the target users after conducting both quantitative and qualitative user research.

- Field Studies: Yes, we actually like to go outside. What if your product isn't a B2B desktop application which is being used behind a computer during office hours? At BTNG we have different types of Field Studies which all help you gain valuable insights into human behaviour and the user experience.

- The Expert Interview: Combine your talent with that of one of BTNG's senior researchers. Conducting UX research without talking to the experts on your team would be a waste of time. In every organisation there are people who know a lot about their product or service and have unique insights. We always like to include them in the UX Research!

- Eye Movement Tracking: If you have an existing digital experience up and running - Eye Movement Tracking can help you to identify user experience challenges in your funnel. The outcome shows a heatmap of where the user looks (and doesn't).

Check out this article for an in-depth guide on UX Research Methods.

Qualitative vs. Quantitative UX research methods

Since this is a topic that we can go on about for hours, we decided to split this section up into a few parts. First let's start with the difference.

Qualitative UX Research is based on an in-depth understanding of human behaviour and needs. Qualitative user research includes interviews, observations (in natural settings), usability tests or contextual inquiry. More often than not you'll obtain unexpected, valuable insights through this form of user experience research.

Quantitative UX Research relies on statistical analysis to make sense of data (quantitative data) gathered from UX measurements such as A/B tests and surveys. Quantitative UX Research is, as you might have guessed, a lot more data-orientated.

If you'd like to learn more about these two types of research, check out these articles:

Get the most out of your User Research with Qualitative Research

Quantitative Research: The Science of Mining Data for Insights

Balancing qualitative and quantitative UX research

Both types of research have amazing benefits but also challenges. Depending on the research goal, it is wise to have a good understanding of which types of research you would like to include in the UX design process and which would make the most impact.

The BTNG Research Team loves to start with Qualitative Research to first get a better understanding of the WHY and gain new insights. To validate these new learnings they use Quantitative Research in your user experience research.

A handful of helpful UX Research Tools

The landscape of UX research tools has been growing rapidly. The BTNG Research team use a variety of UX research tools to help with, well, almost everything: from running usability tests and creating prototypes to recruiting participants.

In the not-too-distant future, we'll create a Curated UX Research Tool article. For now, a handful of helpful UX Research Tools should do the trick.

- For surveys: Typeform

- For UX research recruitment: Dscout

- For analytics and heatmaps: VWO

- For documenting research: Notion & Airtable

- For customer journey management: TheyDo

- For transcriptions: Descript

- For remote user testing: Maze

- For calls: Zoom

Surveys: Typeform

What does it do? Survey forms can be boring. Typeform is one of those UX research tools that helps you create beautiful surveys with customisable templates and an online editor. For example, you can add videos to your survey or even let people draw their answers instead of typing them in a text box.

Who is this for? Startup teams that want to quickly create engaging and modern-looking surveys but don't know how to code them themselves.

Highlights: Amazing UX, looks and feels very modern, create forms with ease that match your branding, great reports and automation.

Why is it our top pick? Stop wasting time on ux research tools with too many buttons. Always keep the goal of your ux research methods in mind. Keep things lean, fast and simple with a product with amazing UX.

https://www.typeform.com/

UX Research Recruitment: Dscout

What does it do? Dscout is a remote research platform that helps you recruit the right participants for your UX research. With a pool of 100,000+ real users, our user researchers can hop on video calls and collect data for your qualitative user research. So test the user experience of those mobile apps and collect all the data! Isn't remote research amazing?

Highlights: User Research Participant Recruitment, Live Sessions, Prototype feedback, competitive analysis, in-the-wild product discovery, field work supplementations, shopalongs.

Why is it our top pick? Finding the right people is more important than finding people fast. BTNG helps corporate clients in all types of industries, each of which requires a unique set of users every time. Dscout helps us quickly find the right people and make sure our user research is delivered on time and our research process stays intact.

https://dscout.com/

Analytics and heatmaps: VWO

What does it do? When we were helping the Financial Times, our BTNG Research Team collaborated with the FT Marketing Team, who were already running experiments with VWO. 50% of the traffic would see one version of a certain page while the other 50% saw a different version. Which performed best? Perhaps you'd take a look at time-on-page. But more importantly: which converts better?
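To make "which converts better?" concrete, here is a minimal sketch of how the two halves of a 50/50 split could be compared with a two-proportion z-test. It is not how VWO computes its reports; the function name and the visitor and conversion counts are hypothetical and only illustrate the idea.

```python
import math


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Compare the conversion rates of two page versions.

    Returns the z statistic and a two-sided p-value.
    """
    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    # Pooled rate under the assumption that both versions convert equally well.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (rate_b - rate_a) / std_err
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical experiment: 2,400 visitors per version.
z, p_value = two_proportion_z_test(conv_a=120, n_a=2400, conv_b=156, n_b=2400)
print(f"Version A: {120 / 2400:.1%}, Version B: {156 / 2400:.1%}")
print(f"z = {z:.2f}, p = {p_value:.3f}")
```

A small p-value suggests the difference in conversion is unlikely to be noise alone. With small samples or many simultaneous experiments, a more careful analysis would be needed.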

Hotjar provides Product Experience Insights that show how users behave and what they feel strongly about, so product teams can deliver real value to them.

Highlights: VWO is an amazing suite that does it all: Automated Feedback, Heatmaps, Eye Tracking, User Session Recordings (Participant Tracking) and one thing that Hotjar doesn't do: A/B Testing.

Why is it our top pick? Even though it's an expensive product, it does give you value for money. The reports with very black-and-white outcomes are especially great for presenting the results you've achieved.

https://vwo.com/

Documenting research: Notion

What does it do? Notion is our command center, where we store and constantly update our studio's aggregate wisdom. It is a super-flexible tool that helps to organise project documentation, prepare for interviews with either clients or their product users, accumulate feedback, or simply take notes.

Highlights: A very clean, structured way to write and share information with your team in a beautifully designed app with an amazing user experience.

Why is it our top pick? There's no better, more structured way to share information.

https://www.notion.so/

Customer Journey Management: TheyDo

What does it do? TheyDo is a modern Journey Management Platform. It centralises your journeys in an easy to manage system, where everyone has access to a single source of truth of the customer experience. It’s like a CMS for journeys.

Highlights: Customer Journey Map designer, Personas and 2x2 Persona Matrix, Opportunity & Solution Management & Prioritisation.

Why is it our top pick? TheyDo fits perfectly with BTNG's way of helping companies become more customer-centric. It helps to visualise the current experience of stakeholders. With the insights we capture from interviews or usability testing, we discover new opportunities. A perfect starting point for creating solutions!

https://www.theydo.io/

Transcriptions: Descript

What does it do? Descript is an all-in-one solution for audio & video recording, editing and transcription. The editing is as easy as a doc. Imagine you’ve interviewed 20 different people about a new flavor of soda or a feature for your app. You just drop all those files into a Descript Project, and they show up in different “Compositions” (documents) in the sidebar. In a couple of minutes they’ll be transcribed, with speaker labels added automatically.

Highlights: Overdub, Filler Word Removal, Collaboration, Subtitles, Remote Recording and Studio Sound.

Why is it our top pick? Descript is an absolute monster when it comes to recording, editing and transcribing videos. It truly makes digesting the work after recording fast and even fun!

https://www.descript.com/

Remote user testing: Maze

What does it do? Maze is an a-mazing remote user testing platform for unmoderated usability tests. With Maze, you can create and run in-depth usability tests and share them with your testers via a link to get actionable insights. Maze also generates a usability study report instantly so that you can share it with anyone.

It’s handy that the tool integrates directly with Figma, InVision, Marvel, and Sketch, so you can import a working prototype directly from the design tool you use. Thanks to that Figma/Maze integration, the BTNG Design Team and their Figma skills have amazing chemistry with the Research Team.

Highlights: Besides unmoderated usability testing, Maze can help with different UX Research Methods, like card sorting, tree testing, 5-second testing, A/B testing, and more.

Why is it our top pick? Usability testing has always been a time-consuming form of qualitative research. Trying to find out how users interact (task analysis) during an interview while also keeping an eye on the prototype can be... a challenge. Maze lets us run unmoderated usability tests alongside our hands-on usability tests, which is a powerful weapon in our arsenal.

https://maze.co/

Calls: Zoom

What does it do? Like other video conferencing tools, Zoom lets you run video calls. But what makes Zoom a great tool? We feel that the integration with conferencing equipment is huge for our bigger clients. Now that there's also a Miro integration, we can make our user interviews even more fun and interactive!

Highlights: Call Recording, Collaboration tools, Screen Sharing, Free trial, connects to conferencing equipment, host up to 500 people!

Why is it our top pick? Giving the research participants of your user interviews a pleasant experience is so important. Especially when you're looking for qualitative feedback on your ux design, you want to make sure they feel comfortable. And yes, you'll have to start using a paid version - but the user interface of Zoom alone is worth it. Even the Mobile App is really solid.

https://zoom.us/

In Conclusion

No matter which research methodology you rely on, whether qualitative research methods or quantitative data, keep in mind that user research is an essential part of the design process. Not only will your UX designer thank you, but so will your users.

In every UX project we've spoken to multiple users - no matter if it was a task analysis, attitudinal research or focus groups... They all had one thing in common:

People thanked us for taking the time to listen to them.

So please, stop thinking about the potential UX research methods you might use in your design process and consider what it REALLY is about:

Solving the right problems for the right people.

And there's only one way to get there: Trying things out, listening, learning and improving.

Looking for help? Reach out!

See the Nielsen Norman Group’s list of user research tips: https://www.nngroup.com/articles/ux-research-cheat-sheet/

Find an extensive range of user research considerations, discussed in Smashing Magazine: https://www.smashingmagazine.com/2018/01/comprehensive-guide-ux-research/

Here’s a convenient and example-rich catalogue of user research tools: https://blog.airtable.com/43-ux-research-tools-for-optimizing-your-product/

How to write effective UX research questions (with examples)

Collecting and analyzing real user feedback is essential in delivering an excellent user experience (UX). But not all user research is created equal—and done wrong, it can lead to confusion, miscommunication, and non-actionable results.

You need to ask the right UX research questions to get the valuable insights necessary to continually optimize your product and generate user delight.

This article shows you how to write strong UX research questions, ensuring you go beyond guesswork and assumptions. It covers the difference between open- and closed-ended research questions, explains how to go about creating your own UX research questions, and provides several examples to get you started.

The different types of UX research questions

Let’s face it, asking the right UX research questions is hard. It’s a skill that takes a lot of practice and can leave even the most seasoned UX researchers drawing a blank.

There are two main categories of UX research questions: open-ended and closed-ended, both of which are essential to achieving thorough, high-quality UX research. Qualitative research, based on descriptions and experiences, leans toward open-ended questions, whereas quantitative research leans toward closed-ended questions.

Let’s dive into the differences between them.

Open-ended UX research questions

Open-ended UX research questions are exactly what they sound like: they prompt longer, more free-form responses, rather than asking someone to choose from established possible answers—like multiple-choice tests.

Open questions are easily recognized because they:

- Usually begin with how, why, what, describe, or tell me

- Can’t be easily answered with just yes or no, or a word or two

- Are qualitative rather than quantitative

If there’s a simple fact you’re trying to get to, a closed question would work. For anything involving our complex and messy human nature, open questions are the way to go.

Open-ended research questions aim to discover more about research participants and gather candid user insights, rather than seeking specific answers.

Some examples of UX research that use open-ended questions include:

- Usability testing

- Diary studies

- Persona research

- Use case research

- Task analysis

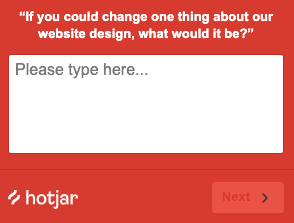

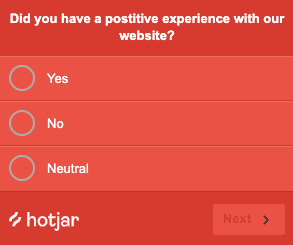

Check out a concrete example of an open-ended UX research question in action below. Hotjar’s Survey tool is a perfect way of gathering longer-form user feedback, both on-site and externally.

Pros and cons of open-ended UX research questions

Like everything in life, open-ended UX research questions have their pros and cons.

Advantages of open-ended questions include:

- Detailed, personal answers

- Great for storytelling

- Good for connecting with people on an emotional level

- Helpful to gauge pain points, frustrations, and desires

- Researchers usually end up discovering more than initially expected

- Less vulnerable to bias

Drawbacks include:

- People find them more difficult to answer than closed-ended questions

- More time-consuming for both the researcher and the participant

- Can be difficult to conduct with large numbers of people

- The answers can be challenging to dig through and analyze

Closed-ended UX research questions

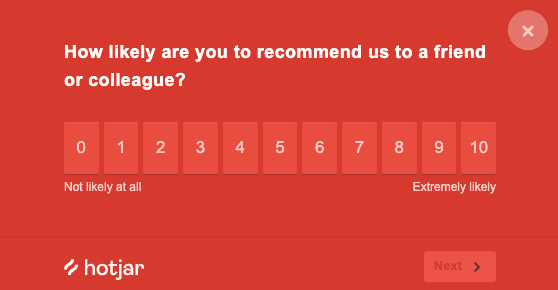

Closed-ended UX research questions have limited possible answers. Participants can respond to them with yes or no, by selecting an option from a list, by ranking or rating, or with a single word.

They’re easy to recognize because they’re similar to classic exam-style questions.
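Because the possible answers are limited, closed-ended responses are easy to turn into numbers. The sketch below shows one way that could look, using made-up answers to a hypothetical yes/no question and a hypothetical 1-to-5 rating question. The helper name `share_by_option` is an illustration and is not taken from any survey tool.

```python
from collections import Counter
from statistics import mean


def share_by_option(answers):
    """Return the fraction of respondents who picked each option."""
    counts = Counter(answers)
    total = len(answers)
    return {option: count / total for option, count in counts.items()}


# Made-up responses to "Did you find what you were looking for?"
yes_no_answers = ["yes", "no", "yes", "yes", "no"]

# Made-up responses to "How easy was checkout, from 1 (hard) to 5 (easy)?"
ratings = [4, 5, 3, 4, 4]

shares = share_by_option(yes_no_answers)
average_rating = mean(ratings)

print(shares)
print(average_rating)
```

The same counting approach works for multiple-choice questions. Ranking questions need a little more care, since the position of each option matters.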

More technical industries might start with closed UX research questions because they want statistical results. Then, we’ll move on to more open questions to see how customers really feel about the software we put together.

While open-ended research questions reveal new or unexpected information, closed-ended research questions work well to test assumptions and answer focused questions. They’re great for situations like:

Surveying a large number of participants

When you want quantitative insights and hard data to create metrics

When you’ve already asked open-ended UX research questions and have narrowed them down into closed-ended questions based on your findings