Time Series Analysis in Python – A Comprehensive Guide with Examples

- February 13, 2019

- Selva Prabhakaran

A time series is a sequence of observations recorded at regular time intervals. This guide walks you through analyzing the characteristics of a given time series in Python.

- What is a Time Series?

- How to import Time Series in Python?

- What is panel data?

- Visualizing a Time Series

- Patterns in a Time Series

- Additive and multiplicative Time Series

- How to decompose a Time Series into its components?

- Stationary and non-stationary Time Series

- How to make a Time Series stationary?

- How to test for stationarity?

- What is the difference between white noise and a stationary series?

- How to detrend a Time Series?

- How to deseasonalize a Time Series?

- How to test for seasonality of a Time Series?

- How to treat missing values in a Time Series?

- What is autocorrelation and partial autocorrelation functions?

- How to compute partial autocorrelation function?

- How to estimate the forecastability of a Time Series?

- Why and How to smoothen a Time Series?

- How to use Granger Causality test to know if one Time Series is helpful in forecasting another?

1. What is a Time Series?

Time series is a sequence of observations recorded at regular time intervals.

Depending on the frequency of observations, a time series may be hourly, daily, weekly, monthly, quarterly or annual. Sometimes you may have second-wise or minute-wise time series as well, such as the number of clicks or user visits every minute.

Why even analyze a time series?

Because it is the preparatory step before you develop a forecast of the series.

Besides, time series forecasting has enormous commercial significance because things that matter to a business, like demand and sales, the number of visitors to a website, or stock prices, are essentially time series data.

So what does analyzing a time series involve?

Time series analysis involves understanding various aspects about the inherent nature of the series so that you are better informed to create meaningful and accurate forecasts.

2. How to import time series in python?

So how to import time series data?

The data for a time series is typically stored in .csv files or other spreadsheet formats and contains two columns: the date and the measured value.

Let’s use read_csv() from the pandas package to read the time series dataset (a csv file on Australian Drug Sales) as a pandas dataframe. Adding the parse_dates=['date'] argument makes pandas parse the date column as a date field.

Alternately, you can import it as a pandas Series with the date as index. You just need to specify the index_col argument in the pd.read_csv() to do this.

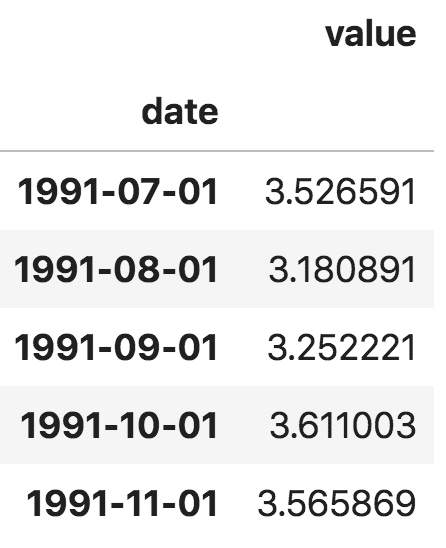

Note, in the series, the ‘value’ column is placed higher than date to imply that it is a series.
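Here is a minimal sketch of both import styles. The inline CSV text and its three rows are made up for illustration and stand in for the drug sales file. Only `read_csv`, `parse_dates` and `index_col` come from the text above.

```python
import io
import pandas as pd

# Hypothetical stand-in for the CSV file: two columns, 'date' and 'value'.
csv_text = """date,value
1991-07-01,3.5
1991-08-01,3.2
1991-09-01,3.3
"""

# Import as a dataframe, with 'date' parsed as a datetime column.
df = pd.read_csv(io.StringIO(csv_text), parse_dates=['date'])

# Import with 'date' as the index instead of a regular column.
# read_csv returns a one-column dataframe here; select the column to get a Series.
df_indexed = pd.read_csv(io.StringIO(csv_text), parse_dates=['date'], index_col='date')
ser = df_indexed['value']

print(df.dtypes)
print(ser.head())
```

With a real file, you would pass the file path or URL in place of `io.StringIO(csv_text)`.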

3. What is panel data?

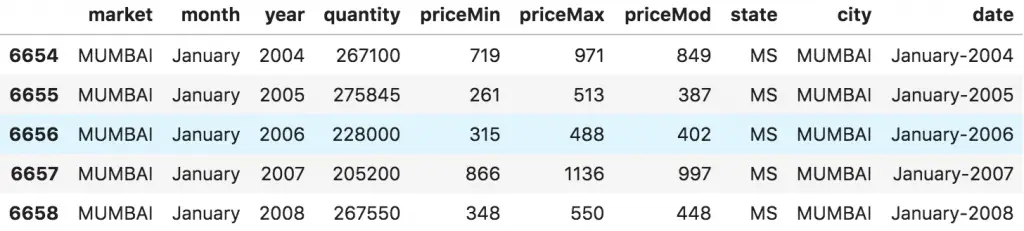

Panel data is also a time based dataset.

The difference is that, in addition to time series, it also contains one or more related variables that are measured for the same time periods.

Typically, the columns present in panel data contain explanatory variables that can be helpful in predicting the Y, provided those columns will be available at the future forecasting period.

An example of panel data is shown below.

4. Visualizing a time series

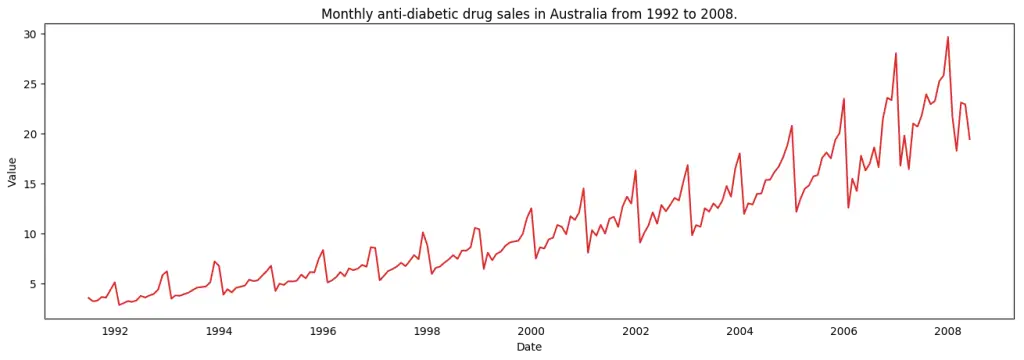

Let’s use matplotlib to visualise the series.

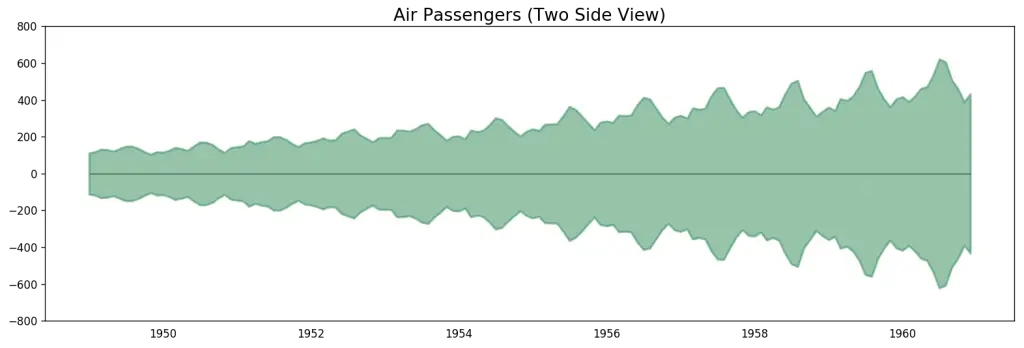

Since all values are positive, you can show this on both sides of the Y axis to emphasize the growth.

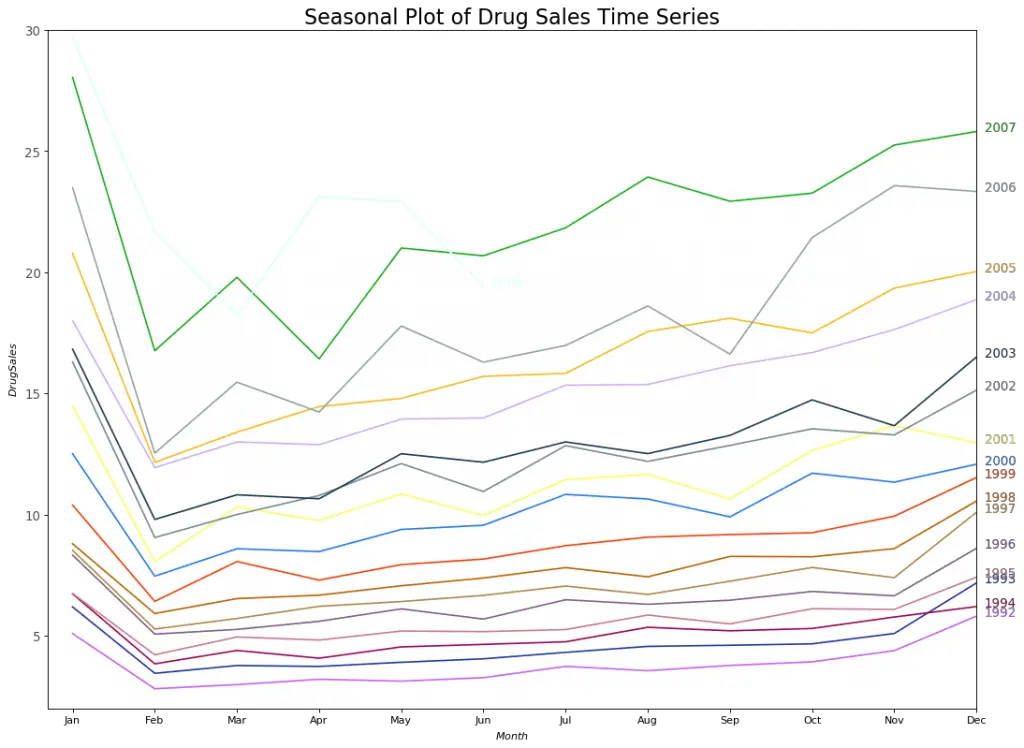

Since it’s a monthly time series and follows a certain repetitive pattern every year, you can plot each year as a separate line in the same plot. This lets you compare the year-wise patterns side by side.

Seasonal Plot of a Time Series

There is a steep fall in drug sales every February, rising again in March, falling again in April and so on. Clearly, the pattern repeats within a given year, every year.

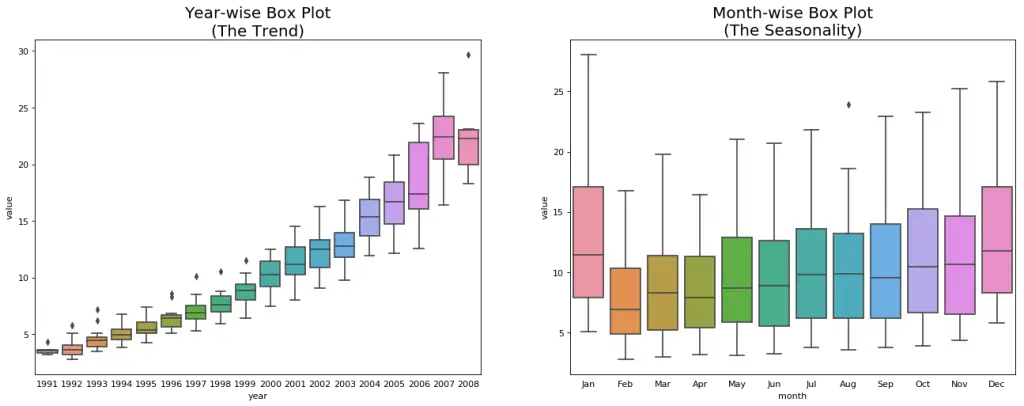

However, as the years progress, drug sales increase overall. You can visualize this trend and how it varies each year in a year-wise boxplot. Likewise, you can do a month-wise boxplot to visualize the monthly distributions.

Boxplot of Month-wise (Seasonal) and Year-wise (trend) Distribution

You can group the data at seasonal intervals and see how the values are distributed within a given year or month and how it compares over time.

The boxplots make the year-wise and month-wise distributions evident. Also, in the month-wise boxplot, the months of December and January clearly have higher drug sales, which can be attributed to the holiday discount season.
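The grouping behind such boxplots can be sketched numerically as well. The series below is synthetic (an upward trend plus a December bump) and is only an assumption standing in for the drug sales data. The column names `year` and `month` are illustrative.

```python
import numpy as np
import pandas as pd

# Synthetic monthly series (an assumption, not the drug sales data):
# upward trend plus a bump every December.
idx = pd.date_range('2000-01-01', periods=48, freq='MS')
values = np.linspace(10, 20, 48) + np.where(idx.month == 12, 5.0, 0.0)
df = pd.DataFrame({'date': idx, 'value': values})

df['year'] = df['date'].dt.year
df['month'] = df['date'].dt.month

# The year-wise summary shows the trend; the month-wise summary shows seasonality.
by_year = df.groupby('year')['value'].median()
by_month = df.groupby('month')['value'].median()

print(by_year)
print(by_month)
```

The same `year` and `month` columns can be used as the grouping variable of a boxplot.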

So far, we have seen the similarities to identify the pattern. Now, how to find out any deviations from the usual pattern?

5. Patterns in a time series

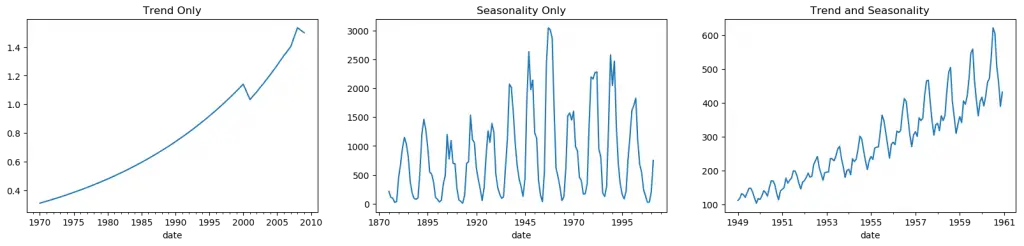

Any time series may be split into the following components: Base Level + Trend + Seasonality + Error

A trend is observed when there is an increasing or decreasing slope in the time series. Seasonality, on the other hand, is observed when there is a distinct pattern repeated at regular intervals due to seasonal factors. It could be because of the month of the year, the day of the month, weekdays or even the time of day.

However, it is not mandatory for every time series to have a trend and/or seasonality. A time series may have no distinct trend but still have seasonality. The opposite can also be true.

So, a time series may be imagined as a combination of the trend, seasonality and the error terms.

Another aspect to consider is the cyclic behaviour. It happens when the rise and fall pattern in the series does not happen in fixed calendar-based intervals. Care should be taken to not confuse ‘cyclic’ effect with ‘seasonal’ effect.

So, how to differentiate between a ‘cyclic’ and a ‘seasonal’ pattern?

If the patterns are not of fixed calendar based frequencies, then it is cyclic. Because, unlike the seasonality, cyclic effects are typically influenced by the business and other socio-economic factors.

6. Additive and multiplicative time series

Depending on the nature of the trend and seasonality, a time series can be modeled as an additive or multiplicative, wherein, each observation in the series can be expressed as either a sum or a product of the components:

Additive time series: Value = Base Level + Trend + Seasonality + Error

Multiplicative Time Series: Value = Base Level x Trend x Seasonality x Error
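To make the two forms concrete, here is a small sketch with made-up components (the error term is left out for clarity). In the additive series the seasonal swing keeps the same size, while in the multiplicative series it grows with the trend. Taking logs turns the product back into a sum.

```python
import numpy as np

t = np.arange(48)
trend = 10 + 0.5 * t                              # base level + trend
seasonal = 1 + 0.2 * np.sin(2 * np.pi * t / 12)   # seasonal index around 1

additive = trend + 10 * (seasonal - 1)   # seasonal swing has a constant size
multiplicative = trend * seasonal        # seasonal swing scales with the trend

# Size of the seasonal swing in the first and last year of each series.
add_swing = (np.ptp((additive - trend)[:12]), np.ptp((additive - trend)[-12:]))
mul_swing = (np.ptp((multiplicative - trend)[:12]), np.ptp((multiplicative - trend)[-12:]))

# log(T * S) = log(T) + log(S): a multiplicative series is additive on the log scale.
log_mult = np.log(multiplicative)

print(add_swing, mul_swing)
```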

7. How to decompose a time series into its components?

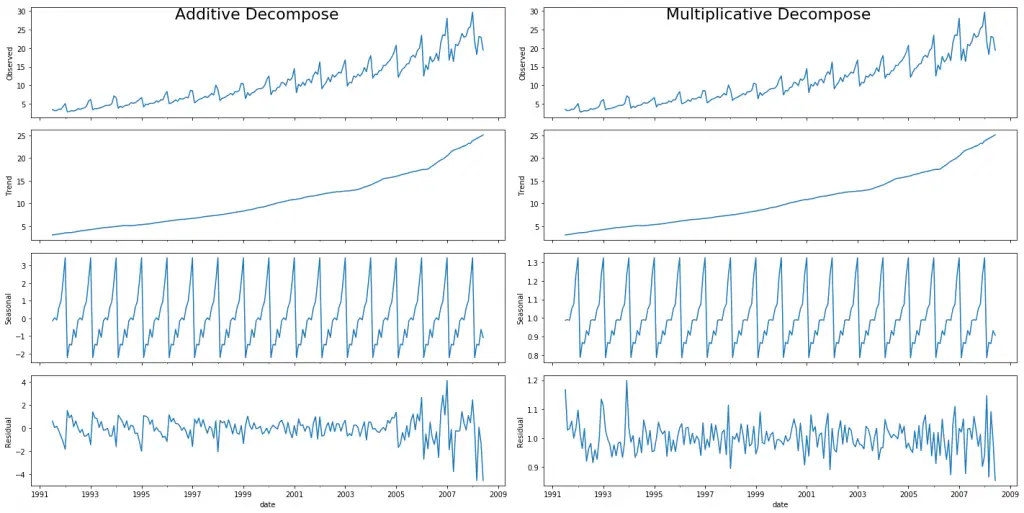

You can do a classical decomposition of a time series by considering the series as an additive or multiplicative combination of the base level, trend, seasonal index and the residual.

The seasonal_decompose in statsmodels implements this conveniently.

Setting extrapolate_trend='freq' takes care of any missing values in the trend and residuals at the beginning and end of the series.

If you look at the residuals of the additive decomposition closely, it has some pattern left over. The multiplicative decomposition, however, looks quite random which is good. So ideally, multiplicative decomposition should be preferred for this particular series.

The numerical output of the trend, seasonal and residual components is stored in the result_mul output itself. Let’s extract them and put them in a dataframe.

If you check, the product of the seas, trend and resid columns should exactly equal the actual_values.

8. Stationary and Non-Stationary Time Series

Stationarity is a property of a time series. A stationary series is one where the values of the series are not a function of time.

That is, the statistical properties of the series like mean, variance and autocorrelation are constant over time. Autocorrelation of the series is nothing but the correlation of the series with its previous values, more on this coming up.

A stationary time series is devoid of seasonal effects as well.

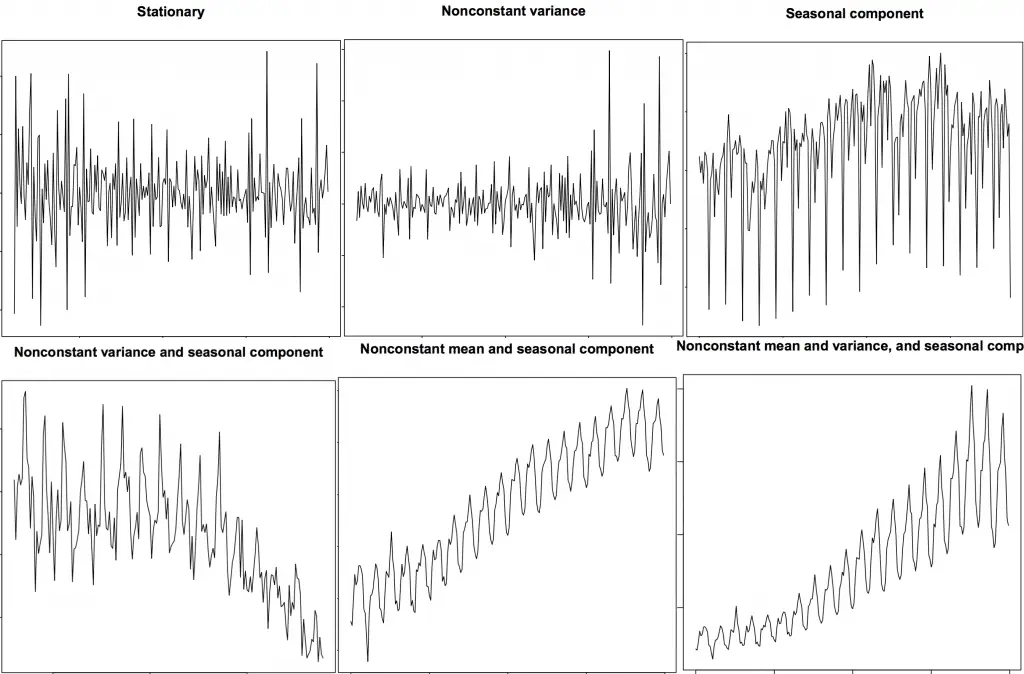

So how to identify if a series is stationary or not? Let’s plot some examples to make it clear:

The above image is sourced from R’s TSTutorial.

So why does a stationary series matter? Why am I even talking about it?

I will come to that in a bit, but understand that it is possible to make nearly any time series stationary by applying a suitable transformation. Most statistical forecasting methods are designed to work on a stationary time series. The first step in the forecasting process is typically to do some transformation to convert a non-stationary series to stationary.

9. How to make a time series stationary?

You can make a series stationary by:

- Differencing the series (once or more)

- Taking the log of the series

- Taking the nth root of the series

- A combination of the above

The most common and convenient method to stationarize the series is by differencing the series at least once until it becomes approximately stationary.

So what is differencing?

If Y_t is the value at time ‘t’, then the first difference of Y is $Y_t - Y_{t-1}$. In simpler terms, differencing the series is nothing but subtracting the previous value from the current value.

If the first difference doesn’t make a series stationary, you can go for the second differencing. And so on.
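As a quick worked example, take a short made-up series and difference it once and twice with NumPy.

```python
import numpy as np

y = np.array([1, 5, 2, 12, 20])

first_diff = np.diff(y)         # y[t] - y[t-1]
second_diff = np.diff(y, n=2)   # difference of the first difference

print(first_diff)    # [ 4 -3 10  8]
print(second_diff)   # [-7 13 -2]
```

Each round of differencing shortens the series by one observation. With pandas, `Series.diff()` does the same thing but keeps the length and puts a NaN in the first position.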

9. Why make a non-stationary series stationary before forecasting?

Forecasting a stationary series is relatively easy and the forecasts are more reliable.

An important reason is, autoregressive forecasting models are essentially linear regression models that utilize the lag(s) of the series itself as predictors.

We know that linear regression works best if the predictors (X variables) are not correlated with each other. So, stationarizing the series solves this problem since it removes any persistent autocorrelation, thereby making the predictors (lags of the series) in the forecasting models nearly independent.

Now that we’ve established that stationarizing the series is important, how do you check if a given series is stationary or not?

10. How to test for stationarity?

The stationarity of a series can be established by looking at the plot of the series like we did earlier.

Another method is to split the series into 2 or more contiguous parts and compute summary statistics like the mean, variance and autocorrelation. If the stats are quite different, then the series is not likely to be stationary.

Nevertheless, you need a method to quantitatively determine if a given series is stationary or not. This can be done using statistical tests called ‘Unit Root Tests’. There are multiple variations of this, where the tests check if a time series is non-stationary and possesses a unit root.

There are multiple implementations of Unit Root tests like:

- Augmented Dickey Fuller test (ADF Test)

- Kwiatkowski-Phillips-Schmidt-Shin – KPSS test (trend stationary)

- Phillips-Perron test (PP Test)

The most commonly used is the ADF test, where the null hypothesis is that the time series possesses a unit root and is non-stationary. So, if the P-Value in the ADF test is less than the significance level (0.05), you reject the null hypothesis.

The KPSS test, on the other hand, is used to test for trend stationarity. The null hypothesis and the P-Value interpretation are just the opposite of the ADF test. The below code implements these two tests using the statsmodels package in Python.

11. What is the difference between white noise and a stationary series?

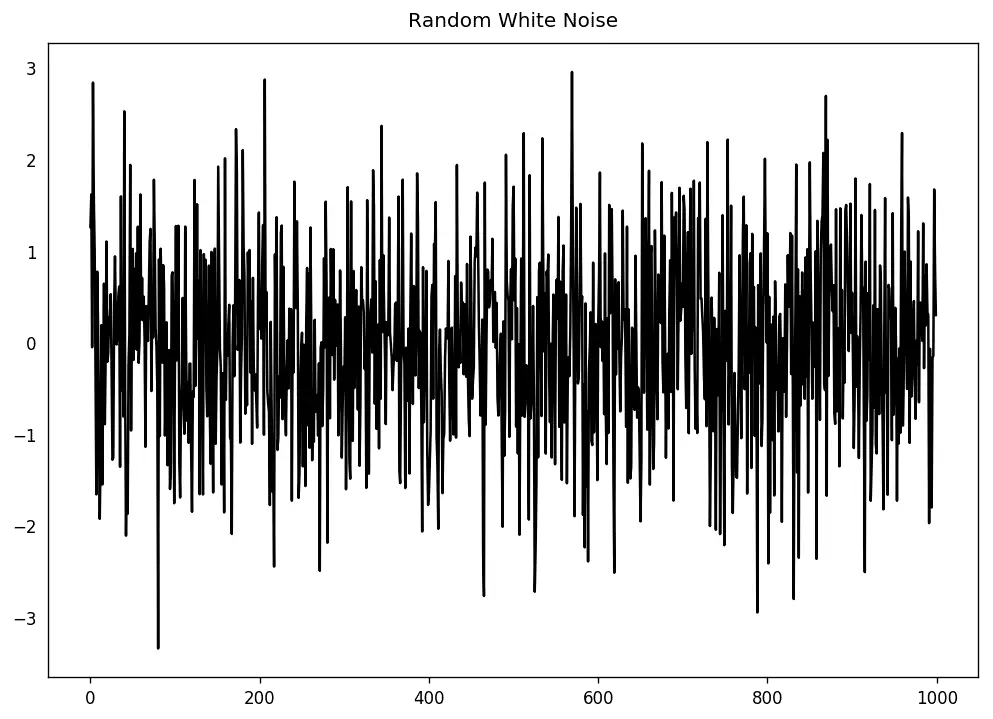

Like a stationary series, white noise is also not a function of time, that is, its mean and variance do not change over time. But the difference is that white noise is completely random with a mean of 0.

In white noise there is no pattern whatsoever. If you consider the sound signals in an FM radio as a time series, the blank sound you hear between the channels is white noise.

Mathematically, a sequence of completely random numbers with mean zero is white noise.

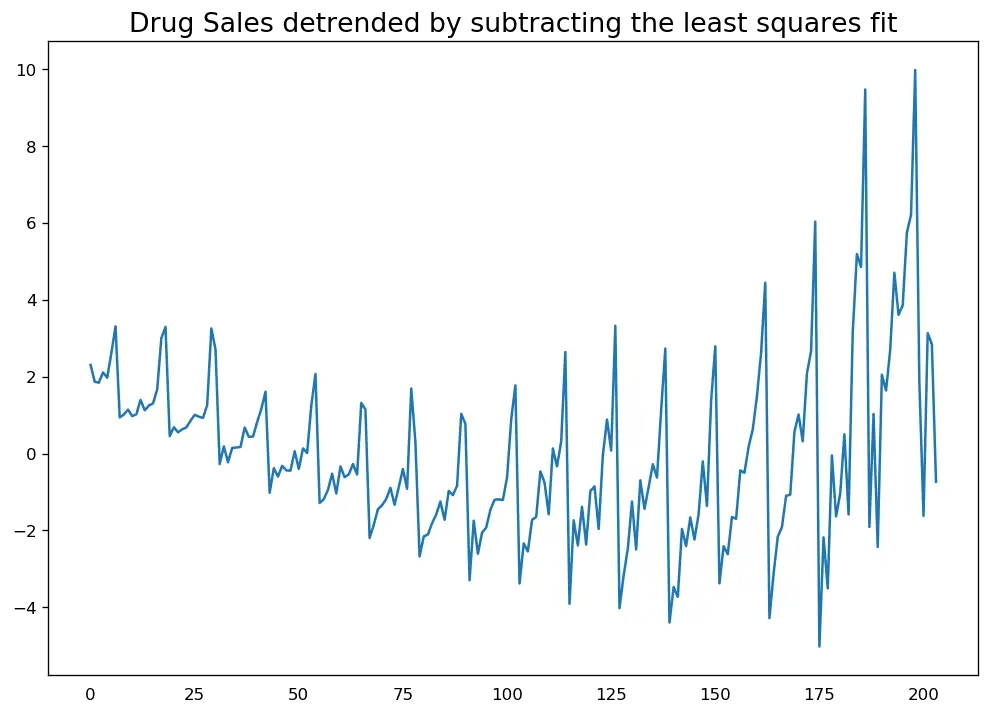

12. How to detrend a time series?

Detrending a time series is to remove the trend component from a time series. But how to extract the trend? There are multiple approaches.

- Subtract the line of best fit from the time series. The line of best fit may be obtained from a linear regression model with the time steps as the predictor. For more complex trends, you may want to use quadratic terms (x^2) in the model.

- Subtract the trend component obtained from time series decomposition we saw earlier.

- Subtract the mean

- Apply a filter like the Baxter-King filter (statsmodels.tsa.filters.bk_filter.bkfilter) or the Hodrick-Prescott filter (statsmodels.tsa.filters.hp_filter.hpfilter) to remove the moving average trend lines or the cyclical components.

Let’s implement the first two methods.
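Here is a sketch of the first approach only, subtracting the line of best fit, using `np.polyfit` on a synthetic trending series. The data and variable names are assumptions for illustration. For the second approach, you would subtract the trend component of a decomposition instead.

```python
import numpy as np

rng = np.random.default_rng(1)
t = np.arange(100)
y = 5 + 0.8 * t + rng.normal(scale=2.0, size=t.size)   # synthetic trending series

# Fit y ~ slope * t + intercept by least squares, with the time step as predictor.
slope, intercept = np.polyfit(t, y, deg=1)
trend_line = slope * t + intercept
detrended = y - trend_line

# The detrended series should have no linear slope left.
residual_slope = np.polyfit(t, detrended, deg=1)[0]
print(round(slope, 3), abs(residual_slope) < 1e-8)
```

For a curved trend, raising `deg` to 2 fits the quadratic term mentioned above.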

13. How to deseasonalize a time series?

There are multiple approaches to deseasonalize a time series as well. Below are a few:

If dividing by the seasonal index does not work well, try taking a log of the series and then do the deseasonalizing. You can later restore to the original scale by taking an exponential.
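Dividing by a seasonal index can be sketched as follows. This is a simplified version: the index here is just each calendar month's mean relative to the overall mean, computed on a synthetic series with no trend. Both are assumptions for illustration. On real data you would normally take the seasonal component from a decomposition.

```python
import numpy as np
import pandas as pd

idx = pd.date_range('2000-01-01', periods=60, freq='MS')
months = idx.month.to_numpy()
seasonal_pattern = 1 + 0.3 * np.sin(2 * np.pi * (months - 1) / 12)
series = pd.Series(100 * seasonal_pattern, index=idx)   # level 100, no trend

# Seasonal index: mean of each calendar month relative to the overall mean.
month_means = series.groupby(series.index.month).transform('mean')
seasonal_index = month_means / series.mean()

deseasonalized = series / seasonal_index
print(deseasonalized.round(6).unique())
```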

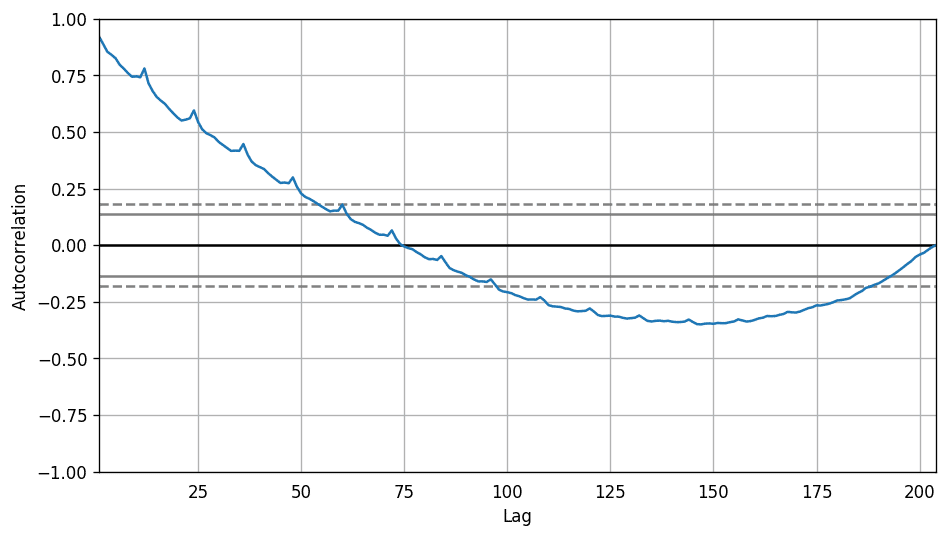

14. How to test for seasonality of a time series?

The common way is to plot the series and check for repeatable patterns in fixed time intervals. So, the types of seasonality are determined by the clock or the calendar:

- Hour of day

- Day of month

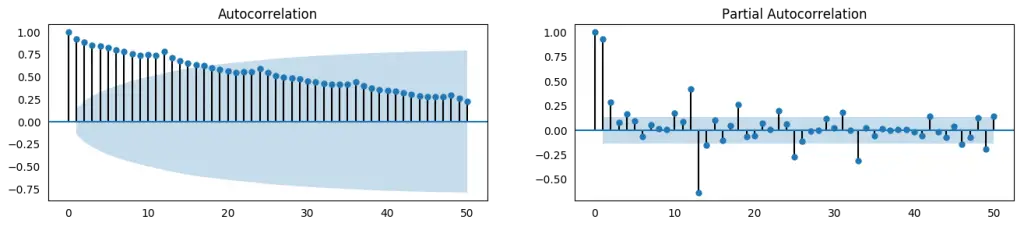

However, if you want a more definitive inspection of the seasonality, use the Autocorrelation Function (ACF) plot. More on the ACF in the upcoming sections. But when there is a strong seasonal pattern, the ACF plot usually reveals definitive repeated spikes at the multiples of the seasonal window.

For example, the drug sales time series is a monthly series with patterns repeating every year. So, you can see spikes at 12th, 24th, 36th.. lines.

I must caution you that in real-world datasets such strong patterns are hardly ever noticed and can get distorted by noise, so you need a careful eye to capture them.
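To see the spikes numerically rather than on a plot, you can compute the autocorrelation at a few lags with pandas. The series below is a synthetic monthly-style signal with a 12-step cycle plus noise, an assumption for illustration.

```python
import numpy as np
import pandas as pd

rng = np.random.default_rng(2)
t = np.arange(120)
series = pd.Series(np.sin(2 * np.pi * t / 12) + rng.normal(scale=0.2, size=t.size))

# Autocorrelation at selected lags; the seasonal lags (12, 24, 36) should stand out.
acf = {lag: series.autocorr(lag=lag) for lag in (6, 12, 18, 24, 36)}

for lag, value in acf.items():
    print(lag, round(value, 3))
```

Lags that are multiples of 12 come out strongly positive, while the half-cycle lags (6, 18) come out strongly negative.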

Alternately, if you want a statistical test, the CHTest can determine if seasonal differencing is required to stationarize the series.

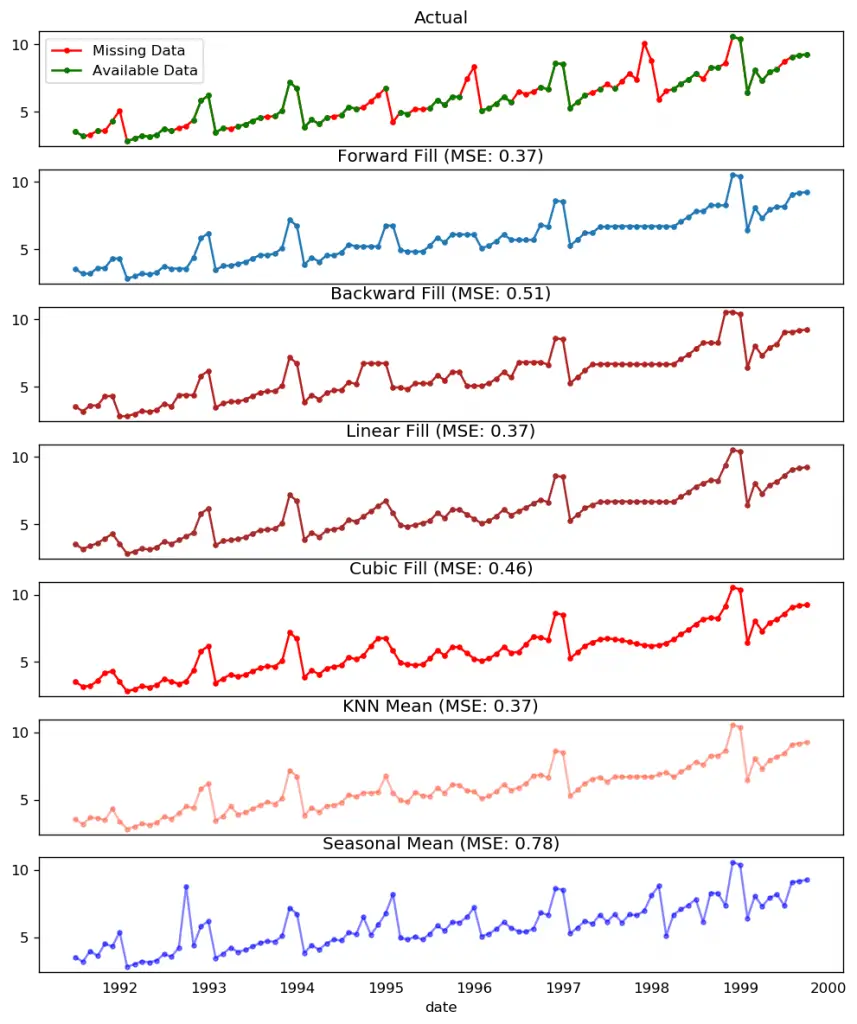

15. How to treat missing values in a time series?

Sometimes, your time series will have missing dates/times. That means the data was not captured or was not available for those periods. It could also be that the measurement was zero on those days, in which case you may fill those periods with zero.

Secondly, when it comes to time series, you should typically NOT replace missing values with the mean of the series, especially if the series is not stationary. What you could do instead for a quick and dirty workaround is to forward-fill the previous value.

However, depending on the nature of the series, you want to try out multiple approaches before concluding. Some effective alternatives to imputation are:

- Backward Fill

- Linear Interpolation

- Quadratic interpolation

- Mean of nearest neighbors

- Mean of seasonal counterparts

To measure the imputation performance, I manually introduce missing values to the time series, impute it with above approaches and then measure the mean squared error of the imputed against the actual values.
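That evaluation loop can be sketched as below for three of the approaches. The series is synthetic and the positions of the knocked-out values are arbitrary, both assumptions for illustration. Quadratic interpolation is left out because `interpolate(method='quadratic')` relies on SciPy.

```python
import numpy as np
import pandas as pd

idx = pd.date_range('2000-01-01', periods=60, freq='MS')
t = np.arange(60)
actual = pd.Series(10 + 0.5 * t + 3 * np.sin(2 * np.pi * t / 12), index=idx)

# Knock out some interior values on purpose (positions chosen arbitrarily).
with_gaps = actual.copy()
missing_pos = [5, 6, 20, 33, 34, 35, 50]
with_gaps.iloc[missing_pos] = np.nan

candidates = {
    'forward_fill': with_gaps.ffill(),
    'backward_fill': with_gaps.bfill(),
    'linear_interpolation': with_gaps.interpolate(method='linear'),
}

# Mean squared error of each imputation, measured only at the knocked-out points.
mse = {
    name: float(((filled.iloc[missing_pos] - actual.iloc[missing_pos]) ** 2).mean())
    for name, filled in candidates.items()
}

for name, value in mse.items():
    print(f'{name}: {value:.3f}')
```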

You could also consider the following approaches depending on how accurate you want the imputations to be.

- If you have explanatory variables use a prediction model like the random forest or k-Nearest Neighbors to predict it.

- If you have enough past observations, forecast the missing values.

- If you have enough future observations, backcast the missing values

- Forecast of counterparts from previous cycles.

16. What is autocorrelation and partial autocorrelation functions?

Autocorrelation is simply the correlation of a series with its own lags. If a series is significantly autocorrelated, that means, the previous values of the series (lags) may be helpful in predicting the current value.

Partial Autocorrelation also conveys similar information but it conveys the pure correlation of a series and its lag, excluding the correlation contributions from the intermediate lags.

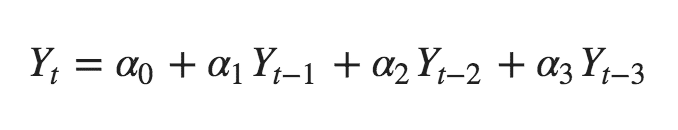

17. How to compute partial autocorrelation function?

So how to compute partial autocorrelation?

The partial autocorrelation of lag (k) of a series is the coefficient of that lag in the autoregression equation of Y. The autoregressive equation of Y is nothing but the linear regression of Y with its own lags as predictors.

For example, if Y_t is the current series and Y_t-1 is lag 1 of Y, then the partial autocorrelation of lag 3 (Y_t-3) is the coefficient $\alpha_3$ of Y_t-3 in the following equation:

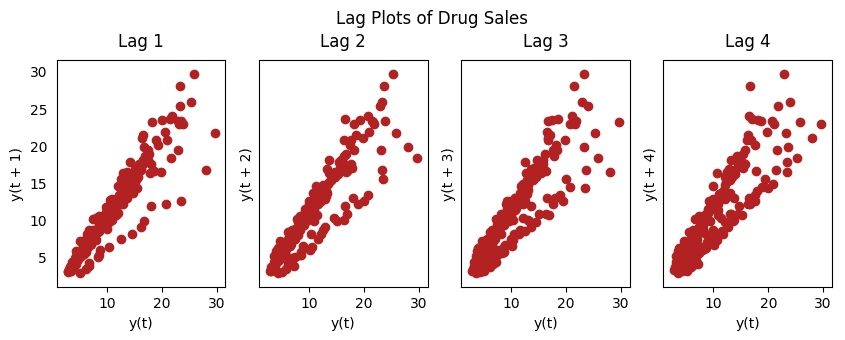
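Following that definition, the autoregression for lag 3 can be written as $Y_t = \alpha_0 + \alpha_1 Y_{t-1} + \alpha_2 Y_{t-2} + \alpha_3 Y_{t-3}$, and the partial autocorrelation can be estimated by fitting it with least squares. The helper `pacf_by_regression` and the simulated AR(1) series below are assumptions for illustration. Library routines may use a different estimator and give slightly different numbers.

```python
import numpy as np

def pacf_by_regression(y, k):
    """Coefficient of lag k when y[t] is regressed on lags 1..k plus an intercept."""
    y = np.asarray(y, dtype=float)
    n = y.size
    # Column j holds lag j of the series, aligned with the targets y[k:].
    lags = np.column_stack([y[k - j:n - j] for j in range(1, k + 1)])
    X = np.column_stack([np.ones(n - k), lags])
    coefs, *_ = np.linalg.lstsq(X, y[k:], rcond=None)
    return coefs[-1]   # the last coefficient belongs to lag k

# Simulated AR(1) series: y[t] = 0.7 * y[t-1] + noise.
rng = np.random.default_rng(3)
y = np.zeros(2000)
for t in range(1, y.size):
    y[t] = 0.7 * y[t - 1] + rng.normal()

pacf1 = pacf_by_regression(y, 1)
pacf3 = pacf_by_regression(y, 3)
print(round(pacf1, 2), round(pacf3, 2))
```

For an AR(1) process, the lag 1 value should sit near the true coefficient (0.7 here) and the lag 3 value near zero, because lag 3 adds nothing once lags 1 and 2 are accounted for.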

18. Lag Plots

A Lag plot is a scatter plot of a time series against a lag of itself. It is normally used to check for autocorrelation. If there is any pattern existing in the series like the one you see below, the series is autocorrelated. If there is no such pattern, the series is likely to be random white noise.

In below example on Sunspots area time series, the plots get more and more scattered as the n_lag increases.

19. How to estimate the forecastability of a time series?

The more regular and repeatable patterns a time series has, the easier it is to forecast. The ‘Approximate Entropy’ can be used to quantify the regularity and unpredictability of fluctuations in a time series.

The higher the approximate entropy, the more difficult it is to forecast it.

A better alternative is the ‘Sample Entropy’.

Sample Entropy is similar to approximate entropy but is more consistent in estimating the complexity even for smaller time series. For example, a random time series with fewer data points can have a lower ‘approximate entropy’ than a more ‘regular’ time series, whereas, a longer random time series will have a higher ‘approximate entropy’.

Sample Entropy handles this problem nicely. See the demonstration below.
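Here is a compact NumPy sketch of both measures, written from their usual textbook definitions. The function names, the window length `m=2` and the tolerance `r = 0.2 * std` are conventional choices made here for illustration, not values taken from the text.

```python
import numpy as np

def _embed(u, length):
    """Stack all windows of the given length as rows."""
    return np.array([u[i:i + length] for i in range(len(u) - length + 1)])

def _pairwise_chebyshev(x):
    """Largest coordinate-wise gap between every pair of rows."""
    return np.max(np.abs(x[:, None, :] - x[None, :, :]), axis=2)

def approx_entropy(u, m, r):
    u = np.asarray(u, dtype=float)
    def phi(length):
        dist = _pairwise_chebyshev(_embed(u, length))
        c = np.mean(dist <= r, axis=1)   # self-matches are counted
        return np.mean(np.log(c))
    return phi(m) - phi(m + 1)

def sample_entropy(u, m, r):
    u = np.asarray(u, dtype=float)
    n_templates = len(u) - m   # same number of templates for both lengths
    def count_pairs(length):
        dist = _pairwise_chebyshev(_embed(u, length)[:n_templates])
        return np.sum(dist <= r) - n_templates   # drop self-matches on the diagonal
    return -np.log(count_pairs(m + 1) / count_pairs(m))

rng = np.random.default_rng(4)
regular = np.sin(2 * np.pi * np.arange(300) / 25)   # highly repeatable
random_series = rng.uniform(size=300)               # no structure

for name, s in (('regular', regular), ('random', random_series)):
    r = 0.2 * np.std(s)
    print(name, round(approx_entropy(s, 2, r), 3), round(sample_entropy(s, 2, r), 3))
```

Both measures should come out clearly lower for the sine wave than for the random series. The pairwise distance matrix grows with the square of the series length, so this version is only suitable for short series.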

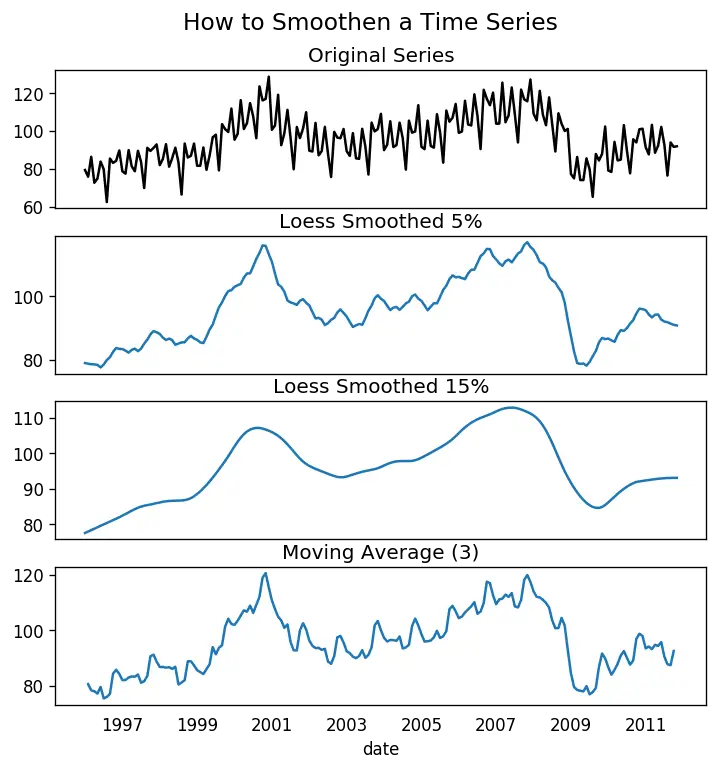

20. Why and How to smoothen a time series?

Smoothening of a time series may be useful in:

- Reducing the effect of noise in a signal to get a fair approximation of the noise-filtered series.

- The smoothed version of series can be used as a feature to explain the original series itself.

- Visualize the underlying trend better

So how to smoothen a series? Let’s discuss the following methods:

- Take a moving average

- Do a LOESS smoothing (Localized Regression)

- Do a LOWESS smoothing (Locally Weighted Regression)

Moving average is nothing but the average of a rolling window of defined width. But you must choose the window-width wisely, because, large window-size will over-smooth the series. For example, a window-size equal to the seasonal duration (ex: 12 for a month-wise series), will effectively nullify the seasonal effect.
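The effect of the window width is easy to check on a synthetic series with a known trend and a 12-month cycle. The data is an assumption for illustration.

```python
import numpy as np
import pandas as pd

idx = pd.date_range('2000-01-01', periods=72, freq='MS')
t = np.arange(72)
series = pd.Series(50 + 0.5 * t + 5 * np.sin(2 * np.pi * t / 12), index=idx)

ma_3 = series.rolling(window=3, center=True).mean()     # light smoothing
ma_12 = series.rolling(window=12, center=True).mean()   # one full seasonal cycle

# A 12-month window averages over every phase of the cycle, so only the trend
# survives: successive steps of the smoothed series equal the trend slope.
steps_12 = ma_12.dropna().diff().dropna()
steps_3 = ma_3.dropna().diff().dropna()
print(steps_12.round(6).unique(), round(steps_3.std(), 3))
```

The 3-month average still swings with the seasons, while the 12-month average moves in constant steps of 0.5, the slope of the trend.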

LOESS, short for ‘LOcalized regrESSion’, fits multiple regressions in the local neighborhood of each point. It is implemented in the statsmodels package, where you can control the degree of smoothing using the frac argument, which specifies the fraction of nearby data points that should be considered to fit a regression model.
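A minimal sketch of that call using the `lowess` function from statsmodels on a noisy synthetic signal. The data and the two `frac` values are assumptions for illustration.

```python
import numpy as np
from statsmodels.nonparametric.smoothers_lowess import lowess

rng = np.random.default_rng(5)
x = np.arange(200)
y = np.sin(x / 20) + rng.normal(scale=0.3, size=x.size)

# lowess takes the values first and the x positions second, and returns
# an array of (x, fitted value) pairs sorted by x.
smooth_05 = lowess(y, x, frac=0.05)[:, 1]   # 5% of the points per local fit
smooth_15 = lowess(y, x, frac=0.15)[:, 1]   # 15% of the points per local fit

def roughness(values):
    """Average absolute step between neighbouring points."""
    return np.abs(np.diff(values)).mean()

print(round(roughness(y), 3), round(roughness(smooth_05), 3), round(roughness(smooth_15), 3))
```

A larger `frac` uses more neighbours per local regression and so produces a smoother curve.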

Download dataset: Elecequip.csv

21. How to use Granger Causality test to know if one time series is helpful in forecasting another?

Granger causality test is used to determine if one time series will be useful to forecast another.

How does Granger causality test work?

It is based on the idea that if X causes Y, then the forecast of Y based on previous values of Y AND the previous values of X should outperform the forecast of Y based on previous values of Y alone.

So, understand that Granger causality should not be used to test if a lag of Y causes Y. Instead, it is generally used on exogenous (not Y lag) variables only.

It is nicely implemented in the statsmodels package.

It accepts a 2D array with 2 columns as the main argument. The values are in the first column and the predictor (X) is in the second column.

The null hypothesis is: the series in the second column does not Granger cause the series in the first. If the P-Values are less than a significance level (0.05), then you reject the null hypothesis and conclude that the said lag of X is indeed useful.

The second argument, maxlag, sets the maximum number of lags to include in the test.

In the above case, the P-Values are Zero for all tests. So the ‘month’ indeed can be used to forecast the Air Passengers.

22. What Next

That’s it for now. We started from the very basics and understood various characteristics of a time series. Once the analysis is done the next step is to begin forecasting.

In the next post, I will walk you through the in-depth process of building time series forecasting models using ARIMA. See you soon.

STAT 510: Applied Time Series Analysis

Time series data are intriguing yet complicated information to work with. While this course will provide students with a basic understanding of the nature and basic processes used to analyze such data, you will quickly realize that this is a small first step in being able to confidently understand what trends might exist within a set of data and the complexities of being able to use this information to make predictions or forecasts. Yet, whether it is financial, medical or weather related, this type of data is quite frequently found in much of our daily lives.

Course Topics

Topics typically covered in this graduate level course include:

- Understanding the characteristics of time series data

- Understanding moving average models and partial autocorrelation as foundations for analysis of time series data

- Exploratory Data Analysis - Trends in time series data

- Using smoothing and removing trends when working with time series data

- Understanding how periodograms are used with time series data

- Implementing ARMA and ARIMA time series models

- Identifying and interpreting various patterns for intervention effects

- Examining the analysis of repeated measures design

- Using ARCH and AR models in multivariate time series contexts

- Using spectral density estimation and spectral analysis

- Using fractional differencing and threshold models with time series data

- Statistical Modeling

- Modeling with R

- Time Series Methods

Course Author(s)

Dr. Megan Romer is the current author of the materials used in this course. The material builds on that of the course's previous authors, Robert Heckard and John Fricks.

This course makes extensive use of the R Statistical Software. This is open-source free software that can be downloaded from the R Project home page. For more information and links to download this software please see the Statistical Software page. MS Word is also required.

R involves programming. Students should be quick learners of software packages. Students who have no experience with programming or are anxious about being able to manipulate software code are strongly encouraged to take the one-credit course in R in order to establish this foundation. R will be supported and sample programs will be supplied but you will be required to do some programming on your own. Due to different software applications, software versions, and platforms, there may be issues with running code. Students must be proactive in seeking advice and help from appropriate sources, including documentation resources, the class discussion forum, the teaching assistant, instructor or helpdesk.

Shumway R.H., Stoffer, D.S. (2012). Time Series Analysis and Its Applications With R Examples , 4th Edition, Springer. ISBN: 978-3319524511

(The text is required, though students do not have to purchase it because it is available electronically through the Penn State library.)

Last updated: FA23

Assessment Plan

Lab / Homework Activities - will be given weekly. In order to receive credit for homework, all assignments must include HOW an answer is obtained, not just the numerical solution. These assignments can be compiled in Word; however, submission as a .pdf is preferred.

Exams - There will be one mid-term and one final exam. These are 'take-home' application oriented exams that should be completed in the time specified by the instructor.

Prerequisites

STAT 462 - Applied Regression Analysis, or STAT 501 - Regression Methods, or STAT 511 - Regression Analysis and Modeling

Time Series Analysis - Assignment 2

Time Series Analysis of Antarctica Land Ice Mass Series

James Angus

Required packages

Introduction

Aims/Objectives

This report summarises the analysis of the Antarctica land ice mass series, and the process followed to identify suitable ARIMA(p,d,q) models as part of Assignment 2 for Time Series Analysis. The report covers analysis of the dataset, addressing non-stationarity, and using model specification tools to suggest possible ARIMA models.

Methodology

Creating a function for producing ACF and PACF plots

To reduce repetition of R code, a function was created to produce ACF and PACF plots.

Retrieving the data

A summary of the Antarctica land ice mass data imported into R showed the imported dataset had 19 observations, including a minimum date of 2002 and a maximum date of 2020, the same as the original data file.

The data summary also showed that between 2002 and 2020, the mean annual change in land ice mass relative to 2001 was -1,063.77 billion metric tons. Considering the timeframe for the series was 19 years, it was shocking to note that the ‘Annual_ice_mass’ had a range of 2,531.51 billion metric tons, more than double the mean annual change and indicative of a very large change in land ice mass over the course of less than 20 years.

As the Antarctic land Ice dataset had been stored as a dataframe when it was first imported into R, it was converted to a time series object with a frequency of 1 as each observation was an annual change.

Exploring the data

The Antarctic land ice series was plotted to visually inspect the series for the 5 key time series traits: trend, seasonality, changing variance, behaviour, and change point/intervention.

Figure 1: Time series plot of the Annual Antarctica land ice mass series.

The time series plot of the Antarctica land ice mass series showed the following time series traits:

- Trend

From the time series plot in Figure 1, it was clear the series had a downward, linear trend, indicating that the Antarctic land ice mass was getting smaller relative to 2001 as time progressed.

- Seasonality

There were no clear signs of seasonality in Figure 1.

- Changing variance

There were no clear signs of changing variance in Figure 1.

- Behaviour

The time series plot in Figure 1 exhibited autoregressive (AR) behaviour, with many successive points. There were no clear fluctuations in the series, so there was no evidence of moving average (MA) behaviour.

- Change point

There was no clear evidence of a change point (intervention) in the Antarctica land ice mass series as the series appeared to exhibit the same trend and behaviour, with no sudden changes. This was not surprising considering the factors that would affect Antarctic land ice mass (e.g., climate change) do not change substantially from year to year. It would take a very large intervention (e.g., a meteor strike) to change the behaviour of the series.

The points in Figure 1 show evidence of succeeding measurements being related to one another, and Figure 2 highlights this relationship more clearly using a scatter plot of neighbouring pairs.

Figure 2: Scatter plot of each Annual Antarctica land ice mass measurement and the measurement taken in the previous year.

The scatter plot in Figure 2 shows a strong, upward trend, indicative of a strong correlation between neighbouring pairs. The plot indicates that small annual changes in Antarctic land ice mass (relative to 2001) tend to be followed by small changes, moderate changes tend to be followed by moderate changes, and large changes tend to be followed by large changes. These observations were supported by the correlation between neighbouring annual land ice mass changes which, at 0.985, indicated a very strong, positive correlation between neighbouring points.

Assessing stationarity

Figure 1 showed a strong, downward, linear trend in the Antarctica ice series, suggesting that the raw Antarctica ice series was non-stationary. As ARIMA models can only be produced using a stationary series, ACF and PACF plots were produced to assess the series for stationarity.

Figure 3: ACF and PACF plots of the Antarctica land ice mass series.

The ACF plot in Figure 3 showed 3 significant autocorrelation lags and a decaying pattern, indicative of a non-stationary, autoregressive process. There was no evidence of seasonality in the series as there was no clear wave pattern in the ACF lags.

The first partial autocorrelation lag in the PACF plot in Figure 3 was highly significant, while all other lags were not significant, indicative of a non-stationary, autoregressive series. Several unit root tests, including an Augmented Dickey-Fuller test, were also used to assess the series for stationarity, with results shown in Table 4.

Table 4: Statistical tests for stationarity and normality in the Antarctica land ice mass series.

The null hypothesis under the Augmented Dickey-Fuller test and the Phillips-Perron (PP) test is that the series is non-stationary. With p-values of 0.30 for the Augmented Dickey-Fuller test, and 0.75 for the PP test, there was insufficient evidence to reject the null hypothesis of both tests and therefore we could conclude that the series was not stationary. This conclusion was supported by results of a KPSS test, which produced a p-value of 0.01, indicating there was sufficient evidence to reject the null hypothesis under the KPSS test that the series was stationary. Finally, a Q-Q plot was produced to assess the raw series for normality, alongside a Shapiro-Wilk test. Although the Q-Q plot in Figure 4 showed deviations from the diagonal in each tail of the series, the Shapiro-Wilk test produced a p-value of 0.147, indicating that the measurements in the Antarctica land ice mass series were approximately normally distributed.

Figure 4: Normal Q-Q plot of the Antarctica land ice mass series.

Table 5: Result of the Shapiro-Wilk test on the raw Antarctica Ice series.

Addressing non-stationarity

Transformation

Although there was no clear evidence of changing variance in Figure 1, a Box-Cox transformation was applied to the series to observe the impact of transformation on the series.

As the series contained negative values (declines in the land ice mass relative to 2001), a constant had to be added to the series to make all values greater than zero before a Box-Cox transformation could be applied. A vector of candidate power transformation values was also required to obtain candidate lambda values for the Box-Cox transformation due to computational limits. Through trial and error, limits of zero and 2 were identified as appropriate power transformation limits for the Box-Cox transformation.

The plot of candidate lambda values for the Box-Cox transformation of the Antarctica land ice mass series can be seen in Figure 5. Table 6 shows the values of the first and third vertical lines from Figure 5 were 0.65 and 1.22, respectively, and the chosen lambda value for the Box-Cox transformation (the middle vertical line in Figure 5) was 0.89.

Figure 5: Log likelihood plot for the lambda values in the Box-Cox transformation of the Antarctica land ice mass series.

Table 6: Results for candidate lambda values from the Box-Cox power transformation function.

With an appropriate lambda value identified, the Box-Cox transformation was applied to the Antarctica Ice series and the resultant series was plotted for visual inspection in Figure 6.

Figure 6: Time series plot of the Box-Cox transformed Annual Antarctica land ice mass series.

The time series plot in Figure 6 showed no clear change or improvement in the variation of the series, and the Q-Q plot in Figure 7 still showed deviations from the diagonal at both tails of the series. There was also little change in the result from the Shapiro-Wilk test of the Box-Cox transformed series, shown in Table 7, indicating that values in the series were still approximately normally distributed.

Figure 7: Normal Q-Q Plot for the Box-Cox transformed Antarctica Ice series.

Table 7: Results from the Shapiro-Wilk test of the Box-Cox transformed Antarctic Ice series.

Finally, the Augmented Dickey-Fuller test (Table 8) produced a p-value of 0.563, indicating that the Box-Cox transformed Antarctica Ice series was non-stationary.

Table 8: Results from the Augmented Dickey-Fuller test of the Box-Cox transformed Antarctic Ice series.

As there was no evidence to suggest the raw Antarctica Ice series exhibited changing variation, and the Box-Cox transformation did not change or improve the normality of the series, the Box-Cox transformation was deemed unnecessary, and the raw series would be used when applying differencing to address non-stationarity.

Differencing

Differencing was applied to the raw Antarctica Ice series to address the trend in the raw series, with the resulting series plotted in Figure 8.

Figure 8: Time series plot of first differenced Antarctica Ice series

The time series plot in Figure 8 appeared to exhibit a slight downward trend, indicating that the first differenced series may still be non-stationary. However, the ACF and PACF plots of the first differenced Antarctica Ice series (Figure 9) showed no significant points and no decaying pattern, suggesting the first differenced series may have been stationary.

Figure 9: ACF and PACF plots of the first differenced Antarctica land ice mass series.

To test for stationarity, an Augmented Dickey-Fuller test of the first differenced series was performed, with the test resulting in a p-value of 0.357 which, at the α=0.05 level, indicated that the first differenced Antarctica Ice series was non-stationary.

Table 9: Result from the Augmented Dickey-Fuller test of the first differenced Antarctic Ice series.

As first differencing had not produced a stationary series, second differencing was applied to the raw Antarctica Ice series, with the resulting series plotted in Figure 10.
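As a rough illustration of why a second difference can be needed, the sketch below differences a toy series with a quadratic downward trend (not the Antarctica data). The helper name `difference` is hypothetical.

```python
def difference(series, order=1):
    """Apply first differencing `order` times; each pass shortens the series by one."""
    out = list(series)
    for _ in range(order):
        out = [b - a for a, b in zip(out[:-1], out[1:])]
    return out


# A quadratic trend: first differences still trend, second differences are constant.
quadratic = [-(t ** 2) for t in range(8)]
first = difference(quadratic, order=1)
second = difference(quadratic, order=2)
print(first)    # still drifting downward
print(second)   # flat
```

The first differences still drift downward, while the second differences are flat, which is the pattern the differencing steps in the text are looking for.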

Figure 10: Time series plot of second differenced Antarctica Ice series.

The second differenced time series in Figure 10 showed no clear trend, suggesting that second differencing may have resulted in a stationary series.

Figure 11: ACF and PACF plots of the second differenced Antarctica land ice mass series.

The ACF and PACF plots of the second differenced series (Figure 11) showed a significant PACF lag at the second lag, suggesting the second differenced series may be non-stationary. Supporting this was the Augmented Dickey-Fuller test of the second differenced series (Table 10), which produced a p-value of 0.073, indicating that, at the α=0.05 level, the second differenced series may still have been non-stationary.

However, as the result of the Augmented Dickey-Fuller test was within the “doubt range” of 0.03 to 0.1, additional unit root tests were performed to better evaluate whether second differencing had resulted in a stationary series.

Table 10: Results from the Augmented Dickey-Fuller test, Phillips-Perron test, and KPSS test of the second differenced Antarctic Ice series.

A Phillips-Perron Unit Root test and a KPSS test (Table 11) produced p-values of 0.026, and 0.1, respectively, indicating there was sufficient evidence to conclude the second differenced series was stationary.

Table 11: Results from the Phillips-Perron test, and KPSS test of the second differenced Antarctic Ice series.

As the series appeared stationary in Figure 10, and the Phillips-Perron Unit Root test and KPSS test in Table 11 indicated the second differenced series was stationary, it was concluded that second differencing of the Antarctica Ice series had resulted in a stationary series.

As second differencing the Antarctica Ice series had resulted in a stationary series, it was deemed suitable for use in model specification. All proposed ARIMA(p,d,q) models would have a value of “d” equal to 2, as second differencing had been applied.

Model specification

ACF & PACF plots

The first step in identifying potential models for the Antarctica Ice series was to use ACF and PACF plots to propose values for the orders of autoregression (p) and moving average (q).

Figure 12: ACF and PACF plots of the second differenced Antarctica Ice series.

From the PACF plot in Figure 12, the second partial autocorrelation lag was deemed significant, and the first lag was deemed borderline significant, indicating that the value of “p” could be 1 or 2. These values were understandable given autoregressive behaviour was identified in the raw Antarctica Ice series.

There were no clearly significant autocorrelation lags in the ACF plot of the second differenced series. However, the first lag of the ACF plot was deemed borderline significant, and thus the value of “q” was proposed as either 0 or 1.
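Reading candidate orders off ACF and PACF values can be sketched as below. This is a minimal standard-library illustration, not the plotting routine used for Figure 12: the sample PACF is computed with the Durbin-Levinson recursion, the significance bound is the usual approximate ±1.96/√n, and the alternating toy series stands in for real data.

```python
import math


def sample_acf(series, max_lag):
    """Sample autocorrelations for lags 1..max_lag."""
    n = len(series)
    mean = sum(series) / n
    dev = [v - mean for v in series]
    c0 = sum(d * d for d in dev)
    return [sum(dev[t] * dev[t + k] for t in range(n - k)) / c0
            for k in range(1, max_lag + 1)]


def sample_pacf(series, max_lag):
    """Sample partial autocorrelations for lags 1..max_lag (Durbin-Levinson recursion)."""
    r = [1.0] + sample_acf(series, max_lag)    # r[k] is the lag-k autocorrelation
    pacf, prev = [], []                        # prev holds phi_{k-1,1..k-1}
    for k in range(1, max_lag + 1):
        num = r[k] - sum(prev[j - 1] * r[k - j] for j in range(1, k))
        den = 1.0 - sum(prev[j - 1] * r[j] for j in range(1, k))
        phi_kk = num / den
        prev = [prev[j - 1] - phi_kk * prev[k - j - 1] for j in range(1, k)] + [phi_kk]
        pacf.append(phi_kk)
    return pacf


series = [1, -1] * 20                          # toy series, strongly autoregressive
bound = 1.96 / math.sqrt(len(series))          # approximate 95% significance bound
acf = sample_acf(series, max_lag=3)
pacf = sample_pacf(series, max_lag=3)
candidate_q = [k for k, v in enumerate(acf, start=1) if abs(v) > bound]
candidate_p = [k for k, v in enumerate(pacf, start=1) if abs(v) > bound]
print(candidate_p, candidate_q)
```

For this toy series the PACF cuts off after lag 1 while the ACF decays slowly, the classic signature of an AR(1) process. Borderline lags, as in the text, are a judgment call that the bound alone does not settle.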

The resulting proposed models from the ACF and PACF plots were:

- { ARIMA(1,2,0), ARIMA(2,2,0), ARIMA(1,2,1), ARIMA(2,2,1) }

The second step in identifying potential models for the Antarctica Ice series involved using the extended ACF function, or EACF. Running the EACF function on the second differenced series with default parameters resulted in an error, which was overcome by specifying the maximum values for AR and MA in the EACF function. The resulting output is shown in Table 12.

Table 12: EACF plot of the second differenced Antarctic Ice series.

The most top-left point in the EACF plot was selected as (0,0), and the models proposed using the EACF plot were:

- { ARIMA(0,2,0), ARIMA(0,2,1) }

It should be noted that the ARIMA(0,2,0) model will have no coefficients. Trend modelling would be used to see if this model captures the autocorrelation in the series.

The third step in identifying potential models for the Antarctica Ice series was to use the Bayesian Information Criterion, or “BIC”, to propose values for “p” and “q”.

The BIC plot was first produced using values of 10 for ‘nma’ and ‘nar’; however, this resulted in an error. As it was known from the ACF, PACF, and EACF plots that the orders of “p” and “q” would likely be small, the values of ‘nma’ and ‘nar’ were reduced to 5, and then to 3, with the resulting BIC plots shown in Figure 13 and Figure 14, respectively.

Figure 13: BIC plot of the second differenced Antarctic Ice series, using ‘nar’ and ‘nma’ values of 5.

Figure 14: BIC plot of the second differenced Antarctic Ice series, using ‘nar’ and ‘nma’ values of 3.

The BIC plot with values of 3 for ‘nar’ and ‘nma’ (Figure 14) proposed models that were in-line with the ACF, PACF, and EACF plots, so it was selected for use in proposing models for the second differenced series.

The model proposals from the BIC model specification method were:

- { ARIMA(1,2,1), ARIMA(2,2,1), ARIMA(3,2,1), ARIMA(1,2,0), ARIMA(2,2,0) }

Final set of possible models

The final set of possible models for the Antarctica land ice mass series was:

- { ARIMA(1,2,0), ARIMA(2,2,0), ARIMA(1,2,1), ARIMA(2,2,1), ARIMA(0,2,0), ARIMA(0,2,1), ARIMA(3,2,1) }

Most of these models made sense in the context of the data exploration, which identified autoregressive behaviour in the raw Antarctica Ice series (i.e., “p” would likely be non-zero) and no clear evidence of moving average behaviour (i.e., “q” would likely be low or zero). It should be noted that the ARIMA(0,2,0) model will have no coefficients, and trend modelling would be required to see if this model captures the autocorrelation in the series.

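The following models were proposed by more than one model specification method (in each case, both the ACF/PACF plots and the BIC plot):

- { ARIMA(1,2,0), ARIMA(2,2,0), ARIMA(1,2,1), ARIMA(2,2,1) }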
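The bookkeeping behind the combined set and the overlap can be checked with a short sketch. The (p, d, q) tuples are copied from the three lists of proposed models above; the variable names are illustrative only.

```python
from collections import Counter

# (p, d, q) orders proposed by each specification method, as listed in the text.
proposals = {
    "ACF/PACF": {(1, 2, 0), (2, 2, 0), (1, 2, 1), (2, 2, 1)},
    "EACF": {(0, 2, 0), (0, 2, 1)},
    "BIC": {(1, 2, 1), (2, 2, 1), (3, 2, 1), (1, 2, 0), (2, 2, 0)},
}

# Count how many methods proposed each order.
votes = Counter(order for models in proposals.values() for order in models)
all_models = sorted(votes)
shared = sorted(order for order, count in votes.items() if count > 1)

print(len(all_models), "candidate models in total")
print("proposed by more than one method:", shared)
```

Appearing in more than one list does not make a model better; it only flags candidates worth examining first during diagnostic checking.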

Further testing, including diagnostic checking, would be required to identify the optimal model from the set of proposed models.

Summary & Conclusion

The objective of the analysis and model specification conducted was to understand the Antarctica land ice mass dataset, and to propose a set of possible ARIMA(p,d,q) models for the series.

Exploration of the Antarctica land ice mass dataset revealed a large range and a mean change of -1,063.77 billion metric tons, relative to the ice mass in 2001.

Analysis of the Antarctica Ice series identified that the series exhibited a clear downward trend and autoregressive behaviour (successive points), with no clear signs of seasonality, changing variance, or a change point. A high correlation between neighbouring points was also identified, which aligned with the autocorrelation behaviour observed when the series was plotted.

ACF and PACF plots of the Antarctica Ice series identified significant autocorrelation lags, and a single, very significant partial autocorrelation lag, indicative of a non-stationary series. Further tests confirmed that the raw series was not stationary, but that the measurements in the series were approximately normally distributed.

Although the series did not exhibit changing variance, a Box-Cox transformation was applied to the series to observe its impact. Visual inspection, a Shapiro-Wilk test, and a unit root test identified that the Box-Cox transformation did not change the series much, so the transformation was deemed unnecessary.

Differencing was then applied to the raw series to address the trend in the series. Visual inspection and a unit root test confirmed that first differencing did not overcome the non-stationarity, and so second differencing was applied. Although the Augmented Dickey-Fuller test of the second differenced series suggested the series was still non-stationary, visual inspection, and further unit root tests (PP and KPSS) confirmed that second differencing had made the series stationary. As such, the second differenced series was chosen for use in model specification.

ACF, PACF, EACF and BIC model specification tools were used to propose suitable ARIMA(p,d,q) models for the Antarctica Ice series, with the final set of proposed models containing 7 ARIMA(p,d,q) models:

{ ARIMA(1,2,0), ARIMA(2,2,0), ARIMA(1,2,1), ARIMA(2,2,1), ARIMA(0,2,0), ARIMA(0,2,1), ARIMA(3,2,1) }.

Time Series and Forecasting: A Project-based Approach with R

Chapter 21 assignment: project proposal.

In this assignment you will develop your initial concept note into a draft of a full project proposal. Treat this assignment as a “dry run” for developing a proposal for a grant or fellowship application, or for your Ph.D. prospectus.

Your proposal should include at least the following sections and information.

Front matter: Descriptive title, your name, date, reference to “SYS 5581 Time Series & Forecasting, Spring 2021”.

Abstract: A very brief summary of the project.

21.1 Introduction

Give a narrative description of the problem you are addressing, and the methods you will use to address it. Provide context:

- What is the question you are attempting to answer?

- Why is this question important? (Who cares?)

- How will you go about attempting to answer this question?

This work addresses the question: Why do people not use probabilistic forecasts for decision-making?

21.2 The data and the data-generating process

Describe the data set you will be analyzing, and where it comes from, how it was generated and collected. Identify the source of the data. Give a narrative description of the data-generating process: this piece is critical.

Since these will be time series data: identify the frequency of the data series (e.g., hourly, monthly), and the period of record.

21.3 Exploratory data analysis

Provide a brief example of the data, showing how they are structured.

21.4 Plot the time series.

21.5 Perform and report the results of other exploratory data analysis

21.5.1 STL decomposition

21.5.2 Fitting data to simple models

21.5.3 Work with ln(GDP)

21.6 Statistical model

21.6.1 Formal model of data-generating process

Write down an equation (or set of equations) that represent the data-generating process formally.

If applicable: describe any transformations of the data (e.g., differencing, taking logs) you need to make to get the data into a form (e.g., linear) ready for numerical analysis.

What kind of process is it? \(AR(p)\) ? White noise with drift? Something else?

Write down an equation expressing each realization of the stochastic process \(y_t\) as a function of other observed data (which could include lagged values of \(y\) ), unobserved parameters ( \(\beta\) ), and an error term ( \(\varepsilon_t\) ). Ex:

\[y = X\cdot\beta + \varepsilon\]

Add a model of the error process. Ex: \(\varepsilon \sim N(0, \sigma^2 I_T)\).
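As one possible illustration of this setup (not a required approach for the assignment), the sketch below treats an AR(1) process with intercept, \(y_t = c + \phi y_{t-1} + \varepsilon_t\), as a regression of \(y_t\) on a constant and \(y_{t-1}\), and recovers the parameters by ordinary least squares. The data are simulated, and the parameter values and function names are assumptions chosen for the example. Python is used only to keep the sketch dependency-free; the same steps carry over to R.

```python
import random


def simulate_ar1(n, c, phi, sigma, seed=0):
    """Simulate y_t = c + phi * y_{t-1} + eps_t with eps_t ~ N(0, sigma^2)."""
    rng = random.Random(seed)
    y = [c / (1.0 - phi)]                 # start at the stationary mean
    for _ in range(n - 1):
        y.append(c + phi * y[-1] + rng.gauss(0.0, sigma))
    return y


def fit_ar1_ols(y):
    """Regress y_t on (1, y_{t-1}); returns (c_hat, phi_hat)."""
    x, target = y[:-1], y[1:]
    n = len(x)
    mean_x, mean_t = sum(x) / n, sum(target) / n
    sxx = sum((a - mean_x) ** 2 for a in x)
    sxy = sum((a - mean_x) * (b - mean_t) for a, b in zip(x, target))
    phi_hat = sxy / sxx
    return mean_t - phi_hat * mean_x, phi_hat


y = simulate_ar1(n=2000, c=1.0, phi=0.6, sigma=1.0)
c_hat, phi_hat = fit_ar1_ols(y)
print(f"c_hat = {c_hat:.3f}, phi_hat = {phi_hat:.3f}")
```

Here the matrix \(X\) has two columns, a column of ones and the lagged series, and \(\beta = (c, \phi)\).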

21.6.2 Discussion of the statistical model

Describe how the formal statistical model captures and aligns with the narrative of the data-generating process. Flag any statistical challenges raised by the data generating process, e.g. selection bias; survivorship bias; omitted variables bias, etc.

21.7 Plan for data analysis

Describe what information you wish to extract from the data. Do you wish to… estimate the values of the unobserved model parameters? create a tool for forecasting? estimate the exceedance probabilities for future realizations of \(y_t\) ?

Describe your plan for getting this information. OLS regression? Some other statistical technique?

If you can: describe briefly which computational tools you will use (e.g., R), and which packages you expect to draw on.

21.8 Submission requirements

Prepare your proposal using Markdown. (You may find it useful to generate your Markdown file from some other tool, e.g. R Markdown in RStudio.) Submit your proposal by pushing it to your repo within the course organization on GitHub. When your proposal is ready, notify the instructor by also creating a submission for this assignment on Collab. Please also upload a PDF version of your proposal to Collab as part of your submission.

21.9 Comment

Depending on your prior experience, you may find this assignment challenging. Treat this assignment as an opportunity to make progress on your own research program. Make your proposal as complete as you can. But note that this assignment is merely the First Draft. You will have more opportunity to refine your work over the next two months, in consultation with the instructor, your advisor, and your classmates.

21.10 References

Time Series Analysis Explained

Time series analysis is a powerful statistical method that examines data points collected at regular intervals to uncover underlying patterns and trends. This technique is highly relevant across various industries, as it enables informed decision making and accurate forecasting based on historical data. By understanding the past and predicting the future, time series analysis plays a crucial role in fields such as finance, health care, energy, supply chain management, weather forecasting, marketing, and beyond. In this guide, we will dive into the details of what time series analysis is, why it’s used, the value it creates, how it’s structured, and the important base concepts to learn in order to understand the practice of using time series in your data analytics practice.

Table of Contents

- What Is Time Series Analysis?

- Why Do Organizations Use Time Series Analysis?

- Components of Time Series Data

- Types of Data

- Important Time Series Terms and Concepts

- Time Series Analysis Techniques

- Advantages of Time Series Analysis

- Challenges of Time Series Analysis

- The Future of Time Series Analysis

What Is Time Series Analysis?

Time series analysis is indispensable in data science, statistics, and analytics.

At its core, time series analysis focuses on studying and interpreting a sequence of data points recorded or collected at consistent time intervals. Unlike cross-sectional data, which captures a snapshot in time, time series data is fundamentally dynamic, evolving over chronological sequences both short and extremely long. This type of analysis is pivotal in uncovering underlying structures within the data, such as trends, cycles, and seasonal variations.

Technically, time series analysis seeks to model the inherent structures within the data, accounting for phenomena like autocorrelation, seasonal patterns, and trends. The order of data points is crucial; rearranging them could lose meaningful insights or distort interpretations. Furthermore, time series analysis often requires a substantial dataset to maintain the statistical significance of the findings. This enables analysts to filter out 'noise,' ensuring that observed patterns are not mere outliers but statistically significant trends or cycles.

To delve deeper into the subject, you must distinguish between time-series data, time-series forecasting, and time-series analysis. Time-series data refers to the raw sequence of observations indexed in time order. Time-series forecasting uses historical data to make future projections, often employing statistical models like ARIMA (AutoRegressive Integrated Moving Average). Time-series analysis, the overarching practice, systematically studies this data to identify and model its internal structures, including seasonality, trends, and cycles. What sets time series apart is its time-dependent nature, the requirement for a sufficiently large sample size for accurate analysis, and its capacity to highlight cause-effect relationships that evolve over time.

Why Do Organizations Use Time Series Analysis?

Time series analysis has become a crucial tool for companies looking to make better decisions based on data. By studying patterns over time, organizations can understand past performance and predict future outcomes in a relevant and actionable way. Time series helps turn raw data into insights companies can use to improve performance and track historical outcomes.

For example, retailers might look at seasonal sales patterns to adapt their inventory and marketing. Energy companies could use consumption trends to optimize their production schedule. The applications even extend to detecting anomalies—like a sudden drop in website traffic—that reveal deeper issues or opportunities. Financial firms use it to respond to stock market shifts instantly. And health care systems need it to assess patient risk in the moment.

Rather than a series of stats, time series helps tell a story about evolving business conditions over time. It's a dynamic perspective that allows companies to plan proactively, detect issues early, and capitalize on emerging opportunities.

Components of Time Series Data

Time series data is generally composed of different components that characterize the patterns and behavior of the data over time. By analyzing these components, we can better understand the dynamics of the time series and create more accurate models. Four main elements make up a time series dataset:

- Trends

- Seasonality

- Cycles

- Noise

Trends show the general direction of the data, and whether it is increasing, decreasing, or remaining flat over an extended period of time. Trends indicate the long-term movement in the data and can reveal overall growth or decline. For example, e-commerce sales may show an upward trend over the last five years.

Seasonality refers to predictable patterns that recur regularly, like yearly retail spikes during the holiday season. Seasonal components exhibit fluctuations fixed in timing, direction, and magnitude. For instance, electricity usage may surge every summer as people turn on their air conditioners.

Cycles demonstrate fluctuations that do not have a fixed period, such as economic expansions and recessions. These longer-term patterns last longer than a year and do not have consistent amplitudes or durations. Business cycles that oscillate between growth and decline are an example.

Finally, noise encompasses the residual variability in the data that the other components cannot explain. Noise includes unpredictable, erratic deviations after accounting for trends, seasonality, and cycles.

In summary, the key components of time series data are:

- Trends: Long-term increases, decreases, or flat movement

- Seasonality: Predictable patterns at fixed intervals

- Cycles: Fluctuations without a consistent period

- Noise: Residual unexplained variability

Understanding how these elements interact allows for deeper insight into the dynamics of time series data.
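To make the components concrete, here is a minimal sketch of a classical additive decomposition using only the Python standard library. It assumes an additive model (series = trend + seasonality + noise) with a known seasonal period, folds any cycles into the trend, and runs on a constructed toy series. The function names are hypothetical.

```python
def centered_moving_average(series, period):
    """Centered moving average; uses a 2 x period average when the period is even.
    Points too close to either end are left as None."""
    n, half = len(series), period // 2
    trend = [None] * n
    for t in range(half, n - half):
        window = series[t - half:t + half + 1]
        if period % 2:
            trend[t] = sum(window) / period
        else:
            # Even period: the two end points of the window get half weight.
            trend[t] = (sum(window) - 0.5 * (window[0] + window[-1])) / period
    return trend


def decompose_additive(series, period):
    """Split a series into trend, seasonal, and residual parts (additive model)."""
    trend = centered_moving_average(series, period)
    detrended = [None if tr is None else v - tr for v, tr in zip(series, trend)]
    means = []
    for pos in range(period):
        vals = [d for i, d in enumerate(detrended) if d is not None and i % period == pos]
        means.append(sum(vals) / len(vals))
    centre = sum(means) / period              # force seasonal effects to sum to zero
    seasonal = [means[i % period] - centre for i in range(len(series))]
    residual = [None if tr is None else v - tr - s
                for v, tr, s in zip(series, trend, seasonal)]
    return trend, seasonal, residual


# Toy quarterly series: linear trend plus a repeating seasonal pattern, no noise.
pattern = [3, -1, -2, 0]
series = [2 * t + pattern[t % 4] for t in range(24)]
trend, seasonal, residual = decompose_additive(series, period=4)
print(trend[5], seasonal[:4])
```

On this noise-free series the decomposition recovers the trend and seasonal pattern, leaving residuals of zero. With real data the residual holds whatever the other components cannot explain.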

Types of Data

When embarking on time series analysis, the first step is often understanding the type of data you're working with. This categorization primarily falls into three distinct types: Time Series Data, Cross-Sectional Data, and Pooled Data. Each type has unique features that guide the subsequent analysis and modeling.

- Time Series Data: Comprises observations collected at different time intervals. It's geared towards analyzing trends, cycles, and other temporal patterns.

- Cross-Sectional Data: Involves data points collected at a single moment in time. Useful for understanding relationships or comparisons between different entities or categories at that specific point.

- Pooled Data: A combination of Time Series and Cross-Sectional data. This hybrid enriches the dataset, allowing for more nuanced and comprehensive analyses.

Understanding these data types is crucial for appropriately tailoring your analytical approach, as each comes with its own set of assumptions and potential limitations.

Important Time Series Terms & Concepts

Time series analysis is a specialized branch of statistics focused on studying data points collected or recorded sequentially over time. It incorporates various techniques and methodologies to identify patterns, forecast future data points, and make informed decisions based on temporal relationships among variables. This form of analysis employs an array of terms and concepts that help in the dissection and interpretation of time-dependent data.

- Dependence : The relationship between two observations of the same variable at different periods is crucial for understanding temporal associations.

- Stationarity : A property where the statistical characteristics like mean and variance are constant over time; often a prerequisite for various statistical models.

- Differencing : A transformation technique to turn non-stationary time series data into stationary data by subtracting consecutive or lagged values.

- Specification : The process of choosing an appropriate analytical model for time series analysis. It can involve selection criteria such as the type of curve or the degree of differencing.

- Exponential Smoothing : A forecasting method that uses a weighted average of past observations, prioritizing more recent data points for making short-term predictions.

- Curve Fitting : The use of mathematical functions to best fit a set of data points, often employed for non-linear relationships in the data.

- ARIMA (Auto Regressive Integrated Moving Average) : A widely-used statistical model for analyzing and forecasting time series data, encompassing aspects like auto-regression, integration (differencing), and moving average.

Time series analysis is critical for businesses to predict future outcomes, assess past performances, or identify underlying patterns and trends in various metrics. Time series analysis can offer valuable insights into stock prices, sales figures, customer behavior, and other time-dependent variables. By leveraging these techniques, businesses can make informed decisions, optimize operations, and enhance long-term strategies.

Time Series Analysis Techniques

Time series analysis offers a multitude of benefits to businesses. The applications are also wide-ranging, whether it's in forecasting sales to manage inventory better, identifying the seasonality in consumer behavior to plan marketing campaigns, or even analyzing financial markets for investment strategies. Different techniques serve distinct purposes and offer varied granularity and accuracy, making it vital for businesses to understand the methods that best suit their specific needs.

- Moving Average : Useful for smoothing out short-term fluctuations so that longer-term trends stand out. It is ideal for removing noise and identifying the general direction in which values are moving.

- Exponential Smoothing : Suited for univariate data with a systematic trend or seasonal component. Assigns higher weight to recent observations, allowing for more dynamic adjustments.

- Autoregression : Leverages past observations as inputs for a regression equation to predict future values. It is good for short-term forecasting when past data is a good indicator.

- Decomposition : This breaks down a time series into its core components—trend, seasonality, and residuals—to enhance the understanding and forecast accuracy.

- Time Series Clustering : Unsupervised method to categorize data points based on similarity, aiding in identifying archetypes or trends in sequential data.

- Wavelet Analysis : Effective for analyzing non-stationary time series data. It helps in identifying patterns across various scales or resolutions.

- Intervention Analysis : Assesses the impact of external events on a time series, such as the effect of a policy change or a marketing campaign.

- Box-Jenkins ARIMA models : Focuses on using past behavior and errors to model time series data. Assumes data can be characterized by a linear function of its past values.

- Box-Jenkins Multivariate models : Similar to ARIMA, but accounts for multiple variables. Useful when other variables influence one time series.

- Holt-Winters Exponential Smoothing : Best for data with a distinct trend and seasonality. Incorporates weighted averages and builds upon the equations for exponential smoothing.
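Two of the simplest techniques in this list, the moving average and simple exponential smoothing, can be sketched in a few lines. The sales figures are made up for illustration, the function names are hypothetical, and the window of 3 and smoothing weight of 0.5 are arbitrary choices rather than recommendations.

```python
def moving_average(series, window):
    """Trailing simple moving average; the first window - 1 points produce no value."""
    return [sum(series[i - window + 1:i + 1]) / window
            for i in range(window - 1, len(series))]


def exponential_smoothing(series, alpha):
    """Simple exponential smoothing: s_t = alpha * y_t + (1 - alpha) * s_{t-1}, with s_0 = y_0."""
    smoothed = [series[0]]
    for value in series[1:]:
        smoothed.append(alpha * value + (1 - alpha) * smoothed[-1])
    return smoothed


sales = [10, 12, 11, 15, 14, 18, 17, 21]   # made-up numbers for illustration
ma3 = moving_average(sales, window=3)
ses = exponential_smoothing(sales, alpha=0.5)
print(ma3)
print(ses)
```

A larger window or a smaller `alpha` gives a smoother line that reacts more slowly to recent changes. The moving average weights every point in the window equally, whereas exponential smoothing gives the most recent observations the most weight.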

The Advantages of Time Series Analysis

Time series analysis is a powerful tool for data analysts that offers a variety of advantages for both businesses and researchers. Its strengths include:

- Data Cleansing : Time series analysis techniques such as smoothing and seasonality adjustments help remove noise and outliers, making the data more reliable and interpretable.

- Understanding Data : Models like ARIMA or exponential smoothing provide insight into the data's underlying structure. Autocorrelations and stationarity measures can help understand the data's true nature.

- Forecasting : One of the primary uses of time series analysis is to predict future values based on historical data. Forecasting is invaluable for business planning, stock market analysis, and other applications.

- Identifying Trends and Seasonality : Time series analysis can uncover underlying patterns, trends, and seasonality in data that might not be apparent through simple observation.

- Visualizations : Through time series decomposition and other techniques, it's possible to create meaningful visualizations that clearly show trends, cycles, and irregularities in the data.

- Efficiency : With time series analysis, less data can sometimes be more. Focusing on critical metrics and periods can often derive valuable insights without getting bogged down in overly complex models or datasets.

- Risk Assessment : Volatility and other risk factors can be modeled over time, aiding financial and operational decision-making processes.

Challenges of Time Series Analysis

While time series analysis has a lot to offer, it also comes with its own set of limitations and challenges, such as:

- Limited Scope : Time series analysis is restricted to time-dependent data. It's not suitable for cross-sectional or purely categorical data.

- Noise Introduction : Techniques like differencing can introduce additional noise into the data, which may obscure fundamental patterns or trends.

- Interpretation Challenges : Some transformed or differenced values may lack an intuitive meaning, making it harder to understand the real-world implications of the results.

- Generalization Issues : Results may not always be generalizable, particularly when the analysis is based on a single, isolated dataset or period.

- Model Complexity : The choice of model can greatly influence the results, and selecting an inappropriate model can lead to unreliable or misleading conclusions.

- Non-Independence of Data : Unlike the observations assumed in many other types of statistical analysis, time series data points are typically not independent of one another, which can introduce bias or error if the dependence is ignored.

- Data Availability : Time series analysis often requires many data points for reliable results, and such data may not always be easily accessible or available.

The Future of Time Series Analysis

The future of time series analysis will likely see significant advances thanks to innovations in machine learning and artificial intelligence. These technologies will enable more sophisticated and accurate forecasting models while also improving how we handle real-world complexities like missing data and sparse datasets.

Some key developments are likely to include:

- Hybrid models strategically combine multiple techniques —such as ARIMA, exponential smoothing, deep learning LSTM networks, and Fourier transforms—to capitalize on their respective strengths. Blending approaches in this way can produce more robust and precise forecasts.

- Advanced deep learning algorithms like LSTM recurrent neural networks can uncover subtle patterns and interdependencies in time series data. LSTMs excel at sequence modeling and time series forecasting tasks.

- Real-time analysis and monitoring using predictive analytics and anomaly detection over streaming data. Real-time analytics will become indispensable for time-critical monitoring and decision-making applications as computational speeds increase.

- Automated time series model selection using hyperparameter tuning, Bayesian methods, genetic algorithms, and other techniques to systematically determine the optimal model specifications and parameters for a given dataset and context. This relieves analysts of much tedious trial-and-error testing.

- State-of-the-art missing data imputation, cleaning, and preprocessing techniques to overcome data quality issues: For example, advanced interpolation, Kalman filtering, and robust statistical methods can minimize distortions caused by gaps, noise, outliers, and irregular intervals in time series data.

In summary, we can expect major leaps in time series forecasting accuracy, efficiency, and applicability as modern AI and data processing innovations integrate into standard applied analytics practice. The future is bright for leveraging these technologies to extract valuable insights from time series data.


The University of Chicago The Law School

Innovation clinic—significant achievements for 2023-24.

The Innovation Clinic continued its track record of success during the 2023-2024 school year, facing unprecedented demand for our pro bono services as our reputation for providing high caliber transactional and regulatory representation spread. The overwhelming number of assistance requests we received from the University of Chicago, City of Chicago, and even national startup and venture capital communities enabled our students to cherry-pick the most interesting, pedagogically valuable assignments offered to them. Our focus on serving startups, rather than all small- to medium-sized businesses, and our specialization in the needs and considerations that these companies have, which differ substantially from the needs of more traditional small businesses, has proven to be a strong differentiator for the program both in terms of business development and prospective and current student interest, as has our further focus on tackling idiosyncratic, complex regulatory challenges for first-of-their kind startups. We are also beginning to enjoy more long-term relationships with clients who repeatedly engage us for multiple projects over the course of a year or more as their legal needs develop.

This year’s twelve students completed over twenty projects and represented clients in a very broad range of industries: mental health and wellbeing, content creation, medical education, biotech and drug discovery, chemistry, food and beverage, art, personal finance, renewable energy, fintech, consumer products and services, artificial intelligence (“AI”), and others. The matters that the students handled gave them an unparalleled view into the emerging companies and venture capital space, at a level of complexity and agency that most junior lawyers will not experience until several years into their careers.

Representative Engagements

While the Innovation Clinic’s engagements are highly confidential and cannot be described in detail, a high-level description of a representative sample of projects undertaken by the Innovation Clinic this year includes:

Transactional/Commercial Work

- A previous client developing a symptom-tracking wellness app for chronic disease sufferers engaged the Innovation Clinic again, this time to restructure its cap table by moving one founder’s interest in the company to a foreign holding company and subjecting the holding company to appropriate protections in favor of the startup.

- Another client with whom the Innovation Clinic had already worked several times engaged us for several new projects, including (1) restructuring their cap table and issuing equity to an additional, new founder, (2) drafting several different forms of license agreements that the company could use when generating content for the platform, covering situations in which the company would license existing content from other providers, jointly develop new content together with contractors or specialists that would then be jointly owned by all creators, or commission contractors to make content solely owned by the company, (3) drafting simple agreements for future equity (“Safes”) for the company to use in its seed stage fundraising round, and (4) drafting terms of service and a privacy policy for the platform.

- Yet another repeat client, an internet platform that supports independent artists by creating short films featuring the artists to promote their work and facilitates sales of the artists’ art through its platform, retained us this year to draft a form of independent contractor agreement that could be used when the company hires artists to be featured in content that the company’s Fortune 500 brand partners commission from the company, and to create capsule art collections that could be sold by these Fortune 500 brand partners in conjunction with the content promotion.

- For a platform using AI to accelerate the Investigational New Drug (IND) approval and application process, we drafted a form of license agreement for use with its customers and an NDA for prospective investors.

- A novel personal finance platform for young, high-earning individuals engaged the Innovation Clinic to form an entity for the platform, including helping the founders to negotiate a deal among them with respect to roles and equity, terms that the equity would be subject to, and other post-incorporation matters, as well as to draft terms of service and a privacy policy for the platform.

- Students also formed entities for a biotech therapeutics company founded by University of Chicago faculty members and for an AI-powered legal billing management platform founded by University of Chicago students.

- A founder the Innovation Clinic had represented in connection with one venture engaged us on behalf of his other venture team to draft an equity incentive plan for the company as well as other required implementing documentation. The venture with which we previously worked also engaged us this year to draft Safes to be used with over twenty investors in a seed financing round.

More information regarding other types of transactional projects that we typically take on can be found here.

Regulatory Research and Advice

- A team of Innovation Clinic students invested a substantial portion of our regulatory time this year performing highly detailed and complicated research into the public utilities laws of several states to advise a groundbreaking renewable energy technology company on how its product might be regulated in these states and on its clearest path to market. This project involved reviewing the relevant state statutes; analyzing the interplay between state and federal public utilities statutes; and examining the administrative codes of the relevant state executive branch agencies, along with binding and non-binding administrative orders, decisions, and guidance from those agencies in other contexts that could shed light on how these states would regulate a never-before-seen product that their laws clearly never contemplated. The highly varied approaches to utilities regulation across the states examined led to a nuanced set of analyses and recommendations for the client.

- In another significant research project, a separate team of Innovation Clinic students assembled a compliance playbook for a fintech company. To do so, the students undertook a comprehensive review of all settlement orders and court decisions related to actions brought by the Consumer Financial Protection Bureau for violations of the prohibition on unfair, deceptive, or abusive acts and practices under the Consumer Financial Protection Act, as well as selected relevant settlement orders, court decisions, and other formal and informal guidance documents related to actions brought by the Federal Trade Commission for violations of the prohibition on unfair or deceptive acts or practices under Section 5 of the Federal Trade Commission Act. The playbook distilled very complicated, voluminous legal decisions and concepts into a series of bullet points with clear, easy-to-follow rules and best practices, designed to be distributed to non-lawyers in many different facets of the business. It covered all aspects of operations that could subject a company like this one to liability under the laws examined, including asset purchase transactions, marketing and consumer onboarding, usage of certain terms of art in advertising, disclosure requirements, fee structures, communications with customers, legal documentation requirements, customer service and support, debt collection practices, arrangements with third parties who act on the company’s behalf, and more.

Miscellaneous

- Last year’s students built upon the Innovation Clinic’s progress in shaping the rules promulgated by the Financial Crimes Enforcement Network (“FinCEN”) pursuant to the Corporate Transparency Act to create a client alert summarizing the final rule, its impact on startups, and what startups need to know in order to comply. When FinCEN issued additional guidance with respect to that final rule and changed portions of the final rule including timelines for compliance, this year’s students updated the alert, then distributed it to current and former clients to notify them of the need to comply. The final bulletin is available here.

- In furtherance of that work, additional Innovation Clinic students this year analyzed the impact of the final rule not just on the Innovation Clinic’s clients but also on the Innovation Clinic itself, and how the Innovation Clinic should change its practices to ensure compliance and minimize its own risk. This also involved putting together a comprehensive filing guide for companies that are ready to file their certificates of incorporation to show them procedurally how to do so and explain the choices they must make during the filing process, so that the Innovation Clinic would not be involved in directing or controlling the filings and thus would not be considered a “company applicant” on any client’s Corporate Transparency Act filings with FinCEN.

- The Innovation Clinic also began producing thought leadership pieces regarding AI, leveraging our distinctly University of Chicago expertise in structuring early-stage companies and analyzing complex regulatory issues through a law and economics lens to add our voice to those speaking on this important topic. One student wrote about whether non-profits are really the most desirable form of entity for mitigating risks associated with AI development, and another team of students prepared an analysis of the EU’s AI Act, compared it to the Executive Order on AI from President Biden, and recommended a path forward for an AI regulatory environment in the United States. Both pieces can be found here, with more to come!

Innovation Trek

Thanks to another generous gift from Douglas Clark, ’89, managing partner of Wilson, Sonsini, Goodrich & Rosati, we were able to hold the second Innovation Trek over Spring Break 2024. The Innovation Trek provides University of Chicago Law School students with a rare opportunity to explore the innovation and venture capital ecosystem at its epicenter, Silicon Valley. The program enables participating students to learn from business and legal experts in a variety of industries and roles within the ecosystem, to see how the law and economics principles that students learn about in the classroom play out in the real world, and it facilitates meaningful connections between alumni, students, and other speakers who are leaders in their fields. This year, we took twenty-three students (as opposed to twelve during the first Trek) and expanded the offering to include not just Innovation Clinic students but also interested students from our JD/MBA Program and Doctoroff Business Leadership Program. We also enjoyed four jam-packed days in Silicon Valley, expanding the trip from the two and a half days that we spent in the Bay Area during our 2022 Trek.

The substantive sessions of the Trek were varied and impactful, and were made possible in no small part by substantial contributions from numerous alumni of the Law School. Students were fortunate to visit Coinbase’s Mountain View headquarters to learn from legal leaders at the company on all things Coinbase, crypto, and in-house; Plug & Play Tech Center’s Sunnyvale location to learn more about its investment thesis and accelerator programming; and Google’s Moonshot Factory, X, where we heard from lawyers at a number of different Alphabet companies about their lives as in-house counsel and the varied roles that in-house lawyers can have. We were also hosted by Wilson, Sonsini, Goodrich & Rosati and Fenwick & West LLP, where we held sessions featuring lawyers from those firms, alumni from within and outside of those firms, and non-lawyer industry experts on topics such as artificial intelligence, climate tech and renewables, intellectual property, biotech, investing in Silicon Valley, and growth stage companies, as well as general advice on career trajectories and strategies. We further held a young alumni roundtable, where our students got to speak with alumni who graduated in the past five years for intimate, candid discussions about life as junior associates. In total, our students heard from more than forty speakers, including over twenty University of Chicago alumni from various divisions.

The Trek didn’t stop with education, though. Throughout the week students also had the opportunity to network with speakers to learn more from them outside the confines of panel presentations and to grow their networks. We had a networking dinner with Kirkland & Ellis, a closing dinner with all Trek participants, and for the first time hosted an event for admitted students, Trek participants, and alumni to come together to share experiences and recruit the next generation of Law School students. Several speakers and students stayed in touch following the Trek, and this resulted not just in meaningful relationships but also in employment for some students who attended.

More information on the purposes of the Trek is available here, the full itinerary is available here, and one student participant’s reflections on her experience on the Trek are available here.