Prediction vs Hypothesis: In-Depth Comparison

When discussing the difference between prediction and hypothesis, it’s important to understand the distinct meanings and implications of these two terms. Prediction and hypothesis are both used in scientific research and analysis, but they serve different purposes and have different characteristics.

In simple terms, a prediction is a statement or assertion about what will happen in the future based on existing knowledge or observations. It involves making an educated guess or forecast about an outcome or event that has not yet occurred. A prediction is often based on patterns, trends, or correlations identified in data or observations, and it aims to provide insight into what is likely to happen.

On the other hand, a hypothesis is a tentative explanation or proposition that is formulated to explain a specific phenomenon or observed data. It is a proposed explanation or theory that can be tested through scientific methods and experiments. A hypothesis is typically based on prior knowledge, observations, or existing theories, and it aims to provide a possible explanation for a particular phenomenon or set of observations.

While predictions focus on forecasting future events, hypotheses are concerned with explaining and understanding existing phenomena. Predictions can be validated or invalidated by subsequent events or data, whereas hypotheses can be supported or rejected through empirical testing and analysis. In the following sections, we will delve deeper into the characteristics, uses, and examples of predictions and hypotheses in various fields of study.

The Definitions

In the realm of scientific research and analysis, it is crucial to have a clear understanding of the terms “prediction” and “hypothesis.” These terms are often used interchangeably in everyday conversation, but they hold distinct meanings and play different roles in the scientific process. Let’s delve into the definitions of both prediction and hypothesis:

Define Prediction

A prediction, in the context of scientific inquiry, refers to a statement or assertion about a future event or outcome. It is a logical deduction or inference based on existing knowledge, observations, and patterns. Predictions are typically made with the intention of testing their accuracy and validity through empirical evidence.

Predictions are often formulated by researchers or scientists who aim to anticipate the results of an experiment, an observation, or a specific phenomenon. They are based on a comprehensive analysis of available data and previous research findings. Predictions can be either quantitative or qualitative, depending on the nature of the research question and the available information.

For instance, in the field of meteorology, a prediction might involve estimating the likelihood of rainfall in a particular region during a specific time frame. In this case, meteorologists use various atmospheric indicators, historical weather patterns, and mathematical models to make their predictions.

Predictions are essential in scientific research as they help guide experimental design, data collection, and analysis. They serve as a starting point for investigations and provide a framework for evaluating the accuracy of scientific theories and models.

Define Hypothesis

A hypothesis, on the other hand, is a tentative explanation or proposition that seeks to explain a phenomenon or answer a research question. It serves as a starting point for scientific investigations and provides a framework for designing experiments and gathering empirical evidence.

Hypotheses are formulated based on existing knowledge, observations, and theories. They are often derived from previous research findings, logical reasoning, or insights gained from preliminary studies. A hypothesis is a testable statement that can be either supported or refuted through empirical evidence.

Unlike predictions, which focus on specific future outcomes, hypotheses aim to explain the underlying mechanisms or causes behind observed phenomena. They are typically stated in an “if-then” format, suggesting a cause-and-effect relationship between variables.

For example, in the field of psychology, a hypothesis might propose that individuals who receive positive reinforcement for a certain behavior are more likely to repeat that behavior in the future. This hypothesis can then be tested through experiments or observational studies to determine its validity.

Hypotheses play a crucial role in the scientific method as they guide the collection and analysis of data. They provide a framework for researchers to make logical deductions, draw conclusions, and contribute to the existing body of knowledge in their respective fields.

How To Properly Use The Words In A Sentence

When it comes to scientific research and analysis, understanding the distinction between prediction and hypothesis is crucial. While these terms are often used interchangeably, they have distinct meanings and should be used appropriately in a sentence. In this section, we will explore how to effectively use both prediction and hypothesis in a sentence.

How To Use “Prediction” In A Sentence

When using “prediction” (or the verb “predict”) in a sentence, it is important to convey a sense of anticipation or forecasting. Predictions are statements that suggest what may happen in the future based on existing evidence or patterns. Here are a few examples that use the verb form:

- Scientists predict that global temperatures will continue to rise due to increased greenhouse gas emissions.

- Based on historical data, economists predict a recession in the next fiscal year.

- The weather forecast predicts heavy rainfall in the region tomorrow.

As you can see, “predict” and “prediction” indicate an expected outcome or result based on logical reasoning, analysis, or observation. They are often employed in scientific, economic, or weather-related contexts.

How To Use “Hypothesis” In A Sentence

Unlike a prediction, a hypothesis is a proposed explanation or theory that is subject to testing and evaluation. It is an educated guess or assumption that serves as the foundation for scientific inquiry. Here are a few examples of how to use “hypothesis” in a sentence:

- The researcher formulated a hypothesis to explain the observed phenomenon.

- Before conducting the experiment, the scientists developed a hypothesis to guide their investigation.

- The hypothesis suggested that increased exposure to sunlight would enhance plant growth.

As demonstrated in these examples, a hypothesis is typically used in scientific contexts to propose a tentative explanation for a phenomenon or to guide the process of experimentation and observation. It is an essential component of the scientific method and plays a crucial role in advancing knowledge and understanding.

More Examples Of Prediction & Hypothesis Used In Sentences

In this section, we will explore various examples of how the terms “prediction” and “hypothesis” can be used in sentences. These examples illustrate the contexts in which each term is commonly employed.

Examples Of Using Prediction In A Sentence

- Based on the current market trends, our prediction is that stock prices will soar in the next quarter.

- She made a prediction that the new marketing campaign would significantly boost sales.

- His accurate prediction of the election outcome impressed the political analysts.

- The weather forecast predicts heavy rainfall tomorrow, so be prepared.

- Our prediction is that the demand for renewable energy will continue to rise in the coming years.

- The economist’s prediction of an economic recession was met with skepticism by some experts.

- Despite the odds, his prediction of winning the championship turned out to be correct.

- In her prediction, she foresaw a decline in customer satisfaction due to poor product quality.

- The scientist’s prediction that the experiment would yield groundbreaking results proved to be accurate.

- Based on the data analysis, the prediction is that the company’s revenue will double by the end of the year.

Examples Of Using Hypothesis In A Sentence

- The researcher formulated a hypothesis to test the effects of the new drug on cancer cells.

- His hypothesis suggests that increased exposure to sunlight leads to higher vitamin D levels.

- Before conducting the experiment, the scientists developed a hypothesis to guide their research.

- According to the hypothesis, the higher the temperature, the faster the chemical reaction will occur.

- She proposed a hypothesis that lack of sleep negatively impacts cognitive performance.

- The hypothesis states that people who exercise regularly have lower risks of developing heart disease.

- Through careful observation and analysis, the researcher confirmed his hypothesis about plant growth.

- The hypothesis that increased stress levels lead to a weakened immune system has been widely studied.

- Scientists are currently testing a hypothesis that suggests a link between certain foods and allergies.

- Her hypothesis regarding the impact of social media on mental health sparked a lively debate among experts.

Common Mistakes To Avoid

When it comes to scientific research and analysis, it is crucial to understand the distinction between prediction and hypothesis. Unfortunately, many individuals mistakenly use these terms interchangeably, leading to confusion and potentially flawed conclusions. In order to prevent such errors and ensure accurate scientific discourse, it is important to be aware of the common mistakes made when using prediction and hypothesis incorrectly.

1. Failing To Recognize The Fundamental Difference

One of the most prevalent mistakes is the failure to recognize the fundamental difference between prediction and hypothesis. While both concepts are integral to scientific inquiry, they serve distinct purposes and involve different levels of certainty.

A prediction is a statement or claim about a future event or outcome based on existing knowledge or observations. It is typically derived from patterns or trends identified in data and aims to forecast what is likely to happen. Predictions are often expressed in probabilistic terms, acknowledging the inherent uncertainty associated with future events.

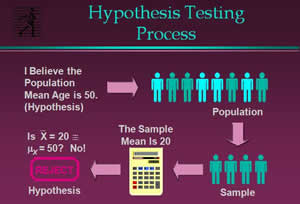

On the other hand, a hypothesis is a proposed explanation or tentative answer to a research question. It is formulated before the data that will test it are gathered, and it serves as a starting point for scientific investigation. Hypotheses are testable and falsifiable, allowing researchers to either support or reject them based on empirical evidence.

2. Using Prediction As A Substitute For Hypothesis

Another common mistake is using prediction as a substitute for hypothesis. This error arises when individuals make assumptions about the cause-and-effect relationship between variables without providing a clear rationale or theoretical framework.

For instance, stating that “increased consumption of vitamin C will lead to a decrease in the risk of developing a cold” is a prediction, not a hypothesis. In this case, the statement lacks the necessary explanation of the underlying mechanism or the specific factors that would support or refute the claim.

A hypothesis, in contrast, would involve formulating a more comprehensive statement such as “increased consumption of vitamin C enhances the immune system, leading to a decrease in the risk of developing a cold.” This hypothesis provides a theoretical basis for the expected relationship between vitamin C intake and cold prevention, allowing for further investigation and testing.

3. Overlooking The Role Of Experimentation

One crucial aspect that distinguishes a hypothesis from a prediction is the involvement of experimentation. A hypothesis is typically tested through systematic observation, data collection, and analysis, whereas a prediction focuses on making forecasts without necessarily requiring empirical evidence.

It is a common mistake to overlook the importance of experimentation and treat predictions as equivalent to hypotheses. While predictions can be valuable in guiding research and generating hypotheses, they should not be conflated with the rigorous scientific process of hypothesis testing.

4. Ignoring The Role Of Falsifiability

Falsifiability is a key criterion for a hypothesis, but it does not hold the same significance for predictions. A hypothesis must be formulated in a way that allows for the possibility of being proven false through empirical evidence. If a hypothesis cannot be disproven or tested, it lacks scientific validity.

However, predictions do not necessarily need to be falsifiable. They can be based on probabilities, trends, or observations without the requirement of being disproven. Predictions can be revised or refined based on new information, while hypotheses are subject to the possibility of being rejected or supported by empirical evidence.

5. Neglecting The Importance Of Context

Lastly, it is essential to consider the context in which predictions and hypotheses are used. The appropriate usage of these terms may vary depending on the field of study, research design, or specific scientific discipline.

For example, in the social sciences, predictions are often used to forecast human behavior or societal trends, whereas hypotheses are employed to test theoretical frameworks or explanatory models. Understanding these disciplinary nuances and context-specific conventions is crucial to avoid the misinterpretation and confusion that arise when prediction and hypothesis are used interchangeably.

By being mindful of these common mistakes, researchers and individuals engaging in scientific discourse can ensure accurate and effective communication, fostering a more robust understanding of the scientific method and its applications.

Context Matters

When it comes to scientific inquiry and research, the choice between prediction and hypothesis is not always straightforward. The context in which these terms are used plays a crucial role in determining which one is more appropriate. Understanding this context is essential for researchers and scientists to effectively communicate their ideas and findings. Let’s delve into the intricacies of this choice and explore some examples of different contexts where the preference between prediction and hypothesis might shift.

1. Experimental Research

In experimental research, where scientists conduct controlled experiments to test their theories, the choice between prediction and hypothesis is often influenced by the nature of the study. In this context, a hypothesis is typically formulated as an educated guess or a tentative explanation for a phenomenon. It serves as the starting point for the research, guiding the experimental design and data analysis. For example, in a study investigating the effects of a new drug on blood pressure, a hypothesis could be formulated as follows:

Hypothesis: The administration of Drug X will lead to a significant decrease in blood pressure compared to a placebo.

On the other hand, predictions in experimental research are often specific statements about the expected outcomes of the experiment. They are derived from the hypothesis and are used to guide data collection and analysis. For instance, a prediction based on the above hypothesis could be:

Prediction: Participants who receive Drug X will show a mean decrease in systolic blood pressure of at least 10 mmHg compared to those who receive the placebo.

Therefore, in the context of experimental research, the choice between prediction and hypothesis depends on whether the researcher is formulating an initial explanation or making specific statements about the expected outcomes.
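Once data are in, the Drug X prediction can be checked mechanically. The sketch below is a minimal illustration under stated assumptions: the readings are invented, each participant is recorded as a hypothetical (before, after) pair of systolic pressures, and the helper names `mean_decrease` and `prediction_holds` are ours, not part of any standard tool. It only checks whether the observed difference in mean decrease reaches the predicted 10 mmHg. Judging the hypothesis itself would also require a significance test.

```python
from statistics import mean

# Invented readings for illustration only: (before, after) systolic
# blood pressure in mmHg for each participant.
drug_x = [(150, 136), (145, 133), (160, 147), (155, 141)]
placebo = [(148, 146), (152, 149), (158, 155), (150, 149)]


def mean_decrease(pairs):
    """Average drop in systolic pressure (before minus after), in mmHg."""
    return mean(before - after for before, after in pairs)


def prediction_holds(drug, control, threshold_mmhg=10):
    """Return True if the drug group's mean decrease exceeds the control
    group's mean decrease by at least threshold_mmhg."""
    return mean_decrease(drug) - mean_decrease(control) >= threshold_mmhg


if __name__ == "__main__":
    print(mean_decrease(drug_x), mean_decrease(placebo))
    print(prediction_holds(drug_x, placebo))
```

With these invented numbers the Drug X group drops by 13.25 mmHg on average and the placebo group by 2.25 mmHg, so `prediction_holds(drug_x, placebo)` returns `True`. The prediction passes or fails as a single yes/no check. The hypothesis behind it remains open to further testing.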

2. Observational Studies

In observational studies, where researchers observe and analyze existing data without intervening or manipulating variables, the choice between prediction and hypothesis may vary. In this context, hypotheses are often used to propose associations or relationships between variables. For example, in a study examining the relationship between physical activity and mental health, a hypothesis could be:

Hypothesis: There is a positive correlation between physical activity levels and mental well-being.

On the other hand, predictions in observational studies are often based on previous research or theoretical frameworks. They are specific statements about the expected outcomes or patterns in the data. For instance, a prediction based on the above hypothesis could be:

Prediction: Individuals who engage in regular physical activity will report higher levels of subjective well-being compared to those who lead a sedentary lifestyle.

In the context of observational studies, the choice between prediction and hypothesis depends on whether the researcher is proposing a general association between variables or making specific predictions based on existing knowledge.

3. Theoretical Research

In theoretical research, where scientists develop and refine theoretical frameworks or models, the choice between prediction and hypothesis may take a different form. In this context, hypotheses are often used to propose theoretical explanations or mechanisms. For example, in a study exploring the mechanisms of climate change, a hypothesis could be:

Hypothesis: Changes in greenhouse gas concentrations lead to alterations in the Earth’s temperature through the greenhouse effect.

On the other hand, predictions in theoretical research are often derived from the established theories or models. They are specific statements about the expected outcomes or patterns in the system being studied. For instance, a prediction based on the above hypothesis could be:

Prediction: The increase in greenhouse gas emissions will raise global average temperature by at least 2 degrees Celsius over the next century.

In the context of theoretical research, the choice between prediction and hypothesis depends on whether the researcher is proposing a theoretical explanation or making specific predictions based on established theories or models.

Exceptions To The Rules

In most cases, the rules for using prediction and hypothesis provide a solid framework for scientific inquiry and logical reasoning. However, there are a few exceptional situations where these rules might not apply in the same way. Let’s explore some of these exceptions and delve into brief explanations and examples for each case.

1. Historical Analysis

In the realm of historical analysis, the application of prediction and hypothesis can be challenging due to the lack of controlled experiments and the inability to directly test hypotheses. Instead, historians often rely on interpretation and inference to understand past events.

For example, when studying ancient civilizations, historians may propose hypotheses to explain the rise and fall of empires based on available evidence. However, these hypotheses are often subject to interpretation and can be influenced by personal biases. While predictions can be made about potential outcomes, they cannot be tested in the same way as in experimental sciences.

2. Complex Systems

Complex systems, such as climate patterns, ecosystems, or the human brain, present another exception to the strict application of prediction and hypothesis. These systems involve numerous interconnected variables and intricate feedback loops, making it difficult to formulate precise predictions or testable hypotheses.

For instance, predicting the exact trajectory of a hurricane or the behavior of a specific species within an ecosystem is a complex task due to the multitude of factors at play. While scientists can develop models and make predictions based on existing data, the inherent complexity of these systems often leads to a margin of error and a level of uncertainty.

3. Unpredictable Events

Some events are inherently unpredictable, rendering the traditional use of prediction and hypothesis ineffective. These events are often characterized by randomness or chaotic behavior, which makes it difficult or impossible to forecast outcomes accurately or to formulate testable hypotheses.

Consider, for instance, the stock market. Despite the use of sophisticated algorithms and mathematical models, predicting stock prices with absolute certainty remains elusive. The interplay of various factors, including global events, investor sentiment, and market psychology, makes it challenging to establish reliable predictions or testable hypotheses.

4. Creative And Artistic Endeavors

In creative and artistic endeavors, the rigid application of prediction and hypothesis may hinder the freedom of expression and innovation. Artists, writers, and musicians often rely on intuition, inspiration, and experimentation to create their works.

For example, a painter may not be able to predict the exact outcome of their artistic process or formulate a hypothesis about the emotional impact of their artwork. Instead, they explore different techniques, colors, and compositions, allowing their creativity to guide them. While some predictions or hypotheses may emerge during the creative process, they are often secondary to the expressive and subjective nature of the art form.

While prediction and hypothesis serve as valuable tools in scientific inquiry and logical reasoning, there are exceptions where their application may not be as straightforward. Historical analysis, complex systems, unpredictable events, and creative endeavors all present unique challenges that deviate from the traditional use of prediction and hypothesis. Recognizing these exceptions allows us to appreciate the diversity of knowledge and the multifaceted nature of human endeavors.

Understanding the distinction between prediction and hypothesis is crucial for any individual seeking to engage in scientific inquiry or critical thinking. While both concepts involve making educated guesses about the future or unknown, their underlying principles and applications differ significantly.

A prediction is a specific statement that anticipates a certain outcome based on existing knowledge or patterns. It is often derived from empirical evidence or logical reasoning and aims to forecast a particular event or phenomenon. Predictions are commonly used in fields such as meteorology, economics, and sports analytics to make informed decisions and plan for the future.

On the other hand, a hypothesis is a tentative explanation or proposition that seeks to explain a phenomenon or answer a research question. It is formulated based on preliminary observations, prior knowledge, and logical reasoning. Hypotheses serve as the foundation for scientific investigations, guiding the design of experiments and data analysis.

While predictions focus on the outcome of a specific event or situation, hypotheses aim to provide a broader understanding of the underlying mechanisms or causes. Predictions are often more straightforward and can be directly tested or validated through observation or experimentation. In contrast, hypotheses require rigorous testing and analysis to evaluate their validity and support or refute them.

Overall, predictions and hypotheses are both valuable tools in different contexts. Predictions help us make informed decisions and anticipate future outcomes, while hypotheses drive scientific exploration and contribute to the advancement of knowledge. By recognizing the distinction between these concepts, we can enhance our critical thinking skills and approach problem-solving with greater clarity and precision.

Shawn Manaher

Shawn Manaher is the founder and creative force behind GrammarBeast.com. A seasoned entrepreneur and language enthusiast, he is dedicated to making grammar and spelling both fun and accessible. Shawn believes in the power of clear communication and is passionate about helping people master the intricacies of the English language.


© 2024 GrammarBeast.com - All Rights Reserved.


Science Struck

What’s the Real Difference Between Hypothesis and Prediction?

Both hypothesis and prediction fall in the realm of guesswork, but they rest on different assumptions. This Buzzle article elaborates on the differences between the two.


“There is no justifiable prediction about how the hypothesis will hold up in the future; its degree of corroboration simply is a historical statement describing how severely the hypothesis has been tested in the past.” ― Robert Nozick, American author, professor, and philosopher

A lot of people think that a hypothesis is the same as a prediction, but this is not true. They are different terms, though both can appear within the same example. Both are used in statistics and in a variety of fields such as finance, mathematics, science (widely), sports, and psychology. A hypothesis may be a prediction, but the reverse is not necessarily true.

Also, a prediction may or may not agree with the hypothesis. Confused? Don’t worry: the hypothesis vs. prediction comparison below, with examples, should clear up your doubts about both.

- A hypothesis is a kind of guess or proposition regarding a situation.

- It can be called an intelligent guess or prediction, and it needs to be tested using appropriate methods.

- Formulating a hypothesis is an important step in experimental design, because it helps predict what might happen in the course of the research.

- The strength of the statement depends on how well it holds up when experiments are conducted.

- It is usually written in the ‘If-then-because’ format.

- For example: ‘If Susan’s mood depends on the weather, then she will be happy today, because it is bright and sunny outside.’ Here, Susan’s mood is the dependent variable and the weather is the independent variable. Thus, a hypothesis helps establish a relationship.

- A prediction is also a type of guess; in fact, it is guesswork in the true sense of the word.

- It is not an educated guess in the way a hypothesis is, although it is usually based on established facts.

- When making a prediction for any application, you have to take all current observations into account.

- It can be tested, but only once: the strength of the statement depends solely on whether the predicted event occurs.

- It is harder to define and comes in many variations, which is probably why it is often mistaken for a fictional guess or forecast.

- For example: ‘He is studying very hard; he might score an A.’ Here, we predict that because the student is working hard, he might score well. The statement is based on an observation and does not establish any relationship between variables.

Factors of Differentiation

| Hypothesis | Prediction |
|---|---|
| It has a longer structure: a situation can be interpreted through different kinds of hypothesis (null, alternative, research hypothesis, etc.), and each may need a different method of testing. | It usually has a shorter structure, since it can be a simple opinion about what you think might happen. |
| It contains independent and dependent variables and helps establish a relationship between them, which can then be analyzed through different experimental techniques. | It does not contain any variables or relationships, and the statement is not analyzed in any elaborate way. Since it is a straightforward probability, it is tested once and then done with. |
| It can go through multiple testing stages, and its story does not end with the testing phase: tomorrow someone else could challenge your hypothesis with contrary evidence. It has a longer time span. | As mentioned in the previous point, it can be checked only once. You predict something; if it occurs, your statement is right, and if it does not, your statement is wrong. That’s it, end of story. |
| It is based on facts, and the results are recorded and used in science and other applications. It is a speculative, testable, educated guess, but it is certainly not fictional. | Even though it is based on observations and existing facts, it is linked with forecasting and fiction, because you are purely guessing the outcome and there may or may not be scientific backing. The person making a prediction may or may not have knowledge of the problem, so the prediction may exist only in a fictional context. |

♦ Consider the statement, ‘If I add some chili powder, the pasta may become spicy’. This is a hypothesis, and a testable one. You can keep adding a pinch of chili powder, then a spoonful, then two spoonfuls, and so on. The dish may become spicier or more pungent, or there may be no change at all. In short, the amount of chili powder is the independent variable, and the spiciness of the pasta is the dependent variable, which is expected to change as chili powder is added. The statement thus establishes a relationship between the two variables that can be analyzed, and you will get a range of results when the test is repeated. Your hypothesis may even be challenged tomorrow.

♦ Consider the statement, ‘Robert has longer legs, he may run faster’. This is just a prediction. You may have read somewhere that people with long legs tend to run faster. It may or may not be true. What is important here is ‘Robert’. You are talking only of Robert’s legs, so you will test if he runs faster. If he does, your prediction is true, if he doesn’t, your prediction is false. No more testing.

♦ Consider the statement, ‘If you eat chocolates, you may get acne’. This is a simple hypothesis, based on facts, yet it still needs to be tested. It can be tested on a number of people, and it may turn out to be true or false. The point is that it defines a relationship between chocolate and acne; that relationship can be analyzed and the results recorded. Tomorrow, someone might propose an alternative hypothesis that chocolate does not cause acne, which would need to be tested in turn, and so on. A hypothesis is thus a proposed reason why something happens.

♦ Consider the statement, ‘The sky is overcast, it may rain today’. This is a simple guess, based on the observation that it often rains when the sky is overcast. It may not even be testable in any controlled way, since the sky can be overcast now and clear the next minute. If it does rain, you have predicted correctly; if it does not, you are wrong. There is no further analysis or questioning.

Both hypotheses and predictions need to be clearly structured so that further analysis of the problem is easier. Remember that the key difference between the two is how the statements are tested. Also, neither is better than the other; that depends entirely on the application at hand.


Key Differences

Difference Between Hypothesis and Prediction

Due to a lack of knowledge, many people confuse hypothesis with prediction, which is a mistake, as the two are entirely different. Prediction is the forecasting of future events, sometimes based on evidence and sometimes on a person’s instinct or gut feeling. The article below elaborates on the difference between hypothesis and prediction.

Comparison Chart

| Basis for Comparison | Hypothesis | Prediction |
|---|---|---|
| Meaning | A proposed explanation for an observable event, made on the basis of established facts, as an introduction to further investigation. | A statement that tells or estimates something that will occur in the future. |
| What is it? | A tentative supposition that can be tested through scientific methods. | A declaration made beforehand about what is expected to happen next in a sequence of events. |
| Guess | Educated guess | Pure guess |
| Based on | Facts and evidence | May or may not be based on facts or evidence |
| Explanation | Yes | No |
| Formulation | Takes a long time | Takes comparatively little time |
| Describes | A phenomenon, which might be a future or past event | A future event |
| Relationship | States a causal relationship between variables | Does not state a relationship between variables |

Definition of Hypothesis

In simple terms, a hypothesis is an assumption that can be proved or disproved. For the purpose of research, a hypothesis is defined as a predictive statement that can be tested and verified using the scientific method. By testing the hypothesis, the researcher can make probability statements about a population parameter. The objective of a hypothesis is to find the solution to a given problem.

A hypothesis is a proposition that is put to the test to ascertain its validity. It states the relationship between an independent variable and a dependent variable. The characteristics of a hypothesis are as follows:

- It should be clear and precise.

- It should be stated simply.

- It must be specific.

- It should correlate variables.

- It should be consistent with most known facts.

- It should be capable of being tested.

- It must explain what it claims to explain.

Definition of Prediction

A prediction is a statement that forecasts a future event. It may or may not be based on knowledge and experience; it can be a pure guess based on a person’s instinct. It is called an informed guess when it comes from a person who has ample subject knowledge and who uses accurate data and logical reasoning to make it.

Regression analysis is one statistical technique used for making predictions.
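To make the regression idea concrete, here is a minimal sketch of simple linear regression by ordinary least squares, written in plain Python. The function names `fit_line` and `predict` and the advertising data are hypothetical, chosen only for illustration; a real analysis would usually rely on a statistics library and check how well the line fits before trusting a forecast.

```python
# A minimal sketch of prediction with simple linear regression (ordinary
# least squares). The data below are hypothetical, for illustration only.


def fit_line(xs: list[float], ys: list[float]) -> tuple[float, float]:
    """Return (slope, intercept) of the least-squares line y = slope * x + intercept."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need at least two paired observations")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Slope = sum of cross-deviations / sum of squared x-deviations.
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("x values must not all be equal")
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


def predict(slope: float, intercept: float, x: float) -> float:
    """Forecast y for a new x using the fitted line."""
    return slope * x + intercept


if __name__ == "__main__":
    # Hypothetical monthly advertising spend (x) and sales (y).
    spend = [1.0, 2.0, 3.0, 4.0, 5.0]
    sales = [3.0, 5.0, 7.0, 9.0, 11.0]
    m, b = fit_line(spend, sales)
    print(f"slope={m}, intercept={b}")                 # slope=2.0, intercept=1.0
    print("predicted sales at spend 6:", predict(m, b, 6.0))  # 13.0
```

The fitted line only extrapolates the pattern in past data; it says nothing about why sales rise with spending, which matches the article's point that a prediction need not carry an explanation.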

In many multinational corporations, futurists (professional forecasters) are well paid to make predictions about possible events, opportunities, threats, or risks. To do so, futurists study past and current events to forecast future occurrences. Prediction also plays an important role in statistics, where it is used to draw inferences about a population parameter.

Key Differences Between Hypothesis and Prediction

The difference between hypothesis and prediction can be drawn clearly on the following grounds:

- A proposed explanation for an observable occurrence, based on established facts, as an introduction to further study, is known as a hypothesis. A statement that tells or estimates something that will occur in the future is known as a prediction.

- A hypothesis is a tentative supposition that can be tested by scientific methods. On the contrary, a prediction is a declaration made in advance about what is expected to happen next in a sequence of events.

- While the hypothesis is an intelligent guess, the prediction is a wild guess.

- A hypothesis is always supported by facts and evidence. By contrast, predictions are usually based on the knowledge and experience of the person making them, but not always.

- A hypothesis always has an explanation or reason, whereas a prediction does not offer any explanation.

- Hypothesis formulation takes a long time. Conversely, making predictions about a future happening does not take much time.

- A hypothesis describes a phenomenon, which may be a future or a past event. A prediction, by contrast, always anticipates whether a certain event will or will not happen in the future.

- The hypothesis states the relationship between independent variable and the dependent variable. On the other hand, prediction does not state any relationship between variables.

To sum up, a prediction is merely a conjecture about the future, while a hypothesis is a proposition put forward as an explanation. Anyone can make a prediction, whether or not they have knowledge of the particular field. A hypothesis, on the other hand, is made by a researcher to find the answer to a certain question, and it has to pass various tests to become a theory.


Hypothesis vs. Prediction

What's the Difference?

Hypothesis and prediction are both important components of the scientific method, but they serve different purposes. A hypothesis is a proposed explanation or statement that can be tested through experimentation or observation. It is based on prior knowledge, observations, or theories and is used to guide scientific research. On the other hand, a prediction is a specific statement about what will happen in a particular situation or experiment. It is often derived from a hypothesis and serves as a testable outcome that can be confirmed or refuted through data analysis. While a hypothesis provides a broader framework for scientific inquiry, a prediction is a more specific and measurable expectation of the results.

| Attribute | Hypothesis | Prediction |
|---|---|---|
| Definition | A proposed explanation or answer to a scientific question | An educated guess about what will happen in a specific situation or experiment |
| Role | Forms the basis for scientific investigation and experimentation | Helps guide the design and conduct of experiments |
| Testability | Can be tested through experiments or observations | Can be tested to determine its accuracy or validity |
| Scope | Broader in nature, often explaining a phenomenon or relationship | Specific to a particular situation or experiment |
| Formulation | Based on prior knowledge, observations, and data analysis | Based on prior knowledge, observations, and data analysis |
| Outcome | Can be supported or rejected based on evidence | Can be confirmed or disproven based on the observed results |
| Level of Certainty | Less certain than a theory, but can become more certain with supporting evidence | Less certain than a theory, but can become more certain with supporting evidence |

Further Detail

Introduction

When it comes to scientific research and inquiry, two important concepts that often come into play are hypothesis and prediction. Both of these terms are used to make educated guesses or assumptions about the outcome of an experiment or study. While they share some similarities, they also have distinct attributes that set them apart. In this article, we will explore the characteristics of hypothesis and prediction, highlighting their differences and similarities.

A hypothesis is a proposed explanation or statement that can be tested through experimentation or observation. It is typically formulated based on existing knowledge, observations, or theories. A hypothesis is often used as a starting point for scientific research, as it provides a framework for investigation and helps guide the research process.

One of the key attributes of a hypothesis is that it is testable. This means that it can be checked against empirical evidence and observations to determine its validity. A hypothesis should be specific and measurable, allowing researchers to design experiments or gather data to either support or refute it.

Another important aspect of a hypothesis is that it is falsifiable. This means that it is possible to prove the hypothesis wrong through experimentation or observation. Falsifiability is crucial in scientific research, as it ensures that hypotheses can be objectively tested and evaluated.

Hypotheses can be classified into two main types: null hypotheses and alternative hypotheses. A null hypothesis states that there is no significant relationship or difference between variables, while an alternative hypothesis proposes the existence of a relationship or difference. These two types of hypotheses are often used in statistical analysis to draw conclusions from data.
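Because null and alternative hypotheses are usually evaluated with a statistical test, a short sketch may help. The example below uses a permutation test, which is one of several possible choices (a t-test is a common alternative, and SciPy ships a ready-made `permutation_test` in `scipy.stats`). The function name `permutation_test` here is our own, and the one-sided alternative and the pest-count data are hypothetical.

```python
# A minimal sketch of testing a null hypothesis with a permutation test.
# H0 (null): the treatment makes no difference to the measured outcome.
# H1 (alternative): the treated group has a lower mean than the control group.
# All data are hypothetical.
import random
from statistics import mean


def permutation_test(control: list[float], treated: list[float],
                     n_iter: int = 10_000, seed: int = 0) -> float:
    """Estimate a one-sided p-value for H1: mean(treated) < mean(control)."""
    rng = random.Random(seed)
    observed = mean(control) - mean(treated)
    pooled = control + treated          # new list; the inputs are not modified
    n_control = len(control)
    count = 0
    for _ in range(n_iter):
        # Under H0 the group labels are arbitrary, so reassign them at random.
        rng.shuffle(pooled)
        diff = mean(pooled[:n_control]) - mean(pooled[n_control:])
        if diff >= observed:
            count += 1
    # The add-one correction keeps the estimate strictly above zero.
    return (count + 1) / (n_iter + 1)


if __name__ == "__main__":
    control = [12, 15, 11, 14, 13, 16, 12, 15]  # hypothetical pest counts, untreated plots
    treated = [7, 9, 6, 8, 10, 7, 8, 9]         # hypothetical pest counts, treated plots
    p = permutation_test(control, treated)
    print(f"one-sided p-value ~ {p:.4f}")
```

A small p-value (conventionally below 0.05) is read as evidence against the null hypothesis and in favour of the alternative; a large one means the data give no reason to reject the null.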

In summary, a hypothesis is a testable and falsifiable statement that serves as a starting point for scientific research. It is specific, measurable, and can be either a null or alternative hypothesis.

While a hypothesis is a proposed explanation or statement, a prediction is a specific outcome or result that is anticipated based on existing knowledge or theories. Predictions are often made before conducting an experiment or study and serve as a way to anticipate the expected outcome.

Unlike a hypothesis, a prediction is not necessarily testable or falsifiable on its own. Instead, it is used to guide the research process and provide a basis for comparison with the actual results obtained from the experiment or study. Predictions can be based on previous research, theoretical models, or logical reasoning.

One of the key attributes of a prediction is that it is specific and precise. It should clearly state the expected outcome or result, leaving little room for ambiguity. This allows researchers to compare the prediction with the actual results and evaluate the accuracy of their anticipated outcome.

Predictions can also be used to generate hypotheses. By making a prediction and comparing it with the actual results, researchers can identify discrepancies or unexpected findings. These observations can then be used to formulate new hypotheses and guide further research.

In summary, a prediction is a specific anticipated outcome or result that is not necessarily testable or falsifiable on its own. It serves as a basis for comparison with the actual results obtained from an experiment or study and can be used to generate new hypotheses.

Similarities

While hypotheses and predictions have distinct attributes, they also share some similarities in the context of scientific research. Both hypotheses and predictions are based on existing knowledge, observations, or theories. They are both used to make educated guesses or assumptions about the outcome of an experiment or study.

Furthermore, both hypotheses and predictions play a crucial role in the scientific method. They provide a framework for research, guiding the design of experiments, data collection, and analysis. Both hypotheses and predictions are subject to evaluation and revision based on empirical evidence and observations.

Additionally, both hypotheses and predictions can be used to generate new knowledge and advance scientific understanding. By testing hypotheses and comparing predictions with actual results, researchers can gain insights into the relationships between variables, uncover new phenomena, or challenge existing theories.

Overall, while hypotheses and predictions have their own unique attributes, they are both integral components of scientific research and inquiry.

In conclusion, hypotheses and predictions are important concepts in scientific research. While a hypothesis is a testable and falsifiable statement that serves as a starting point for investigation, a prediction is a specific anticipated outcome or result that guides the research process. Hypotheses are specific, measurable, and can be either null or alternative, while predictions are precise and serve as a basis for comparison with actual results.

Despite their differences, hypotheses and predictions share similarities in terms of their reliance on existing knowledge, their role in the scientific method, and their potential to generate new knowledge. Both hypotheses and predictions contribute to the advancement of scientific understanding and play a crucial role in the research process.

By understanding the attributes of hypotheses and predictions, researchers can effectively formulate research questions, design experiments, and analyze data. These concepts are fundamental to the scientific method and are essential for the progress of scientific research and inquiry.


Understanding Hypotheses and Predictions

Hypotheses and predictions are different components of the scientific method. The scientific method is a systematic process that helps minimize bias in research and begins by developing good research questions.

Research Questions

Descriptive research questions are based on observations made in previous research or in passing. This type of research question often quantifies those observations. For example, while out bird watching, you notice that a certain species of sparrow makes all its nests with the same material: grasses. A descriptive research question would be “On average, how much grass is used to build sparrow nests?”

Descriptive research questions lead to causal questions. This type of research question seeks to understand why we observe certain trends or patterns. If we return to our observation about sparrow nests, a causal question would be “Why are the nests of sparrows made with grasses rather than twigs?”

In simple terms, a hypothesis is the answer to your causal question. A hypothesis should be based on a strong rationale that is usually supported by background research. From the question about sparrow nests, you might hypothesize, “Sparrows use grasses in their nests rather than twigs because grasses are the more abundant material in their habitat.” This abundance hypothesis might be supported by your prior knowledge about the availability of nest building materials (i.e. grasses are more abundant than twigs).

On the other hand, a prediction is the outcome you would observe if your hypothesis were correct. Predictions are often written in the form of “if, and, then” statements, as in, “if my hypothesis is true, and I were to do this test, then this is what I will observe.” Following our sparrow example, you could predict that, “If sparrows use grass because it is more abundant, and I compare areas that have more twigs than grasses available, then, in those areas, nests should be made out of twigs.” A more refined prediction might alter the wording so as not to repeat the hypothesis verbatim: “If sparrows choose nesting materials based on their abundance, then when twigs are more abundant, sparrows will use those in their nests.”
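The "if, and, then" structure can be mirrored in a few lines of code: the hypothesis becomes a rule, the rule yields a prediction for each site, and each prediction is compared with what was observed. This is a hypothetical sketch; the `Site` fields, the percent-cover measure, and the site data are invented for illustration.

```python
# A minimal sketch of deriving a prediction from the abundance hypothesis
# and comparing it with observations. Site data are hypothetical.
from dataclasses import dataclass


@dataclass
class Site:
    name: str
    grass_abundance: float   # hypothetical measure, e.g. percent ground cover of grasses
    twig_abundance: float    # hypothetical measure, e.g. percent ground cover of twigs
    observed_material: str   # material most sparrow nests at the site were made of


def predicted_material(site: Site) -> str:
    """If sparrows choose the more abundant material, which should they use here?"""
    # Ties are resolved in favour of grass in this sketch.
    return "grass" if site.grass_abundance >= site.twig_abundance else "twigs"


def check_prediction(sites: list[Site]) -> tuple[int, int]:
    """Return (matches, total): how many sites agree with the prediction."""
    matches = sum(predicted_material(s) == s.observed_material for s in sites)
    return matches, len(sites)


if __name__ == "__main__":
    sites = [
        Site("meadow", 70, 10, "grass"),
        Site("woodland edge", 20, 60, "twigs"),
        Site("shrubland", 15, 55, "grass"),
    ]
    matches, total = check_prediction(sites)
    print(f"{matches} of {total} sites match the prediction")  # 2 of 3 sites ...
```

A site that does not match, like the hypothetical shrubland, is the kind of unexpected result worth investigating rather than discarding.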

As you can see, the terms hypothesis and prediction are different and distinct even though, sometimes, they are incorrectly used interchangeably.

Let us take a look at another example:

Causal Question: Why are there fewer asparagus beetles when asparagus is grown next to marigolds?

Hypothesis: Marigolds deter asparagus beetles.

Prediction: If marigolds deter asparagus beetles, and we grow asparagus next to marigolds, then we should find fewer asparagus beetles when asparagus plants are planted with marigolds.

A final note

It is exciting when the outcome of your study or experiment supports your hypothesis. However, it can be equally exciting when it does not. There are many reasons why you might get an unexpected result, and you need to think about why it occurred. Maybe there was a problem with your methods, but on the flip side, maybe you have just discovered a new line of evidence that can be used to develop another experiment or study.


What is the Difference Between Hypothesis and Prediction

The main difference between hypothesis and prediction is that the hypothesis proposes an explanation to something which has already happened whereas the prediction proposes something that might happen in the future.

Hypothesis and prediction are two significant concepts that give possible explanations for various occurrences or phenomena. As a result, they help people draw conclusions that assist in formulating new theories, which can shape future advances in human civilization. Thus, both terms are common in science, research, and logic. In addition, making a prediction requires evidence or observation, whereas a hypothesis can be formulated on the basis of limited evidence.


What is a Hypothesis

By definition, a hypothesis is a supposition or proposed explanation made on the basis of limited evidence as a starting point for further investigation. In brief, a hypothesis is a proposed explanation for a phenomenon. Because it rests on the limited evidence, facts, or information available about the underlying causes of the problem, it has yet to be proven correct. It can, however, be tested further by experimentation.

The term hypothesis is thus used more often in science and research than in general usage. In science, it is called a scientific hypothesis, and a scientific hypothesis has to be tested by the scientific method. Moreover, scientists usually base scientific hypotheses on previous observations that cannot be explained by existing scientific theories.

Figure 1: A Hypothesis on Colonial Flagellate

In research studies, a hypothesis is built around independent and dependent variables. Such a hypothesis, known as a ‘working hypothesis’, is provisionally accepted as a basis for further research and often serves as a conceptual framework in qualitative research. Based on the facts gathered in research, the hypothesis links the different variables, and so it serves as a source for a more concrete scientific explanation.

Hence, a theory can be formulated from the hypothesis to guide the investigation of the problem. A strong hypothesis can generate effective predictions through reasoning: it can predict the outcome of a laboratory experiment or the observation of a natural phenomenon. For this reason, a hypothesis is known as an ‘educated guess’.

What is a Prediction

A prediction can be defined as a thing predicted or a forecast. Hence, a prediction is a statement about something that might happen in the future. Thus, one can guess as to what might happen based on the existing evidence or observations.

In the general context, although the future is uncertain and difficult to predict, one can draw conclusions about what might happen based on observations of the present. This can help avoid negative consequences in the future when there are dangerous occurrences in the present.

Moreover, there is a link between hypothesis and prediction. A strong hypothesis will enable possible predictions. This link between a hypothesis and a prediction can be clearly observed in the field of science.

Figure 2: Weather Predictions

Hence, in scientific and research studies, a prediction specifies an outcome that can be used to test one’s hypothesis. The prediction is the outcome one would observe if the hypothesis were supported by experiment. Predictions are often written in the form of “if, then” statements; for example, “if my hypothesis is true, then this is what I will observe.”

Relationship Between Hypothesis and Prediction

- Based on a hypothesis, one can create a prediction.

- Also, a hypothesis enables predictions through deductive reasoning.

- Furthermore, the prediction is the outcome that would be observed if the hypothesis were supported by the experiment.

Difference Between Hypothesis and Prediction

Hypothesis refers to the supposition or proposed explanation made on the basis of limited evidence, as a starting point for further investigation. On the other hand, prediction refers to a thing that is predicted or a forecast of something. Thus, this explains the main difference between hypothesis and prediction.

Interpretation

Hypothesis will lead to explaining why something happened while prediction will lead to interpreting what might happen according to the present observations. This is a major difference between hypothesis and prediction.

Another difference between hypothesis and prediction is that hypothesis will result in providing answers or conclusions to a phenomenon, leading to theory, while prediction will result in providing assumptions for the future or a forecast.

While a hypothesis is directly related to statistics, a prediction, though it may invoke statistics, will only bring forth probabilities.

Moreover, hypothesis goes back to the beginning or causes of the occurrence while prediction goes forth to the future occurrence.

The ability to be tested is another difference between hypothesis and prediction. A hypothesis is testable, whereas a prediction cannot be tested until the predicted event either happens or fails to happen.

Hypothesis and prediction are integral components in scientific and research studies. However, they are also used in the general context. Hence, hypothesis and prediction are two distinct concepts although they are related to each other as well. The main difference between hypothesis and prediction is that hypothesis proposes an explanation to something which has already happened whereas prediction proposes something that might happen in the future.


Image Courtesy:

1. “Colonial Flagellate Hypothesis” by Katelynp1, own work (CC BY-SA 3.0), via Wikimedia Commons

2. “USA weather forecast 2006-11-07” by NOAA (Public Domain), via Wikimedia Commons

About the Author: Upen

Upen, BA (Honours) in Languages and Linguistics, has academic experience in and knowledge of international relations and politics. Her academic interests are the English language, European and Oriental languages, internal affairs and international politics, and psychology.


Hypothesis vs Prediction: Differences and Comparison

September 8, 2023 by Chukwuemeka Gabriel

A hypothesis is a tentative conjecture that explains an observation, phenomenon or scientific problem that can be tested through observation, investigation or scientific experimentation.

A prediction is a statement of what will happen in the future. Based on the recent, repeated outcomes of an event, one can predict what will happen next.

A prediction is basically a forecast. It’s a statement of what will happen in the future based on collected data, evidence, or previous knowledge.

A hypothesis is an assumption considered to be true for the purpose of argument or investigation.

In the academic world, hypotheses and predictions are important elements of the scientific process. However, there are key differences between a hypothesis and a prediction, and this article looks at those differences.

What Is a Hypothesis?

A hypothesis is a tentative conjecture that explains a phenomenon, observation, or scientific problem that can be tested through scientific experimentation, observation or investigation.

It’s an assumption considered to be true for the purpose of argument or investigation. It’s a statement that gives an answer to a proposed question by using actual facts and research.

Researchers form hypotheses to explain a certain phenomenon, and they state their hypotheses before starting their scientific experiments so that the experiments can test them.

A hypothesis is an assumption that can be proved or disproved. In research, it is treated as a predictive statement that can be tested using scientific methods.


What Is a Prediction?

A prediction is a statement that describes what will happen in the future. Based on the recent, repeated outcomes of an event, one can predict what will happen next.

It’s a statement of what will happen in the future based on collected data, evidence, or previous knowledge.

A prediction can be a guess based on collected data or on instinct. If you have noticed an occurrence regularly, you are likely to make correct predictions about it.

For instance, if a mailman comes to your house each day at exactly 3 p.m. for five days straight, you might predict the time the mailman will come to your house the next day.

Your prediction that the mailman will arrive at your house at exactly 3 p.m. is based on your previous observations.
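The mailman example can be sketched as a tiny forecasting rule: predict the next arrival time as the time seen most often so far. This is a hypothetical illustration; the function name `predict_next_arrival` and the "most frequent time" rule are assumptions, and a real forecast might instead use an average or a trend.

```python
# A minimal sketch of an observation-based prediction: forecast tomorrow's
# arrival time from the days already observed. The times are hypothetical.
from collections import Counter
from datetime import time


def predict_next_arrival(observed: list[time]) -> time:
    """Predict the next arrival as the most frequently observed time."""
    if not observed:
        raise ValueError("a prediction needs at least one past observation")
    most_common_time, _count = Counter(observed).most_common(1)[0]
    return most_common_time


if __name__ == "__main__":
    five_days = [time(15, 0)] * 5  # mail arrived at exactly 3 p.m. on five straight days
    print(predict_next_arrival(five_days))  # 15:00:00
```

The code offers no reason why the mail comes at 3 p.m.; it only extends a pattern, which is exactly what separates this kind of prediction from a hypothesis.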

A prediction is considered an informed guess if it comes from a person with subject knowledge. Using accurate data and logical reasoning based on close observation leads to a probable prediction.

Hypothesis vs Prediction: Differences between Hypothesis and Prediction

A hypothesis is an educated guess about a scientific problem or phenomenon, while a prediction is a statement of what will happen in the future. In science, hypotheses are based on current knowledge and understanding.

A hypothesis is an assumption considered to be true for the purpose of argument or investigation.

Predictions describe future events or outcomes; a prediction is a statement of what will happen in the future based on collected data, evidence, or previous knowledge.


Hypothesis vs Prediction: Comparison Chart

| Basis | Hypothesis | Prediction |
|---|---|---|
| Definition | A tentative conjecture that explains a phenomenon, observation, or scientific problem and can be tested through scientific experimentation, observation, or investigation. | A statement of what will happen in the future, based on collected data, evidence, or previous knowledge. |
| Based on | Facts and evidence | Collected data, previous observation, knowledge, facts, or evidence |
| Formulation | Usually takes a long time | Generally takes comparatively little time |
| Relationship | States a causal correlation between variables | Does not state a correlation between variables |
| Guess | Educated guess or sheer assumption | Pure guess |

Hypothesis vs Prediction: Similarities between Hypothesis and Prediction

Both hypothesis and prediction are statements defining the relationship between variables or the result of an event. A hypothesis and a prediction can be tested, verified and rejected or supported by evidence for the purpose of future research.

While predictions describe potential future events, hypotheses are statements describing potential cause-and-effect relationships.


Hypothesis vs Prediction: Tips on How to Write a Hypothesis

Here is how to write a hypothesis, with simple steps.

State your research question

First, state your research question clearly and in an orderly way. The hypothesis should include an answer to the problem statement or research question.

Next, once you clearly understand the limitations of the topic you selected, narrow it down to a focused problem. This will help you formulate a hypothesis and plan any other research you need to conduct to collect data.

Conduct preliminary research

Once you have established your study, carry out preliminary research: read through previous hypotheses, relevant academic articles, and existing data.

Make a three-dimensional theory

Every hypothesis includes variables, so identify both your independent and dependent variables and state the relationship you expect between them.

Write the first draft

Once you have everything set up, you can then compose your hypothesis.

Start by writing a first draft, then refine it into the statement you want your research to test. Make sure the draft clearly names the independent and dependent variables, as well as the connection between them.
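The drafting steps above amount to naming three parts: an independent variable, a dependent variable, and the relationship expected between them. The hypothetical `HypothesisDraft` class below is one way to sketch that checklist; the class name, the two allowed directions, and the study-time example are assumptions for illustration, and real hypotheses often need richer wording than this template allows.

```python
# A hypothetical template for drafting a hypothesis from its three parts:
# independent variable, dependent variable, and expected relationship.
from dataclasses import dataclass

ALLOWED_DIRECTIONS = {"increases", "decreases"}  # assumption: only directional hypotheses


@dataclass(frozen=True)
class HypothesisDraft:
    independent: str  # variable the researcher changes, e.g. "daily study time"
    dependent: str    # variable the researcher measures, e.g. "exam scores"
    direction: str    # expected relationship: "increases" or "decreases"

    def __post_init__(self) -> None:
        # Reject a draft unless all three parts are stated.
        if not self.independent.strip() or not self.dependent.strip():
            raise ValueError("name both the independent and dependent variables")
        if self.direction not in ALLOWED_DIRECTIONS:
            raise ValueError(f"direction must be one of {sorted(ALLOWED_DIRECTIONS)}")

    def text(self) -> str:
        """Render the draft as a single testable sentence."""
        return f"Increasing {self.independent} {self.direction} {self.dependent}."


if __name__ == "__main__":
    draft = HypothesisDraft("daily study time", "exam scores", "increases")
    print(draft.text())  # Increasing daily study time increases exam scores.
```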

Hypothesis vs Prediction: Advantages of Hypothesis

Let’s explore a few advantages of using a hypothesis in scientific research.

- A hypothesis can be tested through scientific experimentation, observation, or investigation, and then verified or rejected.

- A hypothesis guides further research, as it suggests the observations and scientific experiments that should be carried out.

- A hypothesis encourages critical thinking and helps to identify cause-and-effect relationships.

Disadvantages of Hypothesis

- A hypothesis can narrow the scope of a study, so research findings may be limited to what the hypothesis addresses.

- Research findings may not generalize if a hypothesis applies strictly to a specific population.


Hypothesis vs Prediction: Advantages of Prediction

- Both people and organizations can use predictions to plan for specific events, such as weather or market trends.

- Predictions help in decision-making by providing insight into the potential results of various actions.

- Predictions help in risk management, since they can give advance warning of possible stock market fluctuations or natural disasters.

- Predictions can help in allocating resources such as inventory, budget, and workforce.

Disadvantages of Predictions

- Predictions can be wrong, so they should not be relied on completely.

- Predictions can be skewed by bias, which makes them less accurate.

Both a hypothesis and a prediction are statements describing the relationship between variables or the outcome of an event.

Based on the recent outcomes of an event, one can predict what will happen next. A hypothesis is an educated guess about a scientific problem or phenomenon, while a prediction is a statement of what will happen in the future.


About Chukwuemeka Gabriel

Gabriel Chukwuemeka is a physics graduate who loves geography and has in-depth knowledge of astrophysics. An ardent writer, he writes for Stay Informed Group and enjoys looking at the world map when he is not writing.



Difference Between | Descriptive Analysis and Comparisons

Difference Between Hypothesis and Prediction

Key Difference: A hypothesis is a tentative explanation of a phenomenon or event. It is widely used as the basis for tests, and the test results determine whether the hypothesis is accepted or rejected. A prediction, on the other hand, is generally associated with non-scientific guessing. It states the outcome of a future event based on observation, experience, or even a hypothesis. A hypothesis can also be described as a type of prediction that can be tested.

Example of a hypothesis:

"I think that the leaves of this plant became discolored due to lack of sunlight."

This sentence clearly involves a guess, but it is an educated guess, which is why a hypothesis is often described as an educated guess. It can be tested by scientific methods or further investigation, for instance by comparing plants kept in sunlight with plants kept in shade.

In the non-scientific world, a prediction generally states the outcome of a future event. It is also called a forecast, and in most cases it is not based on any experience or knowledge.

For example: "If I buy a lottery ticket today, I will win." This statement makes a claim about the future, but it cannot be tested before the event actually occurs, so it is termed a prediction.

Comparison between Hypothesis and Prediction:

| | Hypothesis | Prediction |
|---|---|---|
| Definition | A hypothesis is a tentative explanation of a phenomenon or event. It is widely used as the basis for tests, and the test results determine whether the hypothesis is accepted or rejected. | A prediction is generally associated with a non-scientific guess. It states the outcome of future events based on observation, experience, or even a hypothesis. |
| Origin | From Greek hypotithenai, meaning "to put under" or "to suppose." | From Latin praedict-, "made known beforehand, declared." |
| Testing methodology | Different experiments can lead to different results, so a hypothesis may be supported or rejected depending on the method the scientists use. | A prediction based on irrational notions can only be checked when the associated event occurs. A scientific prediction is based on a hypothesis and can be tested. |
| Supported by reasoning | Yes | Depends |
| Example | Ultraviolet light may cause skin cancer. | Leaves will change color when the next season arrives. |


Mon, 10/26/2015 - 18:52


Hypotheses Versus Predictions

Hypotheses and predictions are not the same thing.

Posted January 12, 2018


Blogs are not typically places where professors post views about arcane matters. But blogs have the advantage of providing places to convey quick messages that may be of interest to selected parties. I've written this blog to point students and others to a spot where a useful distinction is made that, as far as I know, hasn't been made before. The distinction concerns two words that are used interchangeably though they shouldn't be. The words are hypothesis (or hypotheses) and prediction (or predictions).

It's not uncommon to see these words swapped for each other willy-nilly, as in, "We sought to test the hypothesis that the two groups in our study would remember the same number of words," or "We sought to test the prediction that the two groups in our study would remember the same number of words." Indifference to the contrast in meaning between "hypothesis" and "prediction" is unfortunate, in my view, because "hypothesis" and "prediction" (or "hypotheses" and "predictions") mean very different things. A student proposing an experiment, or an already-graduated researcher doing the same, will have more gravitas if s/he states a hypothesis from which a prediction follows than if s/he proclaims a prediction from thin air.

Consider the prediction that two balls of different mass dropped from the Leaning Tower of Pisa will take the same time to reach the ground. This is the famous prediction tested (or allegedly tested) by Galileo. This experiment, one of the first in the history of science, was designed to test two contrasting predictions. One was that the time for the two balls to drop would be the same. The other was that the time for the heavier ball to drop would be shorter. (The third possibility, that the lighter ball would drop more quickly, was logically possible but not taken seriously.)

The importance of the predictions came from the hypotheses on which they were based. Those hypotheses couldn't have been more different. One stemmed from Aristotle and rested on an entire system of assumptions about the world's basic elements, including the idea that motion requires a driving force, with the force being greater for a heavier object than a lighter one, in which case the heavier object would land first. The other hypothesis came from an entirely different conception which made no such assumptions, as crystallized (later) by Newton. It led to the prediction of equivalent drop times. Dropping two balls and seeing which, if either, landed first was a more important experiment if it was motivated by different hypotheses than if it was motivated by two different off-the-cuff predictions. Predictions can be ticked off by a monkey at a typewriter, so to speak. Anyone can list possible outcomes. That's not good (interesting) science.
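The Newtonian prediction of equal drop times can be derived in a few lines. This is a standard textbook idealization. It assumes a uniform gravitational field of strength g, release from rest at height h, and no air resistance. None of these assumptions come from the post itself.

```latex
\begin{aligned}
F &= m g && \text{(weight of a ball of mass } m\text{)}\\
a &= \frac{F}{m} = g && \text{(Newton's second law: the mass cancels)}\\
h &= \tfrac{1}{2} g t^{2} \;\Longrightarrow\; t = \sqrt{\frac{2h}{g}} && \text{(fall from rest through height } h\text{)}
\end{aligned}
```

Because m does not appear in the expression for t, this hypothesis leads to the prediction that both balls land together. The Aristotelian assumption that a heavier object is driven harder leads to the opposite prediction, and a single drop test can discriminate between the two. In real drops, air resistance introduces small differences.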

Let me say this, then, to students or colleagues reading this (some of whom might be people to whom I give the URL for this blog): Be cognizant of the distinction between "hypotheses" and "predictions." Hypotheses are claims or educated guesses about the world or the part of it you are studying. Predictions are derived from hypotheses and define opportunities for seeing whether expected consequences of hypotheses are observed.

Critically, if a prediction is confirmed (if the data agree with the prediction), you can say that the data are consistent with the prediction and, from that point onward, you can also say that the data are consistent with the hypothesis that spawned the prediction. You can't say that the data prove the hypothesis, however. The reason is that any of an infinite number of other hypotheses might account for the outcome you obtained. If you say that a given data pattern proves that such-and-such hypothesis is correct, you will be shot down, and rightly so. It's fine to say that the data you have are consistent with a hypothesis, and it's fine for you to say that a hypothesis is (or appears to be) wrong because the data you got are inconsistent with it. The latter outcome is the culmination of the hypothetico-deductive method: you can say that a hypothesis is, or seems to be, incorrect if you have data that violate it, but you can never say that a hypothesis is right because you have data consistent with it; some other hypothesis might actually correspond to the true explanation of what you found. By creating hypotheses that lead to different predictions, you can see which prediction is not supported, and insofar as you can make progress by rejecting hypotheses, you can depersonalize your science by developing hypotheses that are worth disproving. The worth of a hypothesis will be judged by how resistant it is to attempts at disconfirmation over many years by many investigators using many methods.
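A toy Python sketch can illustrate the point that consistent data cannot single out one hypothesis. The two functions and the three data points below are invented purely for illustration, and nothing here comes from the post. Both hypothetical hypotheses fit the observations exactly, yet they lead to different predictions for a new condition. That difference is what makes a further test informative.

```python
# Toy illustration (hypothetical functions and made-up data): two different
# "hypotheses" about how y depends on x that agree on every observation so far.


def hypothesis_a(x: float) -> float:
    """Hypothetical hypothesis A: y is simply twice x."""
    return 2 * x


def hypothesis_b(x: float) -> float:
    """Hypothetical hypothesis B: adds a term that is zero at x = 0, 1 and 2."""
    return 2 * x + x * (x - 1) * (x - 2)


# Illustrative observations only; these are not real measurements.
observed = {0: 0.0, 1: 2.0, 2: 4.0}


def consistent(hypothesis, data, tol=1e-9):
    """Return True if the hypothesis reproduces every observed value within tol."""
    return all(abs(hypothesis(x) - y) <= tol for x, y in data.items())


if __name__ == "__main__":
    # Both hypotheses are consistent with the data; neither is "proven".
    print(consistent(hypothesis_a, observed), consistent(hypothesis_b, observed))
    # They lead to different predictions for an untested condition, x = 3,
    # so measuring y at x = 3 could reject one of them.
    print(hypothesis_a(3), hypothesis_b(3))
```

If a new measurement at x = 3 came out near 6, the data would be inconsistent with hypothesis_b, so it could be rejected. Hypothesis_a would only remain consistent with the data. It would not be proven.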

Some final comments. First, hypotheses don't predict; people do. You can say that a prediction arose from a hypothesis, but you can't say, or shouldn't say, that a hypothesis predicts something.

Second, beware of the admonition that hypotheses are weak if they predict no differences. Newtonian mechanics predicts no difference in the landing times of heavy and light objects dropped from the same height at the same time. The fact that Newtonian mechanics predicts no difference hardly means that Newtonian mechanics is lightweight. Instead, the prediction of no difference in landing times demands creation of extremely sensitive experiments. Anyone can get no difference with sloppy experiments. By contrast, getting no difference when a sophisticated hypothesis predicts none and when one has gone to great lengths to detect even the tiniest possible difference ... now that's good science.