An official website of the United States government

The .gov means it’s official. Federal government websites often end in .gov or .mil. Before sharing sensitive information, make sure you’re on a federal government site.

The site is secure. The https:// ensures that you are connecting to the official website and that any information you provide is encrypted and transmitted securely.

Biostatistics Series Module 2: Overview of Hypothesis Testing

Affiliations.

- 1 Department of Pharmacology, Institute of Postgraduate Medical Education and Research, Kolkata, West Bengal, India.

- 2 Department of Clinical Pharmacology, Seth GS Medical College and KEM Hospital, Parel, Mumbai, Maharashtra, India.

- PMID: 27057011

- PMCID: PMC4817436

- DOI: 10.4103/0019-5154.177775

Hypothesis testing (or statistical inference) is one of the major applications of biostatistics. Much of medical research begins with a research question that can be framed as a hypothesis. Inferential statistics begins with a null hypothesis that reflects the conservative position of no change or no difference in comparison to baseline or between groups. Usually, the researcher has reason to believe that there is some effect or some difference, which is the alternative hypothesis. The researcher therefore proceeds to study samples and measure outcomes in the hope of generating evidence strong enough for the statistician to be able to reject the null hypothesis. The concept of the P value is almost universally used in hypothesis testing. It denotes the probability of obtaining by chance a result at least as extreme as that observed when the null hypothesis is true and no real difference exists. Usually, if P is < 0.05, the null hypothesis is rejected and sample results are deemed statistically significant. With the increasing availability of computers and access to specialized statistical software, the drudgery involved in statistical calculations is now a thing of the past, once the learning curve of the software has been traversed. Life sciences researchers are therefore free to devote themselves to optimally designing the study, carefully selecting the hypothesis tests to be applied, and conducting the study well. Unfortunately, selecting the right test seems difficult initially. Thinking of the research hypothesis as addressing one of five generic research questions helps in selecting the right hypothesis test. In addition, it is important to be clear about the nature of the variables (e.g., numerical vs. categorical; parametric vs. nonparametric) and the number of groups or data sets being compared at a time (e.g., two or more than two). The same research question may be explored by more than one type of hypothesis test. While this may be useful in highlighting different aspects of the problem, merely reapplying different tests to the same issue in the hope of finding a P < 0.05 is a misuse of statistics. Finally, it is becoming the norm that an estimate of the size of any effect, expressed with its 95% confidence interval, is required for meaningful interpretation of results. A large study is likely to have a small (and therefore "statistically significant") P value, but a "real" estimate of the effect is provided by the 95% confidence interval. If the intervals overlap between two interventions, then the difference between them is not so clear-cut even if P < 0.05. The two approaches are now considered complementary.

Keywords: Confidence interval; P value; hypothesis testing; inferential statistics; null hypothesis; research question.
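To make the point about large studies concrete, here is a minimal Python sketch. It is not from the article and assumes a two-sided z-test for a difference between two independent means with a known common SD. The function name `diff_with_ci` and the numbers are hypothetical: a 0.5 mmHg difference, SD 10 mmHg, and 10,000 patients per group. In this setting a very large study yields a very small P value, while the 95% confidence interval shows that the effect is under 1 mmHg.

```python
from statistics import NormalDist

def diff_with_ci(mean_diff, sd, n_per_group, level=0.95):
    """Two-sided z-test P value and CI for a difference in means.
    Assumes two independent groups of equal size with a known common SD."""
    se = sd * (2 / n_per_group) ** 0.5                   # SE of the difference between means
    z = mean_diff / se
    p = 2 * (1 - NormalDist().cdf(abs(z)))               # two-sided P value
    z_crit = NormalDist().inv_cdf(1 - (1 - level) / 2)   # about 1.96 for a 95% CI
    return p, (mean_diff - z_crit * se, mean_diff + z_crit * se)

# Hypothetical trial: 0.5 mmHg difference, SD 10 mmHg, 10,000 patients per group
p, (lo, hi) = diff_with_ci(0.5, 10, 10_000)
print(f"P = {p:.4f}, 95% CI {lo:.2f} to {hi:.2f} mmHg")
```

The P value alone would suggest an important finding. The interval shows how small the plausible effect actually is.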


Figures:

- Diagrammatic representation of the concept of the null hypothesis and error types. Note…

- A normal distribution curve with its two tails. Note that an observed result…

- Tests to assess statistical significance of difference between data sets that are independent…

- Tests to assess statistical significance of difference between data sets that are or…

- Tests for association between variables

- Tests for comparing time to event data sets


Indian J Pharmacol, v.44(4); Jul-Aug 2012

Basic biostatistics for post-graduate students

Ganesh N. Dakhale

Department of Pharmacology, Indira Gandhi Govt. Medical College, Nagpur - 440 018, Maharashtra, India

Sachin K. Hiware

Abhijit T. Shinde, Mohini S. Mahatme

Statistical methods are important for drawing valid conclusions from the data obtained. This article provides background information on fundamental methods and techniques in biostatistics for postgraduate students. The main focus is on types of data, measures of central tendency and variability, and basic tests that are useful for analyzing different types of observations. Topics such as the normal distribution, calculation of sample size, level of significance, null hypothesis, indices of variability, and different tests are explained in detail with suitable examples. Using these guidelines, we are confident that postgraduate students will be able to classify the distribution of their data and apply the proper test. Information is also given on free software programs and websites useful for statistical calculations. Thus, postgraduate students will benefit whether they opt for academics or for industry.

Introduction

Statistics is basically a way of thinking about data that are variable. This article deals with basic biostatistical concepts and their application. The aim is to enable postgraduate medical and allied science students to analyze and interpret their study data and to critically appraise published literature. Acquiring such skills currently forms an integral part of postgraduate training. It is commonly seen that most postgraduate students are inherently apprehensive about biostatistics. They prefer to stay away from it, apart from memorizing the information needed to get through their postgraduate examinations. Self-motivation for effective learning and application of statistics is lacking.

Statistics refers to both data and statistical methods. It can be considered an art as well as a science. Statistics can neither prove nor disprove anything; it is just a tool. Statistics without scientific application has no roots. Thus, statistics may be defined as the discipline concerned with the treatment of numerical data derived from groups of individuals. These individuals may be human beings, animals, or other organisms. Biostatistics is the branch of statistics applied to the biological and medical sciences. It covers applications and contributions not only from health, medicine, and nutrition but also from fields such as genetics, biology, epidemiology, and many others.[ 1 ] Biostatistics mainly consists of steps such as generating a hypothesis, collecting data, and applying statistical analysis. To begin with, readers should understand the data obtained during an experiment, their distribution, and their analysis, so that a valid conclusion can be drawn from the experiment.

Statistical methods have two major branches: descriptive and inferential. Descriptive statistics summarize the distribution of population measurements. They describe the types of data, estimates of central tendency (mean, mode, and median), measures of variability (such as standard deviation), and measures of association (such as the correlation coefficient). Inferential statistics express the level of certainty about estimates and include hypothesis testing, the standard error of mean, and confidence intervals.

Types of Data

Observations recorded during research constitute data. There are three types of data: nominal, ordinal, and interval. The choice of statistical method mainly depends on the type of data. Data generally show a picture of both variability and central tendency. Therefore, it is very important to understand the types of data.

1) Nominal data: This is synonymous with categorical data. Observations are simply assigned “names” or categories based on the presence or absence of certain attributes/characteristics, without any ranking between the categories.[ 2 ] For example, patients are categorized by gender as male or female, or by religion as Hindu, Muslim, or Christian. Nominal data also include binomial (dichotomous) data, which have only two possible outcomes. For example, the outcome of cancer may be death or survival, and therapy with drug ‘X’ may show improvement or no improvement.

2) Ordinal data: This is also called ordered, categorical, or graded data. Generally, this type of data is expressed as scores or ranks. There is a natural order among the categories, so they can be ranked or arranged in order.[ 2 ] For example, pain may be classified as mild, moderate, or severe. Since there is an order between the three grades of pain, this type of data is called ordinal. To indicate the intensity of pain, it may also be expressed as scores (mild = 1, moderate = 2, severe = 3).

3) Interval data: This type of data is characterized by an equal and definite interval between two measurements. For example, weight may be expressed as 20, 21, 22, 23, 24 kg, and the interval between 20 and 21 is the same as that between 23 and 24. Interval data can be either continuous or discrete. A continuous variable can take any value within a given range; for example, hemoglobin (Hb) level may be recorded as 11.3, 12.6, or 13.4 g%. A discrete variable is usually assigned integer values, i.e., it does not take fractional values. Examples include the number of cigarettes smoked per day by a person and blood pressure values, which are generally recorded as whole numbers.

Sometimes, data may be converted from one form to another to reduce skewness and make them follow the normal distribution. For example, drug doses are converted to their log values and plotted in a dose-response curve to obtain a straight line, which makes analysis easier.[ 3 ] Data can be transformed by taking the logarithm, square root, or reciprocal. Logarithmic conversion is the most common data transformation used in medical research.
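As a rough illustration, the Python code below computes a moment coefficient of skewness for hypothetical doubling doses, before and after a log10 transform. This is a sketch, not a method from the article. Doses that double at each step are strongly right-skewed on the original scale but become evenly spaced, and hence symmetric, on the log scale. The helper `skewness` and the dose values are our own, written for illustration only.

```python
import math

def skewness(values):
    """Moment coefficient of skewness (population form); 0 for a symmetric set."""
    n = len(values)
    mean = sum(values) / n
    m2 = sum((v - mean) ** 2 for v in values) / n   # second central moment
    m3 = sum((v - mean) ** 3 for v in values) / n   # third central moment
    return m3 / m2 ** 1.5

doses = [1, 2, 4, 8, 16, 32, 64]             # hypothetical doubling doses (mg)
log_doses = [math.log10(d) for d in doses]   # equally spaced on the log scale

print(round(skewness(doses), 2))      # clearly positive: right-skewed
print(round(skewness(log_doses), 2))  # approximately 0: symmetric after transformation
```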

Measures of Central Tendency

Mean, median, and mode are the three measures of central tendency. The mean is the most common measure of central tendency and is widely used to calculate averages. It is the measure least affected by sampling fluctuations. The mean of a number of individual values (X) is generally nearer to the true (population) value than a single individual value is, because means vary less than individual values; this gives confidence in using them. The mean is calculated by adding up the individual values (Σx) and dividing the sum by the number of items (n). Suppose the heights of 7 children are 60, 70, 80, 90, 90, 100, and 110 cm. The sum of the heights is 600 cm, so mean (X̄) = Σx/n = 600/7 = 85.71 cm.

The median is the middle value of a set of data arranged in order from the lowest to the highest (or vice versa). Half of the observations are smaller than the median and half are larger. The median is used for scores and ranks. It is a better indicator of central value when one or more of the lowest or highest observations lie far from the rest, or when the values are not evenly distributed. With an even number of observations, the median is taken as the average of the two middle values; with an odd number, the central value is the median. In the above example, the median is 90. The mode is the most frequent value, i.e., the point of maximum concentration in a distribution of quantitative data. In the above example, the mode is 90. The mode is used when the values vary widely and is rarely used in medical studies. For skewed distributions or samples with wide variation, the mode and median are useful.
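The three measures for the height example can be checked with Python's standard `statistics` module. This is a minimal sketch. The last line uses a hypothetical even-sized list to show the averaging rule for the median.

```python
from statistics import mean, median, mode

heights_cm = [60, 70, 80, 90, 90, 100, 110]   # the seven children from the mean example

print(round(mean(heights_cm), 2))  # 85.71  (600 / 7)
print(median(heights_cm))          # 90     (4th of 7 ordered values)
print(mode(heights_cm))            # 90     (the only repeated value)

# Even number of observations: average of the two middle values (70 and 80)
print(median([60, 70, 80, 90]))    # 75.0
```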

Even after calculating the mean, it is necessary to have some index of variability among the data. The range (the lowest and the highest values) can be given, but it is not very useful if one of these extreme values is far from the rest. It also does not tell how the observations are scattered around the mean. Therefore, the following indices of variability play a key role in biostatistics.

Standard Deviation

In addition to the mean, the degree of variability of responses has to be indicated, since the same mean may be obtained from different sets of values. Standard deviation (SD) describes the variability of the observations about the mean.[ 4 ] SD is the most useful measure for describing the scatter of a population. Summary measures calculated from samples (such as the mean, median, and mode) must also be tested for how reliably they represent the population.

To calculate the SD, we first need its square, the variance. Variance is the average squared deviation around the mean and is calculated as $\text{Variance} = \frac{\sum (x - \bar{x})^2}{n}$. When a sample is used to estimate the population variance, the divisor is n − 1 instead: $\text{Variance} = \frac{\sum (x - \bar{x})^2}{n - 1}$. Then $\text{SD} = \sqrt{\text{Variance}}$. SD helps us predict how far a given value is likely to be from the mean, and therefore lets us predict the coverage of values. SD is most appropriate when data are normally distributed. If individual observations are clustered around the sample mean (M) and scattered evenly around it, the SD helps to calculate a range that will include a given percentage of observations. For example, for approximately normal data, the range M ± 2(SD) will include about 95% of observations, and the range M ± 3(SD) will include about 99.7%. If observations are widely dispersed, central values are less representative of the data, and a measure of spread such as the variance becomes essential. When reporting mean and SD, ‘mean (SD)’ is a better representation than ‘mean ± SD’, because it minimizes confusion with the confidence interval.[ 5 , 6 ]
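This minimal Python sketch uses the standard `statistics` module on the height data from the mean example. `pvariance` divides by n and `variance` divides by n − 1, matching the two variance formulas above; `stdev` is the square root of the n − 1 version. The M ± 2 SD range is printed only to show the arithmetic. With just 7 observations it should not be read as exact 95% coverage.

```python
from statistics import mean, pvariance, variance, stdev

heights_cm = [60, 70, 80, 90, 90, 100, 110]

print(round(pvariance(heights_cm), 2))  # divisor n: 253.06
print(round(variance(heights_cm), 2))   # divisor n - 1: 295.24
sd = stdev(heights_cm)                  # sample SD = square root of variance(...)
m = mean(heights_cm)
print(f"mean (SD) = {m:.2f} ({sd:.2f}) cm")          # reported as 'mean (SD)'
print(f"M ± 2 SD: {m - 2 * sd:.1f} to {m + 2 * sd:.1f} cm")
```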

Correlation Coefficient

Correlation is the relationship between two variables. The correlation coefficient, represented by ‘r’, measures the degree of linear relationship between two continuous variables.[ 7 ] The Chi-square test, by contrast, does not give the degree of association; it only tells us whether two variables are dependent on or independent of each other. Correlation may be due to some direct relationship between two variables, or to some inherent factor common to both. Correlation coefficient values always lie between -1 and +1. If the variables are not correlated, the correlation coefficient is zero. The maximum value of +1 is obtained when all points in the scatter plot lie on a straight line with positive slope; this is perfect positive correlation. The association is positive if the values on the x-axis and y-axis tend to be high or low together. Conversely, the association is negative if high y values tend to go with low x values, and a value of -1 represents perfect negative correlation. The larger the absolute value of the correlation coefficient, the stronger the association. A weak correlation may be statistically significant if the number of observations is large. Correlation between two variables does not necessarily imply a cause-and-effect relationship. It indicates the strength of association for any data in comparable terms. Examples include correlation between height and weight, age and height, weight loss and poverty, parity and birth weight, or socioeconomic status and hemoglobin. Testing the significance of r requires the x and y variables to be normally distributed. Correlation is generally used to form hypotheses and to suggest areas of future research.
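The coefficient can be computed directly from its definition. The Python sketch below shows the two extreme cases described above. The helper name `pearson_r` is ours and the data are hypothetical.

```python
import math

def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length numeric lists."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))   # co-deviation
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)

x = [1, 2, 3, 4, 5]
print(pearson_r(x, [2, 4, 6, 8, 10]))   # 1.0  (perfect positive correlation)
print(pearson_r(x, [10, 8, 6, 4, 2]))   # -1.0 (perfect negative correlation)
```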

Types of Distribution

Though this universe is full of uncertainty and variability, large sets of experimental/biological observations often tend towards a normal distribution. This behavior of data is the key to inferential statistics. There are two types of distribution.

1) Gaussian/normal distribution

If data are symmetrically distributed on both sides of the mean and form a bell-shaped curve in a frequency distribution plot, the distribution is called normal or Gaussian. It is named after the mathematician Carl Friedrich Gauss. The normal curve describes the ideal distribution of continuous values such as heart rate, blood sugar level, and Hb% level. Whether our data are normally distributed can be checked by entering the raw study data into statistical software and applying a normality test. Statistical treatment of data can generate a number of useful measurements, the most important of which are the mean and standard deviation. In an ideal Gaussian distribution, the values lying between 1 SD below and 1 SD above the mean (i.e., mean ± 1 SD) include 68.27% of all values. The range mean ± 2 SD (more precisely, ± 1.96 SD) includes approximately 95% of values, excluding 2.5% above and 2.5% below the range [ Figure 1 ]. In an ideal distribution, the mean, mode, and median of the population under study are equal.[ 8 ] Even if the distribution in the original population is far from normal, the distribution of sample averages tends to become normal as the sample size increases. This is the single most important reason why the normal distribution matters so much. Several methods of analysis, including the ‘t’ test and analysis of variance (ANOVA), assume normality. In a normal distribution, skew is zero. If the difference (mean − median) is positive, the curve is positively skewed; if it is negative, the curve is negatively skewed. In either case, the measures of central tendency differ [ Figure 1 ].

Figure 1: Normal distribution curve with negative and positive skew (μ = mean, σ = standard deviation)
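The coverage percentages quoted above can be reproduced from the standard normal distribution with Python's `statistics.NormalDist`. This is a minimal sketch.

```python
from statistics import NormalDist

std_normal = NormalDist(mu=0, sigma=1)
for k in (1, 1.96, 2, 3):
    coverage = std_normal.cdf(k) - std_normal.cdf(-k)   # area within mean ± k SD
    print(f"mean ± {k} SD covers {coverage:.2%} of values")
# prints 68.27%, 95.00%, 95.45% and 99.73%
```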

2) Non-Gaussian (non-normal) distribution

If the data are skewed to one side, the distribution is non-normal. It may be, for example, a binomial or a Poisson distribution. In a binomial distribution, an event can have only one of two possible outcomes, such as yes/no, positive/negative, survival/death, or smoker/non-smoker. When the distribution of data is non-Gaussian, tests such as the Wilcoxon, Mann-Whitney, Kruskal-Wallis, and Friedman tests can be applied, depending on the nature of the data.
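Nonparametric tests like these work on ranks or orderings rather than on raw values. As a sketch of the idea, the Python code below computes the Mann-Whitney U statistic. For each pair of observations from the two groups, it counts which one is larger, with ties counting one half. The helper `mann_whitney_u` and the data are hypothetical. Obtaining a P value from U requires tables or statistical software, which this sketch does not attempt.

```python
def mann_whitney_u(a, b):
    """U statistic for sample a: pairs (a_i, b_j) with a_i > b_j, ties counted as 0.5."""
    u = 0.0
    for x in a:
        for y in b:
            if x > y:
                u += 1
            elif x == y:
                u += 0.5
    return u

a = [3, 5, 7, 9]   # hypothetical pain scores, group A
b = [1, 2, 4, 6]   # hypothetical pain scores, group B
u_a, u_b = mann_whitney_u(a, b), mann_whitney_u(b, a)
print(u_a, u_b)    # the two U values always add up to len(a) * len(b)
```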

Standard Error of Mean

We study a sample to draw conclusions about all patients (the population) and use the sample mean (M) as an estimate of the population mean (M1). We therefore need to know how far M can vary from M1 if repeated samples of size n are taken. A measure of this variability is provided by the standard error of mean (SEM), which is calculated as SEM = SD/√n. SEM is therefore always smaller than SD when n > 1. SD describes the scatter of individual observations in a sample, whereas SEM describes the scatter of sample means around the population mean.
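Here is a minimal sketch of the SEM formula in Python, reusing the height data from the mean example. The second part uses hypothetical sample sizes to show that, for the same SD, quadrupling n halves the SEM.

```python
import math
from statistics import stdev

heights_cm = [60, 70, 80, 90, 90, 100, 110]
sd = stdev(heights_cm)                     # sample SD (n - 1 divisor)
sem = sd / math.sqrt(len(heights_cm))      # SEM = SD / sqrt(n)
print(f"SD = {sd:.2f} cm, SEM = {sem:.2f} cm")

# Same SD, larger samples: SEM shrinks in proportion to 1 / sqrt(n)
for n in (7, 28, 112):
    print(n, round(sd / math.sqrt(n), 2))
```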

Applications of Standard Error of Mean

- 1) To determine whether a sample is drawn from the same population or not when its mean is known.

Mean fasting blood sugar + 2 SEM = 90 + (2 × 0.56) = 91.12

Mean fasting blood sugar - 2 SEM = 90 - (2 × 0.56) = 88.88

So, the confidence limits of mean fasting blood sugar in the lawyers' population are 88.88 to 91.12 mg%. Suppose the mean fasting blood sugar of another group of lawyers is 80 mg%. This lies outside these limits, so we can say that this group is unlikely to be from the same population.
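The same arithmetic appears below as a short Python sketch. It uses only the numbers given in the example (mean 90 mg%, SEM 0.56 mg%) and the comparison mean of 80 mg%.

```python
mean_fbs = 90.0   # mean fasting blood sugar (mg%) from the example
sem = 0.56        # SEM (mg%) from the example

lower, upper = mean_fbs - 2 * sem, mean_fbs + 2 * sem
print(f"{lower:.2f} to {upper:.2f} mg%")      # 88.88 to 91.12 mg%

new_sample_mean = 80.0
print(lower <= new_sample_mean <= upper)      # False: outside the limits
```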

Confidence Interval (CI) or Fiducial Limits

Confidence limits are the two ends of the interval within which the population mean is expected to lie with a stated confidence, usually 95%. If similar experiments were repeated many times, about 95% of the intervals calculated in this way would contain the true population mean. The value of ‘t’ corresponding to a probability of 0.05 for the appropriate degrees of freedom is read from a table of the t distribution. Multiplying this value by the standard error gives the 95% confidence limits for the mean, as per the formulas below.

Lower confidence limit = mean - (t 0.05 × SEM)

Upper confidence limit = mean + (t 0.05 × SEM)

If n > 30, the interval M ± 2(SEM) will include the population mean (M1) with a probability of about 95%, and the interval M ± 2.58(SEM) will include it with a probability of 99%. These intervals are, therefore, called the 95% and 99% confidence intervals, respectively.[ 9 ] An important difference between the ‘p’ value and the confidence interval concerns what each conveys. The confidence interval shows the size and precision of an effect, which helps in judging clinical significance, whereas the ‘p’ value indicates only statistical significance. Therefore, in many clinical studies, the confidence interval is preferred to the ‘p’ value,[ 4 ] and some journals specifically ask for it.
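This is a minimal Python sketch of the t-based limits, reusing the height data (n = 7, so 6 degrees of freedom). Python's standard library has no t distribution. The two-sided value t(0.05, 6 df) ≈ 2.447 is therefore hard-coded as a value read from a standard t table. For large samples, the normal multipliers are obtained from `statistics.NormalDist`.

```python
import math
from statistics import NormalDist, mean, stdev

heights_cm = [60, 70, 80, 90, 90, 100, 110]   # n = 7, so df = 6
m = mean(heights_cm)
sem = stdev(heights_cm) / math.sqrt(len(heights_cm))

T_0_05_DF6 = 2.447   # two-sided t for P = 0.05 with 6 df, taken from a t table
lower, upper = m - T_0_05_DF6 * sem, m + T_0_05_DF6 * sem
print(f"95% CI: {lower:.1f} to {upper:.1f} cm")

# For large samples (n > 30), z multipliers from the normal distribution replace t
z95 = NormalDist().inv_cdf(0.975)
z99 = NormalDist().inv_cdf(0.995)
print(round(z95, 2), round(z99, 2))   # 1.96 2.58
```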

Many articles in medical journals use mean and SEM to describe variability within a sample. However, the SEM is a measure of the precision of the estimated population mean, whereas SD is a measure of data variability around the mean of a sample. Hence, SEM is not a descriptive statistic and should not be used as such.[ 10 ] The correct use of SEM is only to indicate the precision of the estimated population mean.

Null Hypothesis

The primary objective of statistical analysis is to find out whether the effect produced by a compound under study is genuine and not due to chance. Hence, the analysis usually includes a test of statistical significance. The first step in such a test is to state the null hypothesis. In the null hypothesis (statistical hypothesis), we assume that there is no difference between the two groups. The alternative hypothesis (research hypothesis) states that there is a difference between the two groups. For example, a new drug ‘A’ is claimed to have analgesic activity, and we want to test it against placebo. In this study, the null hypothesis would be ‘there is no difference between drug A and placebo.’ The alternative hypothesis would be ‘there is a difference between new drug ‘A’ and placebo.’ When the null hypothesis is accepted (i.e., not rejected), the difference between the two groups is not significant. The data are then consistent with both samples having been drawn from a single population, with the observed difference arising by chance. If the null hypothesis is rejected in favor of the alternative hypothesis, the difference between the two groups is statistically significant. A difference between drug ‘A’ and placebo that would arise by chance in less than 5% of cases (less than 1 in 20 times) is considered statistically significant ( P < 0.05). In any experimental procedure, two types of error are possible.
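One way to see what "arising by chance" means is an exact permutation test. This is a different technique from the tests named in this article and is used here purely for illustration. Under the null hypothesis, the labels "drug" and "placebo" are interchangeable. P is then the fraction of all possible relabelings that give a difference in means at least as large as the one observed. The scores and the helper `permutation_p_value` are hypothetical.

```python
from itertools import combinations
from statistics import mean

def permutation_p_value(group_a, group_b):
    """Exact two-sided permutation test for a difference between two group means."""
    pooled = group_a + group_b
    observed = abs(mean(group_a) - mean(group_b))
    extreme = total = 0
    # Every way of choosing which pooled observations are labeled "group A"
    for idx in combinations(range(len(pooled)), len(group_a)):
        chosen = set(idx)
        a = [pooled[i] for i in chosen]
        b = [pooled[i] for i in range(len(pooled)) if i not in chosen]
        if abs(mean(a) - mean(b)) >= observed - 1e-12:   # tolerance for float rounding
            extreme += 1
        total += 1
    return extreme / total

drug_a = [6, 7, 8, 9, 10]   # hypothetical pain-relief scores
placebo = [1, 2, 3, 4, 5]
print(round(permutation_p_value(drug_a, placebo), 4))   # 0.0079 (2 of 252 splits)
```

With five patients per group there are 252 possible splits. Only the observed split and its mirror image are as extreme, so P ≈ 0.008 < 0.05 and the null hypothesis would be rejected.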

1) Type I Error (False positive)

This is also known as α error. It is the probability of finding a difference when no such difference actually exists, which results in the acceptance of an inactive compound as an active one. Such an error, which is not unusual, may be tolerated because in subsequent trials the compound will reveal itself as inactive and will finally be rejected.[ 11 ] For example, suppose our trial shows that new drug ‘A’ has analgesic action and the drug is accepted as an analgesic. If we have committed a type I error, subsequent trials of this compound will fail to confirm our claim, and drug ‘A’ will later be withdrawn from the market. The type I error rate is fixed in advance by the choice of the level of significance employed in the test.[ 12 ] It may be noted that type I error can be made smaller by choosing a stricter level of significance. Increasing the sample size mainly reduces type II error rather than type I error.

2) Type II Error (False negative)

This is also called β error. It is the probability of failing to detect a difference when one actually exists, which results in the rejection of an active compound as inactive. This error is more serious than type I error because once a compound has been labeled inactive, nobody may try it again, and an active compound will be lost.[ 11 ] This type of error can be minimized by taking a larger sample and by employing a sufficient dose of the compound under trial. For example, suppose we conclude after a suitable trial that drug ‘A’ has no analgesic activity. Drug ‘A’ may then never be tried by another researcher for its analgesic activity. In spite of having analgesic activity, it will be lost just because of our type II error. Hence, researchers should be very careful before reporting a compound as inactive.
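The trade-off between the two errors can be sketched with the usual normal approximation for a two-sided one-sample z-test with a known SD. This is an assumption made for simplicity, and the far rejection tail is ignored. The type I error rate is simply the chosen α. The approximate type II error β falls as n grows and rises when α is made stricter. The function `type_ii_error`, the effect size, and the SD are hypothetical.

```python
import math
from statistics import NormalDist

def type_ii_error(effect, sd, n, alpha=0.05):
    """Approximate beta for a two-sided one-sample z-test with known SD.
    Only the rejection tail in the direction of the true effect is counted."""
    z_crit = NormalDist().inv_cdf(1 - alpha / 2)                    # 1.96 when alpha = 0.05
    power = NormalDist().cdf(effect * math.sqrt(n) / sd - z_crit)  # probability of detecting the effect
    return 1 - power

ALPHA = 0.05   # type I error rate, fixed in advance by the significance level
for n in (10, 20, 50):
    beta = type_ii_error(effect=5, sd=10, n=n, alpha=ALPHA)
    print(f"n = {n}: type I error = {ALPHA}, type II error ≈ {beta:.2f}")

# A stricter significance level lowers type I error but raises type II error
print(round(type_ii_error(5, 10, 20, alpha=0.01), 2))
```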

Level of Significance

If the probability ( P ) of an event or outcome is high, we say it is not rare or not uncommon; if P is low, we say it is rare or uncommon. In biostatistics, a rare event or outcome is called significant, whereas a non-rare event is called non-significant. The ‘ P ’ value below which we regard an event or outcome as significant is called the significance level.[ 2 ] In medical research, a P value less than 0.05 (5%) is most commonly taken as the significance level. However, on justifiable grounds, we may adopt a different standard, such as P < 0.01 (1%). Whenever possible, it is better to give actual P values instead of P < 0.05.[ 13 ] An estimate from a sample is never certain, because we are dealing with only a part of the population, however big the sample may be. If we place the population value within the 95% confidence limits, we would expect to be wrong in only 5% of cases. "Significant" indicates that a value is unlikely to occur by chance, and "insignificant" that it is likely to. ‘ P ’ is the probability that a difference at least as large as the one observed would occur by chance alone if the null hypothesis were true.
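The "wrong in 5% of cases" statement can be checked by simulation. This Python sketch is our own, with hypothetical parameters: true mean 100, SD 15 treated as known, and n = 40. It draws many samples from a normal population and builds a 95% interval mean ± 1.96·SD/√n for each. It then counts how often the interval contains the true mean. The seed is fixed only to make the run repeatable.

```python
import random
from statistics import NormalDist

def ci_coverage(true_mean=100.0, sd=15.0, n=40, trials=2000, seed=1):
    """Fraction of 95% CIs (mean ± 1.96·SD/√n, SD assumed known) containing the true mean."""
    rng = random.Random(seed)
    z = NormalDist().inv_cdf(0.975)
    half_width = z * sd / n ** 0.5
    hits = 0
    for _ in range(trials):
        sample = [rng.gauss(true_mean, sd) for _ in range(n)]
        m = sum(sample) / n
        if m - half_width <= true_mean <= m + half_width:
            hits += 1
    return hits / trials

print(ci_coverage())   # close to 0.95: the interval misses in about 5% of samples
```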

Sometimes, when we analyze data, one value lies very far from the others. Such a value is called an outlier. This could be due to two reasons. First, the value may be due to chance; in that case, we should keep it in the final analysis, as it comes from the same distribution. Second, it may be due to a mistake, such as a typographical or measurement error. In such cases, the value should be deleted to avoid invalid results.

One-tailed and Two-tailed Test

When comparing two groups of continuous data, the null hypothesis is that there is no real difference between the groups (A and B). The alternative hypothesis is that there is a real difference between the groups. This difference could be in either direction, e.g., A > B or A < B. Sometimes there is a sound reason to know in advance that the difference can only be in one direction (e.g., A > B), so that only one possibility needs to be considered; the test is then called a one-tailed test. When both possibilities are considered, the test of significance is called a two-tailed test. For example, if we know that English boys are taller than Indian boys, the result can lie at only one end (one tail) of the distribution, and hence a one-tailed test is used. When we are not absolutely sure of the direction of difference, which is usual, it is always better to use a two-tailed test.[ 14 ] For example, a new drug ‘X’ is supposed to have antihypertensive activity, and we want to compare it with atenolol. As we do not know the exact direction of the effect of drug ‘X’, a two-tailed test should be preferred. Whenever you want to know whether the action of a particular drug differs from that of another, but the direction is not specified, use a two-tailed test. At present, most journals use two-sided P values as the standard norm in biomedical research.[ 15 ]
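This minimal Python sketch compares the two P values for the same test statistic. It assumes a z statistic of 1.8 (hypothetical) and a normal reference distribution. The one-tailed P is half the two-tailed P, so the same result can be "significant" one-tailed but not two-tailed. This is why the direction must be justified in advance.

```python
from statistics import NormalDist

def p_values(z):
    """One-tailed (upper tail) and two-tailed P values for a z statistic."""
    one_tailed = 1 - NormalDist().cdf(z)
    two_tailed = 2 * (1 - NormalDist().cdf(abs(z)))
    return one_tailed, two_tailed

one, two = p_values(1.8)   # hypothetical test statistic
print(f"one-tailed P = {one:.3f}, two-tailed P = {two:.3f}")   # 0.036 and 0.072
```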

Importance of Sample Size Determination

A sample is a fraction of the universe (the whole population). Studying the entire universe would be ideal, but when the same result can be achieved by studying a fraction of it, a sample is taken. This saves time, manpower, and cost while increasing efficiency. Hence, an adequate sample size is of prime importance in biomedical studies. If the sample is too small, the results will not be valid, and the whole study may be wasted. On the other hand, a large sample requires more cost and manpower, and enrolling more subjects than required is a misuse of resources. A good small sample is much better than a bad large sample. Hence, an appropriate sample size is needed, both ethically and to produce precise results.

Factors Influencing Sample Size Include

- 1) Prevalence of the particular event or characteristic: If the prevalence is high, a small sample can be taken, and vice versa. If the prevalence is not known, it can be obtained from a pilot study.

- 2) Probability level considered for accuracy of the estimate: If we need a greater safeguard for the conclusions drawn from the data, we need a larger sample. Hence, the sample would be larger when the safeguard is 99% than when it is only 95%. Similarly, if only a small difference is expected and we need to detect even that small difference, we need a large sample.

- 3) Availability of money, material, and manpower.

- 4) Time-bound studies curtail the sample size, as is routinely observed with dissertation work in postgraduate courses.

Sample Size Determination and Variance Estimate

To calculate sample size, the formula requires knowledge of the standard deviation or variance, but the population variance is usually unknown. Therefore, the standard deviation has to be estimated. Frequently used sources for estimating the standard deviation are:

- A pilot[ 16 ] or preliminary sample may be drawn from the population, and the standard deviation computed from that sample may be used as the estimate. Observations from the pilot sample may be counted as part of the final sample.[ 17 ]

- Estimates of standard deviation may be available from previous or similar studies,[ 17 ] but these may not always be correct for the present study.

Calculation of Sample Size

Calculation of sample size plays a key role in any research. Before calculating the sample size, the following points must be considered carefully. First, assess the minimum expected difference between the groups. Second, find the standard deviation of the variable (methods for estimating it have been discussed above). Third, set the level of significance (alpha level, generally P < 0.05) and, fourth, the power of the study (1 − beta, generally 80%). Once these parameters are decided, an appropriate formula, usually implemented in a computer program, is used to obtain the sample size. Various software programs for calculating sample size and power are available free of cost. Finally, appropriate allowances are added for non-compliance and dropouts; this gives the final sample size for each group in the study. The two examples below illustrate sample size calculation.

- a) The mean (SD) diastolic blood pressure of hypertensive patients after enalapril therapy is found to be 88 (8) mmHg. It is claimed that telmisartan is better than enalapril, and a trial is to be conducted to test this claim. For convenience, suppose we take the minimum expected difference between the two groups to be 6, with a significance level of 0.05 and 80% power. Results will be analyzed by the unpaired ‘t’ test. Thus, the minimum expected difference is 6, the SD is 8 (from a previous study), the alpha level is 0.05, and the power is 80%. Entering these values into a computer program gives a sample size of 29. If we add an allowance of 4 for non-compliance and dropout, the final sample size for each group would be 33.

- b) The mean (SD) hemoglobin of newborns of pregnant mothers from a low socioeconomic group is observed to be 10.5 (1.4). A study is planned to determine whether iron and folic acid supplementation would increase the hemoglobin level of newborns. There will be two groups, one with supplementation and the other without. The minimum expected difference between the two groups is taken as 1.0, with a 0.05 level of significance and 90% power. Thus, the SD is 1.4 and the minimum difference is 1.0. Entering these values into a computer-based formula gives a sample size of 42, and with a 10% allowance, the final sample size would be 46 in each group.
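As a rough cross-check of the two examples above, the sketch below uses the common normal-approximation formula for comparing two means with a two-sided test, n = 2(z₁₋α/₂ + z₁₋β)²σ²/Δ² per group. This is an assumption on our part: the article does not say which formula its software used. The normal approximation reproduces 42 for example (b) but gives 28 for example (a), one fewer than the quoted 29; exact calculations based on the t distribution typically give a slightly larger n. The helper `with_allowance` (a hypothetical name) adds the dropout allowance as a fraction of n, matching the arithmetic of example (b); another common convention, n / (1 − dropout rate), would give 47 instead of 46.

```python
import math
from statistics import NormalDist


def n_per_group(min_diff: float, sd: float, alpha: float = 0.05, power: float = 0.80) -> int:
    """Per-group sample size for comparing two means with a two-sided test,
    using the normal approximation (an assumption; software may use the t distribution)."""
    z = NormalDist()  # standard normal distribution
    z_alpha = z.inv_cdf(1 - alpha / 2)  # two-sided critical value
    z_beta = z.inv_cdf(power)  # quantile corresponding to the desired power
    n = 2 * (z_alpha + z_beta) ** 2 * sd ** 2 / min_diff ** 2
    return math.ceil(n)  # round up: a fraction of a subject cannot be enrolled


def with_allowance(n: int, fraction: float) -> int:
    """Hypothetical helper: add a dropout/non-compliance allowance as a fraction of n."""
    return n + round(n * fraction)


# Example (a): difference 6, SD 8, alpha 0.05, power 80%
print(n_per_group(6, 8, 0.05, 0.80))
# Example (b): difference 1.0, SD 1.4, alpha 0.05, power 90%, plus a 10% allowance
n_b = n_per_group(1.0, 1.4, 0.05, 0.90)
print(n_b, with_allowance(n_b, 0.10))
```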

Power of Study

Power is the probability that a study will detect a difference between the groups if the difference actually exists. The higher the power, the greater the chance of picking up a difference that really exists. Power is calculated by subtracting the beta error (the probability of a Type II error) from 1; hence, power = 1 − beta. Power is very important when calculating sample size. Power can also be calculated after the study is completed, which is called an a posteriori (post hoc) power calculation; this shows whether the study had enough power to detect the difference if it existed. For a study to be scientifically sound, it should have at least 80% power. If the power of a study is less than 80% and the difference between the groups is not significant, we can only say that a difference between the groups could not be detected, not that there is no difference. In this case, the power is too low to detect an existing difference: the probability of missing a real difference is high, so the study may have failed to detect it. Increasing the power of a study increases the required sample size. It is always better to decide the power of the study at the planning stage.
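As a rough illustration of how power depends on sample size, the sketch below computes approximate power for a two-sided comparison of two means with equal group sizes. It assumes a normal approximation (exact software uses the t distribution) and borrows the figures of example (a) in the sample size section (difference 6, SD 8). The function name `approx_power` is our own.

```python
import math
from statistics import NormalDist


def approx_power(n_per_group: int, min_diff: float, sd: float, alpha: float = 0.05) -> float:
    """Approximate power (1 - beta) of a two-sided, two-sample comparison of means.
    Normal approximation; the negligible probability in the opposite tail is ignored."""
    z = NormalDist()
    z_alpha = z.inv_cdf(1 - alpha / 2)
    se = sd * math.sqrt(2 / n_per_group)  # standard error of the difference in means
    return z.cdf(abs(min_diff) / se - z_alpha)


# Power rises with the number of subjects per group (difference 6, SD 8)
for n in (10, 20, 29, 40):
    print(n, round(approx_power(n, 6, 8), 3))
```

With 29 subjects per group the approximate power is just above 0.80, while 10 per group gives well under 50%.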

How to Choose an Appropriate Statistical Test

There are a number of tests in biostatistics, and the choice mainly depends on the characteristics of the data and the type of analysis. Sometimes we need to find a difference between means or medians; at other times, an association between variables. The number of groups in a study, and therefore the study design, may also vary. Hence, we must decide carefully which test is most appropriate, because an inappropriate test will lead to invalid conclusions. Statistical tests can be divided into parametric and non-parametric tests. If the variables follow a normal distribution, the data can be analyzed with a parametric test; for non-Gaussian distributions, a non-parametric test should be applied. The statistical test should be decided at the start of the study. The following parametric tests are used in the analysis of various types of data.

1) Student's ‘t’ Test

Mr. W. S. Gosset, a statistician working at the Guinness brewery in Dublin, introduced the ‘t’ distribution for small samples and published his work under the pseudonym ‘Student.’ This is one of the most widely used tests in pharmacological investigations, which often involve small samples. The ‘t’ test is typically applied when the sample size is 30 or less; if the sample size is more than 30, the ‘Z’ test is often applied instead, because the t distribution then closely approximates the normal distribution. The test is usually applicable to graded (continuous) data such as blood sugar level, body weight, and height. There are two types of ‘t’ test: paired and unpaired.

When to apply paired and unpaired ‘t’ tests

- a) When two measurements are compared in the same subjects, for example before and after treatment, or after two consecutive treatments, the paired ‘t’ test is used. For example, we may compare the effect of drug A (i.e., decrease in blood sugar) at baseline, before the start of treatment, and after 1 month of treatment with drug A.

- b) When measurements are compared between two different groups, the unpaired ‘t’ test is used. For example, when we compare the effects of drugs A and B (i.e., the mean change in blood sugar from baseline after one month) between the two groups, the unpaired ‘t’ test is applicable.
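The two statistics can be sketched with Python's standard library. The numbers below are hypothetical, made up only to show the arithmetic, and the function names are our own. The paired version is a one-sample t test on the within-subject differences; the unpaired version is the classic pooled-variance (Student's) form, which assumes equal variances in the two groups. The P value is then read from the t distribution with the returned degrees of freedom; if SciPy is available, `scipy.stats.ttest_rel` and `scipy.stats.ttest_ind` return both the statistic and the P value.

```python
import math
from statistics import fmean, stdev, variance


def paired_t(before, after):
    """Paired t statistic: a one-sample t test on the within-subject differences."""
    d = [pre - post for pre, post in zip(before, after)]
    n = len(d)
    t = fmean(d) / (stdev(d) / math.sqrt(n))
    return t, n - 1  # statistic and degrees of freedom


def unpaired_t(x, y):
    """Unpaired (Student's, pooled-variance) t statistic, assuming equal variances."""
    n1, n2 = len(x), len(y)
    pooled_var = ((n1 - 1) * variance(x) + (n2 - 1) * variance(y)) / (n1 + n2 - 2)
    se = math.sqrt(pooled_var * (1 / n1 + 1 / n2))  # SE of the difference in means
    return (fmean(x) - fmean(y)) / se, n1 + n2 - 2


# Hypothetical blood sugar values (mg/dL), made up purely for illustration
before = [150, 160, 145, 170, 155]
after = [140, 152, 140, 160, 150]
print(paired_t(before, after))

drug_a = [10, 12, 14]  # hypothetical fall in blood sugar with drug A
drug_b = [6, 8, 10]    # hypothetical fall in blood sugar with drug B
print(unpaired_t(drug_a, drug_b))
```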

When we want to compare two sets of paired or unpaired data, Student's ‘t’ test is applied. However, when there are three or more sets of data to analyze, we need a more versatile method called analysis of variance (ANOVA), which compares multiple groups at one time.[ 18 ] ANOVA assumes that each sample is drawn randomly from a normally distributed population and that the populations have the same variance. There are two types of ANOVA.

A) One way ANOVA

One-way ANOVA compares three or more unmatched groups when the data are classified in one way. For example, we may compare a control group with three different doses of aspirin in rats. Here, there are four unmatched groups of rats, so one-way ANOVA should be applied. Repeated measures ANOVA should be chosen when the trial uses matched subjects, for example, when the effect of vitamin C supplementation is measured in each subject before, during, and after treatment. Matching should not be based on the variable being compared. For example, if you are comparing blood pressures in two groups, it is better to match on age or other variables, but not on blood pressure. Strictly, the term repeated measures applies when treatments are given repeatedly to each subject. ANOVA works well even if the distribution is only approximately Gaussian, which is why these tests are used routinely in many fields of science. The P value is calculated from the ANOVA table.
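To show where the F value in the ANOVA table comes from, the sketch below computes the one-way ANOVA F statistic (mean square between groups divided by mean square within groups) for hypothetical data; the function name is our own. The P value is the upper tail of the F distribution with (k − 1, N − k) degrees of freedom, where k is the number of groups and N the total number of observations; `scipy.stats.f_oneway` performs the whole test if SciPy is available.

```python
from statistics import fmean


def one_way_anova_f(*groups):
    """One-way ANOVA F statistic: mean square between groups / mean square within groups."""
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    grand_mean = fmean([x for g in groups for x in g])
    # Between-group sum of squares: spread of the group means around the grand mean
    ss_between = sum(len(g) * (fmean(g) - grand_mean) ** 2 for g in groups)
    # Within-group sum of squares: spread of observations around their own group mean
    ss_within = sum((x - fmean(g)) ** 2 for g in groups for x in g)
    df_between, df_within = k - 1, n_total - k
    f = (ss_between / df_between) / (ss_within / df_within)
    return f, df_between, df_within


# Hypothetical responses in three groups (e.g. control and two doses), for illustration only
print(one_way_anova_f([1, 2, 3], [4, 5, 6], [7, 8, 9]))
```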

B) Two way ANOVA

Two-way ANOVA, also called two-factor ANOVA, determines how a response is affected by two factors. For example, you might measure the response to three different drugs in both men and women. Because this test is more complicated, we think it may be of less use to postgraduates.

Importance of post hoc test

Post hoc tests (post tests) are modifications of the ‘t’ test. They account for multiple comparisons, as well as for the fact that the comparisons are interrelated. ANOVA only indicates whether there is a significant difference among the groups or not; if the result is significant, ANOVA does not tell us which groups differ. A post hoc test, however, can pinpoint the exact differences between the groups being compared. Therefore, post hoc tests are very useful. There are five types of post hoc test, namely Dunnett's, Tukey's, Newman-Keuls, Bonferroni, and the test for linear trend between mean and column number.[ 18 ]

How to select a post test?

- I) Select Dunnett's post hoc test if one column represents the control group and we wish to compare all other columns with that control column but not with each other.

- II) Select the test for linear trend if the columns are arranged in a natural order (e.g., dose or time) and we want to test whether values increase (or decrease) as we move from left to right across the columns.

- III) Select the Bonferroni, Tukey's, or Newman-Keuls test if we want to compare all pairs of columns.
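Of the post tests listed, the Bonferroni approach is simple enough to sketch in a few lines: each P value is multiplied by the number of comparisons (capped at 1), which is equivalent to comparing the unadjusted P values against 0.05 divided by the number of comparisons. The P values below are hypothetical and the function name is our own. Tukey's and Dunnett's tests rely on their own reference distributions and are best left to statistical software.

```python
def bonferroni(p_values):
    """Bonferroni adjustment: multiply each P value by the number of comparisons, capped at 1."""
    m = len(p_values)
    return [min(1.0, p * m) for p in p_values]


# Hypothetical unadjusted P values from three pairwise comparisons
raw = [0.01, 0.02, 0.04]
adjusted = bonferroni(raw)
print(adjusted)
print([p < 0.05 for p in adjusted])  # which comparisons remain significant at 0.05
```

Here only the first comparison stays significant after adjustment, even though all three raw P values are below 0.05.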

Following are the non-parametric tests used for analysis of different types of data.

1) Chi-square test

The Chi-square test is a non-parametric test of proportions. It does not assume any particular distribution of the variables being studied. The test statistic itself, however, follows a specific distribution known as the Chi-square distribution, which is very useful in research. The test is most commonly used when data are in the form of frequencies, such as the number of responses in two or more categories. It involves calculating a quantity called Chi-square (χ²), from the Greek letter ‘Chi’ (χ), pronounced ‘Kye.’ It was developed by Karl Pearson.

Applications

- a) Test of proportion: This test is used to find the significance of the difference between two or more proportions.

- b) Test of association: Testing the association between two events in binomial or multinomial samples is the most important application of this test. It measures the probability of association between two discrete attributes. Two events can often be studied for their association, such as smoking and cancer, treatment and outcome of disease, or level of cholesterol and coronary heart disease. In these cases, there are two possibilities: either they influence each other or they do not. In other words, they are either dependent on or independent of each other. Thus, the test measures the probability (P), or relative frequency, that an observed association is due to chance, and hence whether the two events are associated or dependent on each other. The variables used are generally dichotomous, e.g., improved/not improved. If the data are not in that format, the investigator can transform them into dichotomous data by specifying a cut-off, with values above and below it forming the two categories. Multinomial samples are also useful for finding associations between two discrete attributes, for example, testing the association between the number of cigarettes smoked per day (up to 10, 11-20, 21-30, and more than 30) and the incidence of lung cancer. Because such a table presents the joint occurrence of two sets of events, such as treatment and outcome of disease, it is called a contingency table (from the Latin con-, together, and tangere, to touch).

How to prepare a 2 × 2 table

When there are only two samples, each divided into two classes, the table is called a four-cell or 2 × 2 contingency table. In a contingency table, we must enter the actual number of subjects in each category; fractions, percentages, or means cannot be entered. Most contingency tables have two rows (two groups) and two columns (two possible outcomes). The top row usually represents exposure to a risk factor or treatment, and the bottom row the control. The outcomes are entered as columns, with the positive outcome in the first column and the negative outcome in the second. Each subject or patient can appear in only one column, never in both. The following table explains it in more detail:

Even if the sample size is small (< 30), this test can be used with Yates' correction, but the expected frequency in each cell should not be less than 5.[ 19 ] Although the Chi-square test tells us whether there is an association between two events or characters, it does not measure the strength of the association; this is a limitation of the test. It only indicates the probability (P) that the association occurred by chance. Yates' correction is not applicable to tables larger than 2 × 2. When the total number of items in a 2 × 2 table is less than 40, or the number in any cell is less than 5, Fisher's exact test is more reliable than the Chi-square test.[ 20 ]
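A minimal sketch of the 2 × 2 calculation, using the standard shortcut formula χ² = N(ad − bc)² / [(a + b)(c + d)(a + c)(b + d)], where a, b, c, d are the four cell counts (our own labels) laid out with treatment in the top row and the positive outcome in the first column. With Yates' correction, |ad − bc| is reduced by N/2 before squaring. For 1 degree of freedom the P value can be obtained from the complementary error function, so no statistical library is needed. The counts are hypothetical; Fisher's exact test (for example `scipy.stats.fisher_exact`) requires a different calculation.

```python
import math


def chi_square_2x2(a, b, c, d, yates=False):
    """Chi-square statistic for a 2 x 2 table laid out as
                 outcome+  outcome-
        treated     a         b
        control     c         d
    with optional Yates' continuity correction. Returns (chi2, P) for 1 degree of freedom."""
    n = a + b + c + d
    diff = abs(a * d - b * c)
    if yates:
        diff = max(0.0, diff - n / 2)  # continuity correction, not allowed below zero
    chi2 = n * diff ** 2 / ((a + b) * (c + d) * (a + c) * (b + d))
    # With 1 df, chi2 is the square of a standard normal, so P = erfc(sqrt(chi2 / 2))
    p = math.erfc(math.sqrt(chi2 / 2))
    return chi2, p


# Hypothetical counts: 20 of 30 treated patients improved vs 10 of 30 controls
print(chi_square_2x2(20, 10, 10, 20))
print(chi_square_2x2(20, 10, 10, 20, yates=True))
```

As expected, the Yates-corrected statistic is smaller and its P value larger than the uncorrected one.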

2) Wilcoxon-Matched-Pairs Signed-Ranks Test

This is a non-parametric test, used when the data in a paired design are not normally distributed. It is also called the Wilcoxon matched-pairs test. It analyzes only the differences between the paired measurements for each subject. If the P value is small, we can reject the idea that the differences are due to chance and conclude that the populations have different medians.
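The signed-rank statistic itself is easy to compute: drop zero differences, rank the absolute differences (tied values share the average rank), and sum the ranks of the positive and negative differences separately. The sketch below does this with hypothetical data; the function names are our own, and converting W to a P value needs tables or software (e.g. `scipy.stats.wilcoxon`).

```python
def average_ranks(values):
    """Rank values 1..n, giving tied values the average of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # extend j over a run of tied values
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # average of the 1-based positions i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks


def wilcoxon_signed_rank(before, after):
    """Return (W+, W-): sums of ranks of the positive and negative paired differences.
    Zero differences are dropped, as in the usual form of the test."""
    d = [x - y for x, y in zip(before, after) if x != y]
    ranks = average_ranks([abs(v) for v in d])
    w_plus = sum(r for r, v in zip(ranks, d) if v > 0)
    w_minus = sum(r for r, v in zip(ranks, d) if v < 0)
    return w_plus, w_minus


# Hypothetical blood sugar values before and after treatment
print(wilcoxon_signed_rank([150, 160, 145, 170, 155], [140, 152, 140, 160, 150]))
```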

3) Mann-Whitney test

The Mann-Whitney test is, in effect, Student's ‘t’ test performed on ranks. For large samples, it is almost as sensitive as Student's ‘t’ test; for small samples from an unknown distribution, it can be more sensitive than the ‘t’ test. It is generally used when two unpaired groups are to be compared and the data are on an ordinal scale (i.e., ranks and scores) or are not normally distributed.
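The rank-based idea can be sketched as follows: pool the two samples, rank them (ties get average ranks), and convert the rank sum of the first sample into the U statistic, U₁ = R₁ − n₁(n₁ + 1)/2. U₁ equals the number of pairs in which a value from the first sample exceeds one from the second. The samples are hypothetical and the function name is our own; the P value would come from tables or software such as `scipy.stats.mannwhitneyu`.

```python
def mann_whitney_u(x, y):
    """Mann-Whitney U statistics (U1, U2) for two unpaired samples, average ranks for ties."""
    combined = list(x) + list(y)
    order = sorted(range(len(combined)), key=lambda i: combined[i])
    ranks = [0.0] * len(combined)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and combined[order[j + 1]] == combined[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1  # average rank for a run of ties
        i = j + 1
    n1, n2 = len(x), len(y)
    r1 = sum(ranks[:n1])  # rank sum of the first sample
    u1 = r1 - n1 * (n1 + 1) / 2
    u2 = n1 * n2 - u1
    return u1, u2


# Hypothetical ordinal scores in two unpaired groups
print(mann_whitney_u([1, 3, 5], [2, 4, 6]))
```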

4) Friedman test

This is a non-parametric test, which compares three or more paired groups. In this, we have to rank the values in each row from low to high. The goal of using a matched test is to control experimental variability between subjects, thus increasing the power of the test.

5) Kruskal-Wallis test

The Kruskal-Wallis test is a non-parametric test that compares three or more unpaired groups. Non-parametric tests are generally less powerful than parametric tests: P values tend to be higher, making it harder to detect real differences. Therefore, first try to transform the data; sometimes a simple transformation (e.g., logarithmic) will convert non-Gaussian data to a Gaussian distribution. A non-parametric test should be considered only if the outcome variable is a rank or a score with only a few categories [ Table 1 ], the population is clearly far from Gaussian, or one or a few values are off scale (too high or too low to measure).
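The pairings of design and test described in this section can be condensed into a small lookup function. The function name and its three yes/no inputs are our own simplification, not a complete decision rule: it covers numerical or ordinal outcomes only (categorical outcomes go to the Chi-square or Fisher's test), and a post hoc test after ANOVA still has to be chosen separately.

```python
def suggest_test(n_groups: int, paired: bool, gaussian: bool) -> str:
    """Map a comparison design to the test suggested in this article (a rough guide only)."""
    if n_groups < 2:
        raise ValueError("need at least two groups to compare")
    if n_groups == 2:
        if gaussian:
            return "paired t test" if paired else "unpaired t test"
        return "Wilcoxon matched-pairs signed-ranks test" if paired else "Mann-Whitney test"
    # three or more groups
    if gaussian:
        return "repeated measures ANOVA" if paired else "one-way ANOVA"
    return "Friedman test" if paired else "Kruskal-Wallis test"


for design in [(2, True, True), (2, False, False), (3, False, True), (4, True, False)]:
    print(design, "->", suggest_test(*design))
```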

Summary of statistical tests applied for different types of data

Common problems faced by researchers in any trial and how to address them

Whenever a researcher plans an experimental or clinical trial, a number of questions arise. To explain some common difficulties, we will take one example and try to solve them. Suppose we want to perform a clinical trial on the effect of vitamin C supplementation on blood glucose level in patients with type II diabetes mellitus receiving metformin. Two groups of patients will be involved: one group will receive vitamin C and the other a placebo.

a) How much should be the sample size?

In such a trial, the first problem is to determine the sample size. As discussed earlier, the sample size can be calculated if we have the SD, the minimum expected difference, the alpha level, and the power of the study. The SD can be taken from a previous study. If the previous study report is not reliable, you can do a pilot study on a few patients to obtain the SD. The minimum expected difference is decided by the investigator so that the difference would be clinically important. In this case, vitamin C being an antioxidant, we will take the difference in blood sugar level between the two groups to be 15. The significance level may be taken as 0.05, or made more stringent on justifiable grounds, and the power is usually taken as 80%, although it may be increased up to 95%; in both situations, the sample size will increase accordingly. Entering all these values into a computer software program gives the sample size for each group.

b) Which test should I apply?

After calculating the sample size, the next question is which statistical test to apply. If the data are normally distributed, we should use a parametric test; otherwise, a non-parametric test. In this trial, we are measuring blood sugar level in both groups at 0, 6, and 12 weeks. If the data are normally distributed, we can apply repeated measures ANOVA within each group, followed by Tukey's post hoc test if we want to compare all pairs of columns with each other, or Dunnett's post hoc test if we want to compare only the 6-week and 12-week observations with the 0-week (baseline) observation. To see whether vitamin C supplementation has any effect on blood glucose level compared with placebo, we should consider the change from baseline (0 to 12 weeks) in both groups and apply an unpaired two-tailed ‘t’ test, because the direction of the result is not known in advance. If we are comparing effects only at 12 weeks, the paired ‘t’ test can be applied for intra-group comparison and the unpaired ‘t’ test for inter-group comparison. To test for differences in basic demographic data such as the gender ratio in each group, we will have to apply the Chi-square test.

c) Is there any correlation between the variables?

To see whether there is any correlation between age and blood sugar level, or gender and blood sugar level, we will apply Pearson's correlation coefficient if the data follow a Gaussian distribution, or Spearman's rank correlation coefficient if they do not. If you answer all these questions before the start of the trial, it becomes much easier to conduct the research efficiently.
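Spearman's coefficient is simply Pearson's coefficient applied to ranks, which the sketch below makes explicit. The data are hypothetical: y increases with x but not linearly, so Spearman's ρ is exactly 1 while Pearson's r is slightly below 1. The function names are our own; Python 3.10 and later also provide `statistics.correlation` for Pearson's r, but the hand-written version keeps the arithmetic visible.

```python
import math
from statistics import fmean


def pearson_r(x, y):
    """Pearson product-moment correlation coefficient."""
    mx, my = fmean(x), fmean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def ranks(values):
    """Average ranks (1-based); tied values share the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    r = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            r[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return r


def spearman_rho(x, y):
    """Spearman's rank correlation: Pearson's r computed on the ranks."""
    return pearson_r(ranks(x), ranks(y))


# Hypothetical data: y rises with x, but not in a straight line
x = [1, 2, 3, 4, 5]
y = [1, 4, 9, 16, 25]
print(round(pearson_r(x, y), 4), spearman_rho(x, y))
```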

Software for Biostatistics

Statistical computations have become much more feasible owing to the availability of computers and suitable software programs. Nowadays, computers are mostly used for performing statistical tests, as it is very tedious to perform them manually. Commonly used software packages include MS Office Excel, GraphPad Prism, SPSS, NCSS, Instant, Dataplot, SigmaStat, GraphPad InStat, SYSTAT, GenStat, MINITAB, SAS, STATA, and Sigma Graph Pad. Free websites for statistical software include www.statistics.com and http://biostat.mc.vanderbilt.edu/wiki/Main/PowerSampleSize .

Statistical methods are necessary to draw valid conclusions from data. Postgraduate students should be aware of the different types of data, measures of central tendency, and the tests commonly used in biostatistics, so that they can apply these tests and analyze data themselves. This article provides background information and attempts to highlight the basic principles of statistical techniques and methods for postgraduate students.


Biostatistics-Lecture 3 Estimation , confidence interval and hypothesis testing

Aug 11, 2014


Presentation Transcript

Biostatistics-Lecture 3: Estimation, confidence interval and hypothesis testing • Ruibin Xi • Peking University School of Mathematical Sciences

Some Results in Probability (1) • Suppose that X, Y are independent • E(cX) = ? (c is a constant) • E(X+Y) = ? • Var(cX) = ? • Var(X+Y) = ? • Suppose $X_1, \dots, X_n$ are mutually independent and identically distributed (i.i.d.)

Some Results in Probability (2) • The Law of Large Numbers (LLN) • Assume BMI follows a normal distribution with mean 32.3 and SD 6.13

Some Results in Probability (3) • The Central Limit Theorem (CLT)

Statistical Inference • Draw conclusions about a population from a sample • Two approaches • Estimation • Hypothesis testing

Estimation • Point estimation—summary statistics from sample to give an estimate of the true population parameter • The LLN implies that when n is large, these should be close to the true parameter values • These estimates are random • Confidence intervals (CI): indicate the variability of point estimates from sample to sample

Confidence interval • Assume $X_1, \dots, X_n$ are i.i.d. $N(\mu, \sigma^2)$; then $\bar{X} \sim N(\mu, \sigma^2/n)$ (σ is known) • Confidence interval of level 95%: $\bar{X} \pm 1.96\,\sigma/\sqrt{n}$ • If we repeatedly construct the confidence interval, 95% of the intervals will cover μ • In the BMI example, μ=32.3, σ=6.13, n = 20

Confidence Interval for the Mean • Assume $X_1, \dots, X_n$ are i.i.d. $N(\mu, \sigma^2)$; then $\bar{X} \sim N(\mu, \sigma^2/n)$ (σ is known) • Confidence interval of level 1-α: $\bar{X} \pm z_{1-\alpha/2}\,\sigma/\sqrt{n}$, where $z_{1-\alpha/2}$ is the 1-α/2 quantile of the standard normal distribution • What if σ is unknown? Use the t-statistic!

Confidence Interval for the Mean • Assume $X_1, \dots, X_n$ are i.i.d. $N(\mu, \sigma^2)$; then the sample variance $S^2 \to \sigma^2$ by the LLN • Replacing σ² by S², $T = (\bar{X} - \mu)/(S/\sqrt{n})$ follows the t distribution with n-1 degrees of freedom • Confidence interval of level 1-α: $\bar{X} \pm t_{n-1,\,1-\alpha/2}\,S/\sqrt{n}$, where $S/\sqrt{n}$ is the standard error (SE)

Confidence Interval for the Mean • Measure serum cholesterol in 100 adults • Construct a 95% CI for the mean serum cholesterol based on the t-distribution • Compare it with the CI based on the normal distribution

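Confidence interval based on the CLT • Assume $X_1, \dots, X_n$ are i.i.d. random variables with population mean μ and population variance σ² • How can we construct a CI for μ? • From the CLT, approximately, $\sqrt{n}(\bar{X} - \mu)/\sigma \sim N(0, 1)$ • From the LLN, $S^2 \to \sigma^2$ • The asymptotic CI of level 1-α is $\bar{X} \pm z_{1-\alpha/2}\,S/\sqrt{n}$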
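The asymptotic interval can be sketched with the standard library; the function name is our own. For the t-based interval, the normal quantile would be replaced by the t quantile on n − 1 degrees of freedom (for example `scipy.stats.t.ppf(1 - alpha / 2, n - 1)`). The simulated BMI sample, drawn from N(32.3, 6.13²) with n = 200 and an arbitrary seed, is for illustration only.

```python
import math
import random
from statistics import NormalDist, fmean, stdev


def asymptotic_mean_ci(data, level=0.95):
    """Large-sample (CLT-based) CI for the mean: xbar +/- z_{1-alpha/2} * S / sqrt(n)."""
    n = len(data)
    xbar = fmean(data)
    se = stdev(data) / math.sqrt(n)  # standard error, with S estimating sigma
    z = NormalDist().inv_cdf(1 - (1 - level) / 2)
    return xbar - z * se, xbar + z * se


# Simulated BMI values for illustration only (not real data)
rng = random.Random(2014)
bmi = [rng.gauss(32.3, 6.13) for _ in range(200)]
print(asymptotic_mean_ci(bmi))
```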

Confidence Interval for the proportions • Telomerase • a ribonucleoprotein polymerase • maintains telomere ends by adding the telomere repeat TTAGGG • usually suppressed in postnatal somatic cells • Cancer cells (~90%) often have increased telomerase activity, making them immortal (e.g. HeLa cells) • A subunit of telomerase is encoded by the gene TERT (telomerase reverse transcriptase)

Confidence Interval for the proportions • Huang et al. (2013) found that TERT promoter mutation is highly recurrent in human melanoma • 50 of 70 have the mutation • Construct a 95% CI for the proportion (p) of melanoma genomes that have the TERT promoter mutation • From the data above, our estimate is $\hat{p} = 50/70 \approx 0.714$ • The standard error is $\sqrt{\hat{p}(1-\hat{p})/n} \approx 0.054$ • The CI is $\hat{p} \pm 1.96 \times SE \approx (0.61, 0.82)$ • Note: for this normal approximation to be good, we need p and 1-p ≥ 5/n
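The calculation on this slide can be reproduced as a sketch of the normal-approximation (Wald) interval for a proportion; the function name is our own. It also checks the slide's rule of thumb that p and 1 − p should both be at least 5/n.

```python
import math
from statistics import NormalDist


def wald_proportion_ci(successes, n, level=0.95):
    """Normal-approximation (Wald) CI for a proportion: p_hat +/- z * sqrt(p_hat(1-p_hat)/n)."""
    p_hat = successes / n
    se = math.sqrt(p_hat * (1 - p_hat) / n)
    z = NormalDist().inv_cdf(1 - (1 - level) / 2)
    # Rule of thumb from the slide: p and 1 - p should both be >= 5/n
    ok = min(p_hat, 1 - p_hat) >= 5 / n
    return p_hat, se, (p_hat - z * se, p_hat + z * se), ok


p_hat, se, (lo, hi), ok = wald_proportion_ci(50, 70)
print(f"p_hat={p_hat:.3f} SE={se:.3f} CI=({lo:.3f}, {hi:.3f}) approximation ok: {ok}")
```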

Hypothesis testing • Scientific research often starts with a hypothesis • Aspirin can prevent heart attack • Imatinib can treat CML patients • TERT mutation can promote tumor progression • Collect data and perform statistical analysis to see whether the data support the hypothesis

Steps in hypothesis testing • Step 1. State the hypothesis • Null hypothesis H0: no difference, effect is zero, or no improvement • Alternative hypothesis H1: some difference, effect is nonzero • Directionality: one-tailed (e.g. H1: μ < constant) or two-tailed (e.g. H1: μ ≠ constant)

Steps in hypothesis testing • Step 2. Choose an appropriate statistic • The test statistic depends on your hypothesis • Comparing two means: z-test or t-test • Testing independence of two categorical variables: Fisher's test or chi-square test

Steps in hypothesis testing • Step 3. Choose the level of significance, α • How much confidence do you want in the decision to reject the null hypothesis? • α is also the Type I error or false positive level • Typically 0.05 or 0.01

Steps in hypothesis testing • Step 4. Determine the critical value of the test statistic that must be obtained to reject the null hypothesis at the chosen significance level • Example: for a two-tailed z-test at the 0.05 significance level, the rejection region is |z| > 1.96

Steps in hypothesis testing • Step 5. Calculate the test statistic • Example: t-statistic • Step 6. Compare the test statistic to the critical value • If the test statistic is more extreme than the critical value, reject H0 DO NOT ACCEPT H1 • Otherwise, Do Not reject or Fail to reject H0 DO NOT ACCEPT H0

Steps in hypothesis testing: an example • Data: Pima.tr in the MASS package • Data from women of Pima Indian heritage (aged ≥ 21) living in the USA, tested for diabetes • Question: Is the mean BMI of women of Pima Indian heritage living in the USA and tested for diabetes the same as the mean BMI of women in general (26.5)? • Step 1. State the hypothesis • Let μ be the mean BMI of women of Pima Indian heritage living in the USA • H0: μ=26.5; H1: μ≠26.5

Steps in hypothesis testing: an example • Step 2. Choose an appropriate test • Two-sided t-test • Hypotheses: H0: μ=μ0; H1: μ≠μ0 • Assumptions: $X_1, \dots, X_n$ are independent, σ is unknown • Test statistic $T = (\bar{X} - \mu_0)/(S/\sqrt{n})$ (under H0, follows $t_{n-1}$) • Critical value $C_{cri,\alpha} = t_{n-1,\,1-\alpha/2}$ • Check if the test is appropriate

Steps in hypothesis testing: an example • Step 3. Choose a significance level α=0.05 • Step 4. Determine the critical value • With n = 200, $C_{cri,0.05} = t_{199,\,0.975}$ • Step 5. Calculate the test statistic • Step 6. Compare the test statistic to the critical value • Since $|t| > C_{cri,0.05}$, we reject the null hypothesis

P-value • We often want to see how extreme the observed data are if the null is true • P-value: the probability of observing data at least as extreme as yours under the null • Equivalently, the smallest significance level at which the null would be rejected • In the previous example, P-value = P(|T| > |t|) = 1.3e-29

Making errors • Type I error (false positive) • Reject the null hypothesis when the null hypothesis is true • The probability of Type I error is controlled by the significance level α • Type II error (false negative) • Fail to reject the null hypothesis when the null hypothesis is false • Power = 1- probability of Type II error = 1- β • Power = P(reject H0 | H0 is false) • Which error is more serious? • Depends on the context • In the classic hypothesis testing framework, Type I error is more serious
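The statement that the significance level controls the probability of a Type I error can be checked by simulation: generate many samples for which H0 is true, test each one, and count how often H0 is wrongly rejected. The sketch below does this for a two-sided one-sample z-test with known σ; all settings (n = 20, σ = 1, 10,000 repetitions, the seed) and the function name are arbitrary choices for illustration.

```python
import math
import random
from statistics import NormalDist, fmean


def type_i_error_rate(n=20, sigma=1.0, alpha=0.05, reps=10000, seed=1):
    """Simulate a two-sided one-sample z-test when H0 (mu = 0) is TRUE and
    return the fraction of repetitions in which H0 is (wrongly) rejected."""
    rng = random.Random(seed)
    crit = NormalDist().inv_cdf(1 - alpha / 2)
    rejections = 0
    for _ in range(reps):
        sample = [rng.gauss(0.0, sigma) for _ in range(n)]  # data generated under H0
        z = fmean(sample) / (sigma / math.sqrt(n))  # sigma treated as known
        if abs(z) > crit:
            rejections += 1
    return rejections / reps


print(type_i_error_rate())  # expected to be close to alpha = 0.05
```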

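Making Errors • Here’s an illustration of the four situations in a hypothesis test: • H0 true, reject H0: Type I error, probability α • H0 true, fail to reject H0: correct decision, probability 1-α • H0 false, reject H0: correct decision, probability Power = 1-β • H0 false, fail to reject H0: Type II error, probability β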

Making Errors (cont.) • When H0 is false and we fail to reject it, we have made a Type II error. • We assign the letter β to the probability of this mistake. • It’s harder to assess the value of β because we don’t know what the value of the parameter really is. • There is no single value for β; we can think of a whole collection of β’s, one for each incorrect parameter value.

Making Errors (cont.) • We could reduce β for all alternative parameter values by increasing α. • This would reduce β but increase the chance of a Type I error. • This tension between Type I and Type II errors is inevitable. • The only way to reduce both types of errors is to collect more data. Otherwise, we just wind up trading off one kind of error against the other.

Power • When H0 is false and we reject it, we have done the right thing. • A test’s ability to detect a false hypothesis is called the power of the test. • The power of a test is the probability that it correctly rejects a false null hypothesis. • When the power is high, we can be confident that we’ve looked hard enough at the situation. • The power of a test is 1 − β.

Reducing Both Type I and Type II Errors • [Figure: the original comparison alongside the same comparison with a larger sample size]

Hypothesis test for single proportion • Kantarjian et al. (2012) studied the effect of imatinib therapy on CML patients • CML: chronic myelogenous leukemia • 95% of patients have the BCR-ABL gene fusion • Imatinib was introduced to target the gene fusion • Since 2001, the 8-year survival rate of CML patients in chronic phase is 87% (361/415) (with imatinib treatment) • Before 1990: 20% • 1991-2000: 45%

Hypothesis test for single proportion • Suppose that we want to test whether imatinib can improve the 8-year survival rate • Step 1. State the hypothesis • H0: μ=0.45 vs H1: μ > 0.45 (μ is the 8-year survival rate with imatinib treatment) • Step 2. Choose an appropriate test • Z-test based on the CLT • Test statistic $Z = (\hat{\mu} - 0.45)/\sqrt{0.45(1-0.45)/n}$ • Follows the standard normal distribution under the null • Reject the null if $z > C_{crt}$

Hypothesis test for single proportion • Step 3. Choose the significance level α=0.01 • Step 4. Determine the critical value: $C_{crt} = z_{0.99} \approx 2.33$ • Step 5. Calculate the test statistic • Step 6. Compare the test statistic with the critical value; reject the null • P-value = 1.4e-66
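The imatinib example can be recomputed as a sketch, assuming (our assumption) a test statistic that uses the null standard error sqrt(p0(1 − p0)/n), with p0 = 0.45 and 361 survivors out of 415. This gives z ≈ 17.19, far beyond the one-sided critical value of about 2.33 for α = 0.01, and a P value of the same order as the one reported on the slide. The function name is our own.

```python
import math
from statistics import NormalDist


def one_proportion_z_test(successes, n, p0):
    """One-sided (H1: p > p0) z-test for a single proportion,
    using the null standard error sqrt(p0 * (1 - p0) / n) (an assumption)."""
    p_hat = successes / n
    z = (p_hat - p0) / math.sqrt(p0 * (1 - p0) / n)
    # Upper-tail P value; erfc keeps precision for very small tails,
    # where 1 - NormalDist().cdf(z) would round to exactly 0.
    p_value = 0.5 * math.erfc(z / math.sqrt(2))
    return z, p_value


z, p = one_proportion_z_test(361, 415, 0.45)
crit = NormalDist().inv_cdf(0.99)  # one-sided critical value for alpha = 0.01
print(f"z = {z:.2f}, critical value = {crit:.2f}, reject H0: {z > crit}, P = {p:.2g}")
```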

- More by User

ESTIMATION & HYPOTHESIS TESTING

ESTIMATION & HYPOTHESIS TESTING. Dr Liddy Goyder Dr Stephen Walters. At the end of session, you should know about: The process of setting and testing statistical hypotheses At the end of session, you should be able to: Explain: Null hypothesis P-value, and what different values mean

3.1k views • 47 slides

Confidence Interval and Hypothesis Testing for:

Confidence Interval and Hypothesis Testing for:. Population Mean ( ). Assumptions & Conditions. Random sample Independent observations Nearly normal distribution y ~ N ( , / n ) . Student ’ s t-Model for decisions about the mean, . -. y - . t =. s. n. With df=n-1.

630 views • 27 slides

Confidence Interval Estimation

IE 340/ 440 PROCESS IMPROVEMENT THROUGH PLANNED EXPERIMENTATION. Confidence Interval Estimation. Dr. Xueping Li University of Tennessee. Chapter Topics. Estimation Process Point Estimates Interval Estimates Confidence Interval Estimation for the Mean ( Known)

881 views • 39 slides

Introduction to Biostatistics/Hypothesis Testing

Introduction to Biostatistics/Hypothesis Testing. Brian Healy, PhD. Course objectives. Introduction to concepts of biostatistics Type of data Hypothesis testing p-value Choosing the best statistical test Study design When you should get help Statistical thinking, not math proofs.

1.51k views • 56 slides

Lecture 2.4 Preview: Interval Estimates and Hypothesis Testing

Lecture 2.4 Preview: Interval Estimates and Hypothesis Testing. Clint’s Assignment: Taking Stock. Estimate Reliability: Interval Estimate Question. Normal Distribution versus the Student t -Distribution: One Last Complication.

246 views • 15 slides

Estimation and Hypothesis Testing

Estimation and Hypothesis Testing. The Investment Decision. What would you like to know? What will be the return on my investment? Not possible PDF for return. Assume the normal PDF Use statistics to estimate E[r] and s . Use statistics to estimate the correct PDF.


Confidence Intervals, Hypothesis Testing

Confidence Intervals, Hypothesis Testing. Example 1.


Biostatistics-Lecture 4 More about hypothesis testing

Biostatistics Lecture 4: More about hypothesis testing. Ruibin Xi, Peking University School of Mathematical Sciences. Comparing two populations: the two-sample z-test. Consider Fisher’s Iris data: we are interested in whether the Sepal.Length of setosa and versicolor is the same.
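A two-sample z-test compares the difference in means with its standard error. The sketch below works from summary statistics and uses the large-sample approximation, with sample SDs standing in for population SDs. The numbers are hypothetical and are not the actual Iris measurements; the function name is illustrative.

```python
from math import erfc, sqrt


def two_sample_z(mean1, sd1, n1, mean2, sd2, n2):
    """Two-sided two-sample z-test of H0: mu1 = mu2 (large-sample approximation)."""
    se = sqrt(sd1**2 / n1 + sd2**2 / n2)  # standard error of the difference in means
    z = (mean1 - mean2) / se
    p_value = erfc(abs(z) / sqrt(2))      # equals 2 * (1 - Phi(|z|))
    return z, p_value


# Hypothetical summary statistics for two groups of 50 flowers each
z, p = two_sample_z(5.0, 0.4, 50, 5.9, 0.5, 50)
print(f"z = {z:.2f}, P = {p:.1e}")
```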


Confidence Intervals and Hypothesis Testing

Confidence Intervals and Hypothesis Testing. Making Inferences. In this unit we will use what we know about the normal distribution, along with some new information, to make inferences about populations. Before we can do that, we need to understand more about sampling.


Ch. 8: Confidence Interval Estimation

Ch. 8: Confidence Interval Estimation. In Chapter 6, we had information about the population and, using the theory of sampling distributions (Chapter 7), we learned about the properties of samples (what are they?).

433 views • 14 slides

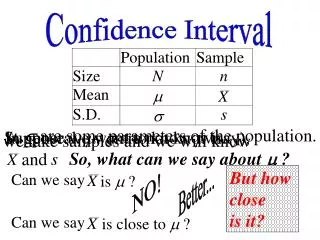

Confidence Interval

But how close is it? Confidence interval: $\mu$ and $\sigma$ are some parameters of the population. Suppose we want to know $\mu$ (say). In general, $\mu$ and $\sigma$ are not known; we take samples, and then we know $\bar{x}$ and $s$. So, what can we say about $\mu$ and $\sigma$? Can we say $\bar{x}$ is $\mu$? No! Can we say …


Confidence Interval Estimation. Lesson Objective. Learn how to construct a confidence interval estimate for many situations. L.O.P. Understand the meaning of being “95%” confident by using a simulation.
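The simulation idea can be sketched as follows: draw many samples from a known normal population, build a 95% interval from each, and count how often the interval covers the true mean. This is a minimal sketch that assumes σ is known (so the z interval applies); the population values, sample size, number of repetitions, and seed are arbitrary illustrative choices.

```python
import random
from math import sqrt
from statistics import NormalDist


def simulate_coverage(mu=10.0, sigma=2.0, n=30, reps=2000, confidence=0.95, seed=1):
    """Fraction of z intervals (sigma known) that contain the true mean mu."""
    rng = random.Random(seed)
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    half_width = z * sigma / sqrt(n)
    hits = 0
    for _ in range(reps):
        x_bar = sum(rng.gauss(mu, sigma) for _ in range(n)) / n
        if x_bar - half_width <= mu <= x_bar + half_width:
            hits += 1
    return hits / reps


print(f"Observed coverage: {simulate_coverage():.3f}")
```

The observed coverage should land near 0.95 and varies slightly with the seed; that long-run proportion of intervals capturing $\mu$, not a probability about any single interval, is what "95% confident" refers to.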


Hypothesis Testing Lecture

Hypothesis Testing Lecture. Statistics 509, E. A. Pena. Overview of this lecture: the problem of hypothesis testing.


Confidence Interval Estimation. For statistical inference in decision making: Chapter 6. Objectives: Central Limit Theorem; confidence interval estimation of the mean (σ known); interpretation of the confidence interval; confidence interval estimation of the mean (σ unknown).
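The Central Limit Theorem can be illustrated with a short simulation: means of samples drawn from a skewed population still cluster around the population mean, with a spread close to $\sigma/\sqrt{n}$. This is a minimal sketch; the exponential population (mean 1, SD 1), the sample size, and the seed are arbitrary illustrative choices.

```python
import random
from math import sqrt
from statistics import stdev


def clt_demo(n=40, reps=5000, seed=2):
    """Simulate sample means from an Exponential(rate=1) population (mean 1, SD 1)."""
    rng = random.Random(seed)
    means = [sum(rng.expovariate(1.0) for _ in range(n)) / n for _ in range(reps)]
    se = 1.0 / sqrt(n)  # theoretical standard error sigma / sqrt(n), with sigma = 1
    # Share of sample means within 1.96 standard errors of the true mean
    within = sum(abs(m - 1.0) <= 1.96 * se for m in means) / reps
    return sum(means) / reps, stdev(means), se, within


avg, sd, se, within = clt_demo()
print(f"mean of means = {avg:.3f}, SD of means = {sd:.4f}, sigma/sqrt(n) = {se:.4f}")
print(f"share within 1.96 SE = {within:.3f}")
```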


Confidence intervals and hypothesis testing

Confidence intervals and hypothesis testing. Petter Mostad, 2005.10.03. Confidence intervals (repetition): assume $\mu$ and $\sigma^2$ are some real numbers, and assume the data $X_1, X_2, \ldots, X_n$ are a random sample from $N(\mu, \sigma^2)$. Then … thus … so …


PY1PR1 lecture 3: Hypothesis testing

PY1PR1 lecture 3: Hypothesis testing. Dr David Field. Summary: null hypothesis and alternative hypothesis; statistical significance (p-value, alpha level); one-tailed and two-tailed predictions; what is a true experiment? (random allocation to conditions); outcomes of experiments.
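The difference between one-tailed and two-tailed predictions shows up directly in the P value. This is a minimal sketch for a z statistic, assuming a "greater than" directional alternative for the one-tailed case; the value z = 1.8 and the function name are illustrative.

```python
from math import erfc, sqrt


def z_p_values(z):
    """Return (one-tailed P for H1: effect > 0, two-tailed P) for a z statistic."""
    one_tailed = 0.5 * erfc(z / sqrt(2))     # P(Z >= z)
    two_tailed = erfc(abs(z) / sqrt(2))      # P(|Z| >= |z|)
    return one_tailed, two_tailed


one, two = z_p_values(1.8)
print(f"one-tailed P = {one:.4f}, two-tailed P = {two:.4f}")
```

With z = 1.8 the one-tailed P is about 0.036 and the two-tailed P about 0.072, so at α = 0.05 the same result is significant only under the one-tailed prediction.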


Confidence Interval Estimation. For statistical inference in decision making. Objectives: Central Limit Theorem; confidence interval estimation of the mean (σ known); interpretation of the confidence interval; confidence interval estimation of the mean (σ unknown).


Hypothesis testing and parameter estimation

Hypothesis testing and parameter estimation. Bhuvan Urgaonkar; “Empirical methods in AI” by P. Cohen. System behavior in unknown situations: self-tuning systems ought to behave properly in situations not previously encountered.


Ultra-low dose contraception. STA 102: Introduction to Biostatistics. Oral contraceptive pills work well, but they must have a precise dose of estrogen. If a pill’s dose is too high, women may risk side effects such as headaches, nausea, and rare but potentially fatal blood clots. If the dose is too low, women may become pregnant.

The hypotheses are $H_0: \mu = 175$ vs. $H_1: \mu \neq 175$. The observed test statistic is $t_{\text{obs}} = 1.58$. Critical-value method: at a significance level of 5%, the rejection region is $t > 2.262$ or $t < -2.262$. Since the observed test statistic satisfies $-2.262 < 1.58 < 2.262$, we cannot reject $H_0$ at the 5% significance level.
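The critical-value method reduces to comparing the observed statistic with $\pm t_{\text{crit}}$. The value 2.262 is the 97.5th percentile of Student’s t distribution with 9 degrees of freedom, which would correspond to a one-sample test on 10 observations. The function name below is hypothetical.

```python
def reject_two_sided(t_obs, t_crit):
    """Critical-value method: reject H0 when t_obs falls in t > t_crit or t < -t_crit."""
    return t_obs > t_crit or t_obs < -t_crit


# Values from the example: t_obs = 1.58, critical value 2.262 at the 5% level
print(reject_two_sided(1.58, 2.262))  # False: 1.58 lies between -2.262 and 2.262
```

Because the rule is symmetric, the decision is the same whether the observed statistic is 1.58 or -1.58.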

Introduction to Biostatistics. Georgi Iskrov, MBA, MPH, PhD, Department of Social Medicine.

Hypothesis test • H0: mean_left = mean_right • Paired continuous data with side as the explanatory variable • Paired t-test • Mean difference = 0.063 • p-value = 0.046 • Since the p-value is less than 0.05, we can reject the null hypothesis • We conclude that the intensity differs between the two sides of the brain.
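The paired t-test works on the within-pair differences. This is a minimal sketch: the left/right measurements are hypothetical (the study data are not given), and because the standard library has no t distribution, the sketch returns the statistic and degrees of freedom rather than a P value.

```python
from math import sqrt
from statistics import mean, stdev


def paired_t(left, right):
    """Return (mean difference, t, df) for H0: mean_left = mean_right on paired data."""
    if len(left) != len(right):
        raise ValueError("paired samples must have the same length")
    diffs = [a - b for a, b in zip(left, right)]
    n = len(diffs)
    d_bar = mean(diffs)
    t = d_bar / (stdev(diffs) / sqrt(n))
    return d_bar, t, n - 1


# Hypothetical intensities measured on both sides for five subjects
d_bar, t, df = paired_t([1.2, 1.5, 1.1, 1.4, 1.3], [1.1, 1.4, 1.1, 1.2, 1.2])
print(f"mean difference = {d_bar:.3f}, t = {t:.3f}, df = {df}")
```

Pairing removes between-subject variation, so the test is a one-sample t-test of the differences against zero.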

INTRODUCTION TO BIOSTATISTICS. Dr. S. Shaffi Ahamed, Asst. Professor, Dept. of Family and Community Medicine, KKUH. This session covers: • Origin and development of biostatistics • Definition of statistics and biostatistics • Reasons to know about biostatistics • Types of data • Graphical representation of data ...

STA 102: Introduction to Biostatistics. Yue Jiang, February 25, 2020. The following material was used by Yue Jiang during a live lecture. Without the accompanying oral comments, the text is incomplete as a record of the presentation. Department of Statistical Science, Duke University. Lecture 12.


Introduction. Understanding the relationship between sampling distributions, probability distributions, and hypothesis testing is crucial in the NHST (Null Hypothesis Significance Testing) approach to inferential statistics, and many introductory textbooks are excellent here. I will add some here to their discussion, perhaps with a different approach, but the ...

Biostatistics. Unit 7: Hypothesis Testing. Testing Hypotheses. Biostatistics. Unit 9: Regression and Correlation. Regression and correlation analysis studies the relationships between variables. This area of statistics was started in the 1860s by Francis Galton (1822-1911 ...

Abstract. Statistical methods are important for drawing valid conclusions from the data obtained. This article provides background information on fundamental methods and techniques in biostatistics for the use of postgraduate students. The main focus is on types of data, measurement of central variations, and basic tests, which are useful ...


Oral HIV tests give positive or negative results depending on the levels of HIV antibodies detected in saliva. If the antibody level is above a certain threshold, the test is classified as positive. Varying the threshold for a positive vs. negative test will result in a test with different characteristics.
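The effect of moving the threshold can be sketched by computing sensitivity (the share of truly infected people who test positive) and specificity (the share of uninfected people who test negative) at two cut-offs. The antibody levels, thresholds, and function name are hypothetical.

```python
def sensitivity_specificity(infected, uninfected, threshold):
    """A result is classified positive when the antibody level is above the threshold."""
    true_pos = sum(level > threshold for level in infected)
    true_neg = sum(level <= threshold for level in uninfected)
    return true_pos / len(infected), true_neg / len(uninfected)


# Hypothetical saliva antibody levels (arbitrary units)
infected = [3.1, 4.5, 2.2, 5.0, 3.8]
uninfected = [0.5, 1.2, 2.5, 0.9, 1.8]

for cutoff in (2.0, 3.0):
    sens, spec = sensitivity_specificity(infected, uninfected, cutoff)
    print(f"threshold {cutoff}: sensitivity = {sens:.2f}, specificity = {spec:.2f}")
```

In this toy data, raising the threshold from 2.0 to 3.0 lowers sensitivity from 1.00 to 0.80 and raises specificity from 0.80 to 1.00, which is the trade-off described above.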
